AI Is Starting to Look Like the Dot Com Bubble

(futurism.com)

AI is bringing us functional things though.

.Com was about making webtech to sell companies to venture capitalists, who would then sell the company to a bigger company. It was literally about window-dressing garbage to make a business proposition.

Of course there's some of that going on in AI, but there's also a hell of a lot of deeper opportunity being made.

What happens if you take a well-done video college course, in every subject, and train an AI that's both good at working with people in a teaching frame and properly versed in the subject matter? You take the course, and in real time you can stop it and ask the AI teacher questions. It helps you, responding exactly to what you ask, and then gives you a quick quiz to make sure you understand. What happens when your class doesn't need to be at a certain time of the day or night? What happens if you don't need an hour and a half to sit down and consume the data?

What if secondary education is simply one-on-one tutoring with an AI? How far could we get as a species if this was given to the world freely? What if everyone could advance as far as their interest let them? What if AI translation gets good enough that language is no longer a concern?

AI has a lot of the same hallmarks and a lot of the same investors as crypto and half a dozen other partially or completely failed ideas. But there's an awful lot of new things that can be done that could never be done before. To me that signifies there's real value here.

*dictation fixes

You get stupid-ass students because an AI producing word-salad is not capable of critical thinking.

It would appear to me that you've not been exposed to much in the way of current AI content. We've moved past the shitty news articles from 5 years ago.

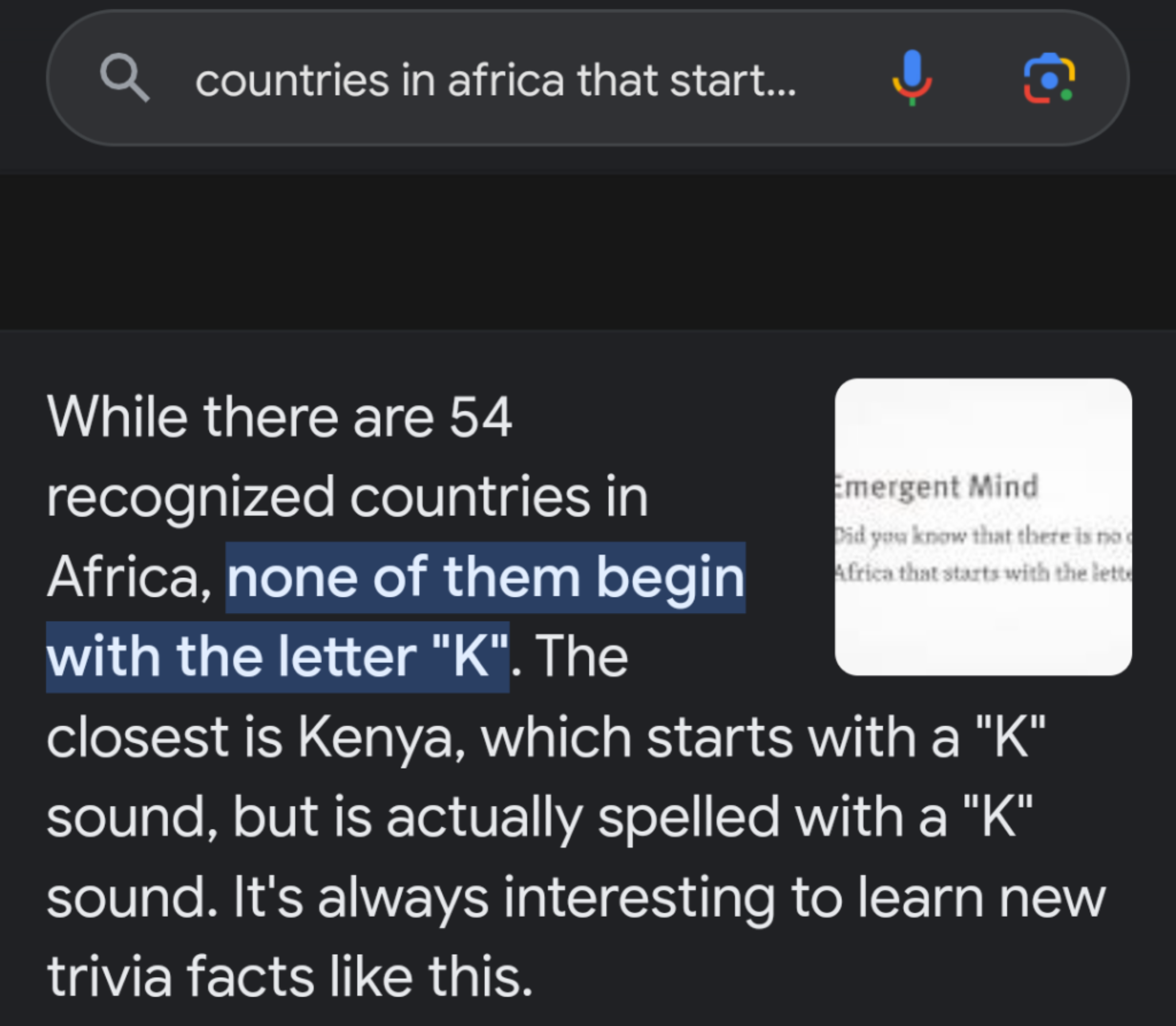

Five years ago? Try last month.

Or hell, why not try literally this instant.

You make it sound like the tech is completely incapable of uttering a legible sentence.

In one article you have people actively trying to fuck with it to make it screw up. And in your other example you picked the most unstable of the new engines out there.

OMG, it answered a question wrong once! The tech is completely unusable for anything. Throw it away, throw it away.

I hate to say it, but the sky's not falling. The tech is still usable, and it's actually the reason why I said we need a specialized model to provide the raw data and grade the responses, using the general model only for conversation and for gathering bullet points for the questions and responses. It's close enough to flawless at that that it'll be fine with some guardrails.

Oh, please. AI does shit like this all the time. Ask it to spell something backwards, it'll screw up horrifically. Ask it to sort a list of words alphabetically, it'll give shit out of order. Ask it something outside of its training model, and you'll get a nonsense response because LLMs are not capable of inference and deductive reasoning. And you want this shit to be responsible for teaching a bunch of teenagers? The only thing they'd learn is how to trick the AI teacher into writing swear words.

Having an AI for a teacher (even as a one-on-one tutor) is about the stupidest idea I've ever heard of, and I've heard some really fucking dumb ideas from AI chuds.