But I thought armies of teenagers were starting tech businesses?!

A buddy of mine is into vibe coding, but he actually does know how to code as well. He will iterate on the code with the LLM until he thinks it will work. I can believe it saves time, but you still have to know what you are doing.

The most amazing thing about vibe coding is that in my 20-odd years of professional programming, the thing I’ve had to beg and plead for the most was code reviews.

Everyone loves writing code; no one, it seems, much enjoys reading other people’s code.

Somehow, though, vibe coding (and other LLM-guided coding) has made people go “I’ll skip the part where I write code and let an LLM generate a bunch of code that I’ll review.”

Either people have fundamentally changed, which is unlikely, or there are just a lot more people who are willing to skim over a pile of autogenerated code, go “yea, I’m sure it’s fine” and open a PR.

I don't think it saves time. You spend more time trying to explain why it's wrong and which approach the LLM should take next, at which point it actually would've been faster to read the documentation and do it yourself. At least then you'll understand the code better.

Vibe coding tools are very useful when you want to make a tech movie but the hollywood command just does not cut it.

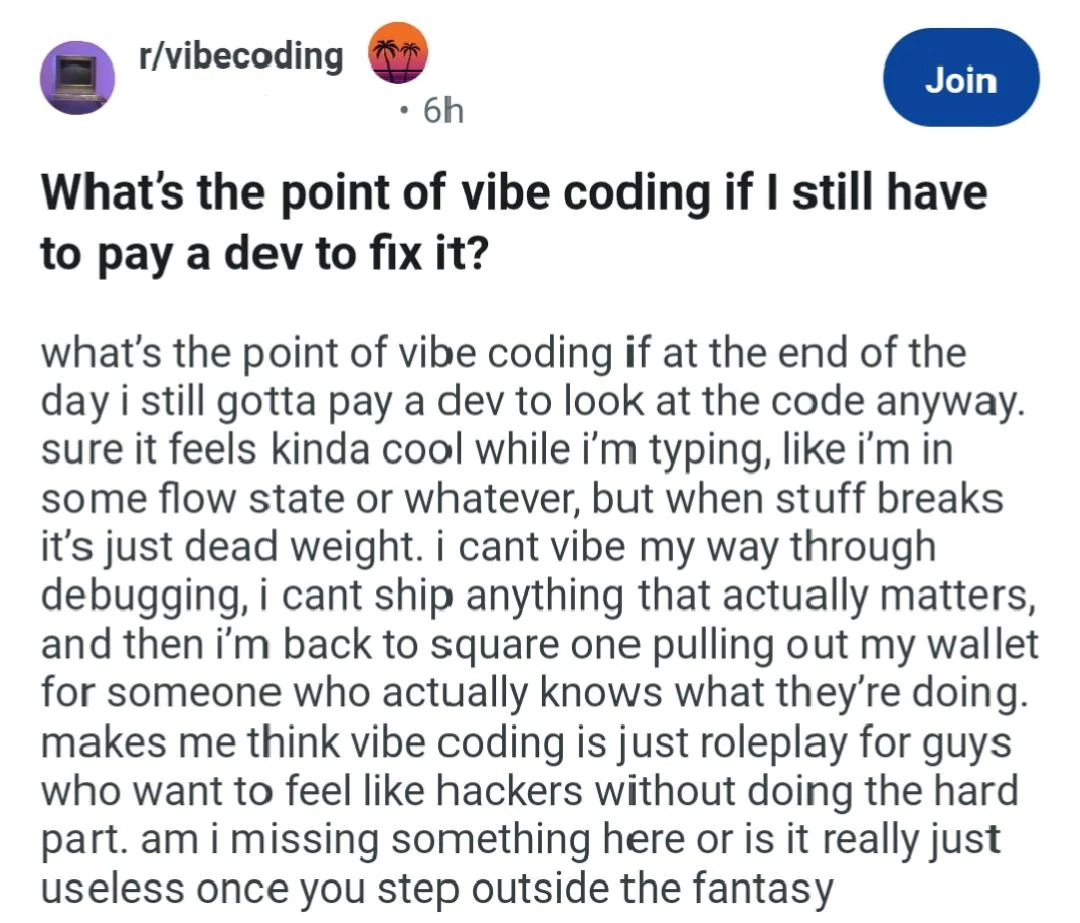

The post was probably made by a troll, but the comment section is wise to the issue.

I know we like to mock vibe coders because they can be naive, but many are aware that they are testing a concept, and usually a very simple one. Would you rather have them test it with vibe coding or sit you down every afternoon for a week trying to explain how it's not quite what they wanted?

They're so close to actually understanding how much they suck.

No way. A YouTube ad told me a different story the other day. Could that be a... lie? (shocked_face.jpg)

I refuse to believe this post isn't satire, because holy shit.

If I was 14 and had an interest in coding, the promise of 'vibe coding' would absolutely reel me in. Most of us here on Lemmy are more tech savvy and older, so it's easy to forget that we were asking Jeeves for .bat commands and borrowing* from Planet Source Code.

But yeah, it feels like satire. Haha.

Consulting opportunity: clean up your vibe-coding projects and get them to production.

That comes up in that sub occasionally and people offer it as a service. It's two different universes in there: people who are like a child given a Harry Potter toy wand who thinks they're magic, and then a stage magician with 20 years of experience doing close-up sleight-of-hand magic that takes work to learn, telling the kid "you're not doing what you think you're doing here," and then the kid starts to cry and their friends come over and try to berate the stage magician and shout that he's wrong because Hagrid said Harry's a wizard and if you have the plastic wand that goes "bbbring!" you're Harry Potter.

Isn't making someone fix a generated project far more work than building something from the ground up?

I had a project where I was supposed to clean up a generated 3D model. It had messed-up topology, some missing parts, and unintelligible shapes. Cleaning it up made me depressed.

A few of them were simple enough for me to rebuild the mesh from the ground up following the shape, as if I were retopologizing. But the more complex ones had unintelligible shapes, and I couldn't figure out what they were or how the topology was supposed to flow.

If I was given more time & pay, I could rebuild all of that my own way, so that I'd understand why every vertex in the meshes exists. But oh well, that contradicts their need for quick & cheap.
