By now, you’ve probably heard about Kosmos 482, a Soviet probe destined for Venus in 1972 that fell a bit short of the mark and stayed in Earth orbit for the last 53 years. Soon enough, though, the lander will make its fiery return; exactly where and when remain a mystery, but it should be sometime in the coming week. We talked about the return of Kosmos briefly on this week’s podcast and even joked a bit about how cool it would be if the parachute that would have been used for the descent to Venus had somehow deployed over its half-century in space. We might have been onto something, as astrophotographer Ralf Vanderburgh has taken some pictures of the spacecraft that seem to show a structure connected to and trailing behind it. The chute is probably in pretty bad shape after 50 years of UV torture, but how cool is that?

Parachute or not, chances are good that the 495-kilogram spacecraft, built to not only land on Venus but to survive the heat, pressure, and corrosive effects of the hellish planet’s atmosphere, will at least partially survive reentry into Earth’s more welcoming environs. That’s a good news, bad news thing: good news that we might be able to recover a priceless artifact of late-Cold War space technology, bad news to anyone on the surface near where this thing lands. If Kosmos 482 does manage to do some damage, it won’t be the first time. Shortly after launch, pieces of titanium rained down on New Zealand after the probe’s booster failed to send it on its way to Venus, damaging crops and starting some fires. The Soviets, ever secretive about their space exploits until they could claim complete success, disavowed the debris and denied responsibility for it. That made the farmers whose fields they fell in the rightful owners, which is also pretty cool. We doubt that the long-lost Kosmos lander will get the same treatment, but it would be nice if it did.

Also of note in the news this week is a brief clip of a Unitree humanoid robot going absolutely ham during a demonstration — demo-hell, amiright? Potential danger to the nearby engineers notwithstanding, the footage is pretty hilarious. The demo, with a robot hanging from a hoist in a crowded lab, starts out calmly enough, but goes downhill quickly as the robot starts flailing its arms around. We’d say the movements were uncontrolled, but there are points where the robot really seems to be chasing the engineer and taking deliberate swipes at the poor guy, who was probably just trying to get to the e-stop switch. We know that’s probably just the anthropomorphization talking, but it sure looks like the bot had a beef to settle. You be the judge.

Also from China comes a report of “reverse ATMs” that accept gold and turn it into cash on the spot (apologies for yet another social media link, but that’s where the stories are these days). The machine shown has a hopper into which customers load their unwanted jewelry, which is then reportedly melted down and assayed for purity, with the funds credited directly to the customer’s account. We’re not sure we fully believe this: thinking about the various failure modes of one of those fresh-brewed coffee machines, we shudder to think about the consequences of a machine with a 1,000°C furnace built into it. We also can’t help but wonder how the machine assays the scrap gold. X-ray fluorescence? Raman spectroscopy? Also, what happens to the unlucky customer who feeds in jewelry they thought was real gold, only to be told by the machine that it isn’t? Do they just get their stuff back as a molten blob? The mind boggles.

And finally, the European Space Agency has released a stunning new image of the Sun. Captured by their Solar Orbiter spacecraft in March from about 77 million kilometers away, the mosaic is composed of about 200 images from the Extreme Ultraviolet Imager. The Sun was looking particularly good that day, with filaments, active regions, prominences, and coronal loops in evidence, along with the ethereal beauty of the Sun’s atmosphere. The image is said to be the most detailed view of the Sun yet taken, and needs to be seen in full resolution to be appreciated. Click on the image below and zoom to your heart’s content.

From Blog – Hackaday via this RSS feed

Impedance matching is one of the perpetual confusions for new electronics students, and for good reason: the idea that increasing the impedance of a circuit can lead to more power transmission is frighteningly unintuitive at first glance. Even once you understand this, designing a circuit with impedance matching is a tricky task, and it’s here that [Ralph Gable]’s introduction to impedance matching is helpful.

The goal of impedance matching is to maximize the amount of power transmitted from a source to a load. In some simple situations, resistance is the only significant component of impedance, and it’s possible to match impedance just by matching resistance. In most situations, though, capacitance and inductance add a reactive component to the impedance, in which case maximum power transfer requires the load impedance to be the complex conjugate of the source impedance: the same resistance, with a reactance of equal magnitude but opposite sign.
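To make the conjugate rule concrete, here is a minimal Python sketch. It assumes an ideal AC source with a 1 V RMS open-circuit voltage and a hypothetical internal impedance of 50 + j30 Ω (none of these values come from the video), computes the average power dissipated in the load as |I|²·R_L, and brute-forces a grid of candidate loads.

```python
# Minimal sketch: average power delivered to a load from a source with
# internal impedance zs. The source values are hypothetical, chosen only
# to show that the best load is the complex conjugate of zs.

def load_power(vs_rms: float, zs: complex, zl: complex) -> float:
    """Average power (W) dissipated in zl; only its real part dissipates."""
    current = vs_rms / (zs + zl)        # RMS current phasor around the loop
    return abs(current) ** 2 * zl.real  # P = |I|^2 * R_load

zs = complex(50, 30)   # hypothetical source: 50 ohm + j30 ohm (inductive)
vs = 1.0               # 1 V RMS open-circuit source voltage

matched = load_power(vs, zs, zs.conjugate())     # load = 50 - j30 ohm
resistive = load_power(vs, zs, complex(50, 0))   # match the resistance only

# Brute-force search over integer R (1..100 ohm) and X (-60..60 ohm).
best = max(
    (complex(r, x) for r in range(1, 101) for x in range(-60, 61)),
    key=lambda zl: load_power(vs, zs, zl),
)

print(f"conjugate load: {matched * 1000:.3f} mW")  # -> 5.000 mW, i.e. vs^2 / (4 * Rs)
print(f"resistive only: {resistive * 1000:.3f} mW")
print(f"best on grid:   {best}")
```

Matching only the resistance leaves the source’s +j30 Ω uncancelled, so less current flows and less power arrives; the grid search lands on 50 − j30 Ω, the conjugate.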

The video goes over this theory briefly, but its real focus is on explaining how to read a Smith chart, an intimidating-looking tool that can be used to calculate impedances. The video covers the basic impedance-only Smith chart, as well as a full-color Smith chart that indicates both impedance and admittance.
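Under the hood, a Smith chart plots the reflection coefficient Γ = (z − 1)/(z + 1), where z is the load impedance normalized to a reference impedance. The sketch below assumes a 50 Ω reference (a common choice, not necessarily the one used in the video) and prints where a few loads land: a matched load sits at the center, a short sits at the left edge, and pure reactances fall on the outer rim.

```python
# Minimal sketch: where a load lands on a Smith chart.
# Assumes a 50 ohm reference impedance (a common choice, not taken from the video).

def gamma(z_load: complex, z0: float = 50.0) -> complex:
    """Reflection coefficient: the point plotted on the Smith chart."""
    z = z_load / z0                 # normalized impedance
    return (z - 1) / (z + 1)

def vswr(z_load: complex, z0: float = 50.0) -> float:
    """Voltage standing wave ratio; blows up for lossless loads on the rim (|Gamma| = 1)."""
    mag = abs(gamma(z_load, z0))
    return (1 + mag) / (1 - mag)

for zl in (50, 100, 25, 0, 50j):
    g = complex(gamma(zl))
    print(f"Z = {zl!s:>5} ohm -> Gamma = {g.real:+.3f}{g.imag:+.3f}j, |Gamma| = {abs(g):.3f}")
```

The full-color chart simply overlays the admittance grid (y = 1/z), which is the impedance grid rotated by 180°, so the same physical point can be read either way.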

This video is the introduction to a planned series on impedance matching, and beyond reading Smith charts, it doesn’t really get into many specifics. However, based on the clear explanations so far, it could be worth waiting for the rest of the series.

If you’re interested in more practical details, we’ve also covered another example before.

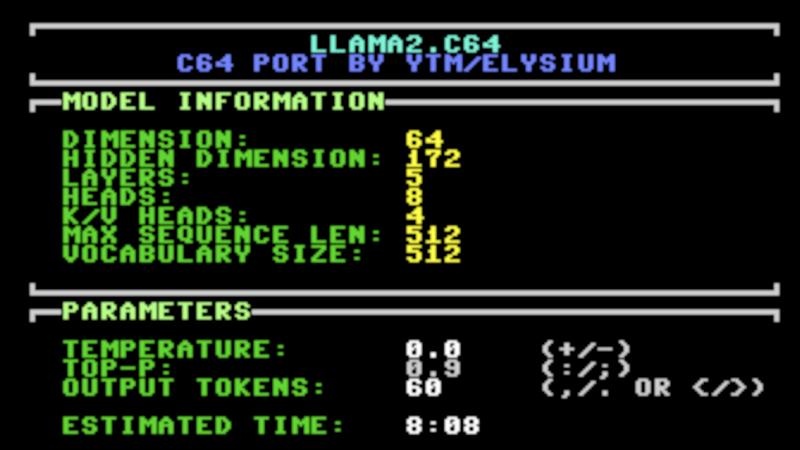

“If there’s one thing the Commodore 64 is missing, it’s a large language model,” is a phrase nobody has uttered on this Earth. Yet, you could run one, if you so desired, thanks to [ytm] and the Llama2.c64 project!

[ytm] did the hard work of porting the Llama 2 model to the most popular computer ever made. Of course, as you might expect, the ancient 8-bit machine doesn’t really have the stones to run an LLM on its own. You will need one rather significant upgrade, in the form of 2 MB of additional RAM via a C64 REU.

Now, don’t get ahead of things: this is no wide-ranging ChatGPT clone. It’s not going to do your homework, counsel you on your failed marriage, or solve the geopolitical crisis in your local region. Instead, you’re getting the 260K-parameter TinyStories model, which is a tad more limited. In [ytm]’s words: “Imagine prompting a 3-year-old child with the beginning of a story — they will continue it to the best of their vocabulary and abilities.”
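A quick back-of-the-envelope calculation shows why the REU is non-negotiable even for such a tiny model. This sketch assumes the weights are stored as 32-bit floats, as in the original llama2.c; the C64 port may well pack them differently.

```python
# Back-of-the-envelope memory check for a ~260K-parameter model on a C64.
# Assumption: weights stored as 32-bit floats, as in the original llama2.c;
# the actual C64 port may store them differently.

C64_RAM = 64 * 1024             # bytes of RAM in a stock C64
REU_RAM = 2 * 1024 * 1024       # the 2 MB REU expansion

def weight_bytes(n_params: int, bytes_per_param: int = 4) -> int:
    return n_params * bytes_per_param

w = weight_bytes(260_000)       # "260K" is itself a rounded figure
print(f"weights: {w:,} bytes")
print(f"{w / C64_RAM:.1f}x a stock C64's RAM, {w / REU_RAM:.0%} of the REU")
```

Under that assumption, the weights alone are roughly 16 times the C64’s entire address space, yet comfortably fit in the 2 MB expansion.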

It might not be supremely capable, but there’s something fun about seeing such a model talking back on an old-school C64 display. If you’ve been hacking away at your own C64 projects, don’t hesitate to let us know. We certainly can’t get enough of them!

Thanks to [ytm] for the tip!

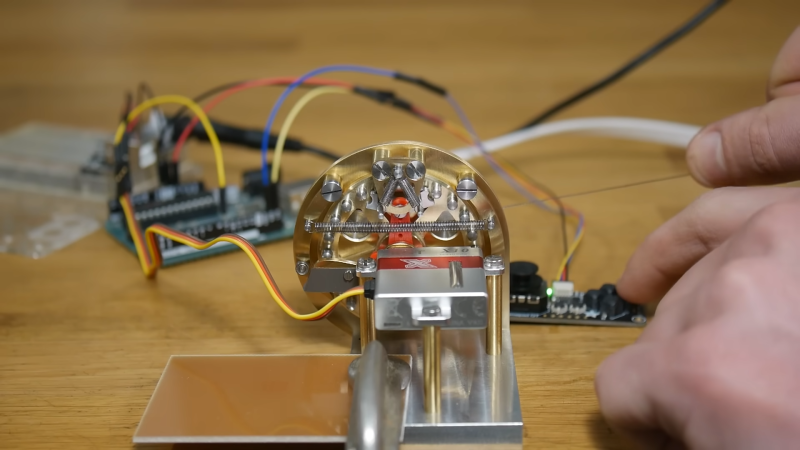

A common ratchet from your garage may work wonders for tightening hard-to-reach bolts on everyday projects around the house. However, the folks over at [Chronova Engineering] had a particularly unusual project that called for a special ratchet mechanism. And develop it they did: an absolutely beautiful machining job produced a ratcheting actuator for pulling tendons. Yes, this steampunk-esque mechanical ratchet is meant for yanking on the fleshy strings found in all of us.

The unique mechanism is needed because this biomechanics research requires bidirectional actuation. Tendons are pulled and released while the resulting movement of the fingers or toes is measured, and that movement is then compared with the distance the actuator pulled. Hopefully, this method of actuation measurement may help doctors and surgeons treat people with impairments, though in this particular case the “patient” is a chicken’s foot.

Blurred for viewing ease

Manufacturing the mechanism itself involved a multitude of watch lathe operations and pantographed patterns. A mixture of custom and commercial screws is used along with a peg gear, cams, and a high-performance servo to complete the complex ratchet. With simple control from an Arduino, the system handles its use case very effectively.

All in all, the actuator is an incredible piece of machining with one of the least expected use cases. The original, publicly listed video chose not to show the chicken foot itself for fear of the YouTube overlords.

If you wish to see the actuator in proper action, check out the uncensored and unlisted video here.

Thanks to [DjBiohazard] on our Discord server tips-line!

If you’ve got a popular 3D printer that has been on the market a good long while, you can probably get any old nozzles you want right off the shelf. If you happen to have an AnyCubic printer, though, you might find it a bit tougher. [Startup Chuck] wanted some specific sized nozzles for his rig, so he set about whipping up a solution himself.

[Chuck]’s first experiments were simple enough. He wanted larger nozzles than those on sale, so he did the obvious. He took existing 0.4 mm nozzles and drilled them out with carbide PCB drills to make 0.6 mm and 0.8 mm nozzles. It’s pretty straightforward stuff, and it was a useful hack to really make the best use of the large print area on the AnyCubic Kobra 3.
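A little geometry shows why drilling out nozzles pays off on a big printer: the cross-sectional area of the bore grows with the square of its diameter. This is a simplified sketch; real-world throughput also depends on how fast the hotend can melt filament, which this does not model.

```python
import math

# Cross-sectional area of a nozzle bore scales with the square of its diameter.
def bore_area_mm2(diameter_mm: float) -> float:
    return math.pi * diameter_mm ** 2 / 4

reference = bore_area_mm2(0.4)          # stock 0.4 mm nozzle
for d in (0.2, 0.4, 0.6, 0.8):
    ratio = bore_area_mm2(d) / reference
    print(f"{d:.1f} mm: {bore_area_mm2(d):.3f} mm^2, {ratio:.2f}x the 0.4 mm bore")
```

Going from 0.4 mm to 0.8 mm quadruples the opening, while the 0.2 mm conversion cuts it to a quarter, which is exactly the trade between speed and fine detail.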

But what about going the other way? [Chuck] figured out a solution for that, too. He started by punching out the 0.4 mm insert in an existing nozzle. He then figured out how to drive 0.2 mm nozzles from another printer into the nozzle body so he had a viable 0.2 mm nozzle that suited his AnyCubic machine.

The result? [Chuck] can now print tiny little things on his big AnyCubic printer without having to wait for the OEM to come out with the right nozzles. If you want to learn more about nozzles, we can help you there, too.

While the COVID-19 pandemic wasn’t an experience anyone wants to repeat, infectious disease experts like [Dr. Pardis Sabeti] are looking at what we can do to prepare for the next one.

While the next pandemic could potentially be anything, there are a few high-profile candidates, and bird flu (H5N1) is at the top of the list. With birds all over the world carrying the infection, and with its prevalence in poultry and now dairy operations, the opportunity for cross-species infection is higher than for most other diseases out there, which is especially worrying for a virus with a fatality rate of up to 60%. Only one of the 70 people in the US who have recently contracted H5N1 has died, and exposures have mostly been among dairy and poultry workers. Scientists have yet to determine why cases in the US have been less severe.

To stop H5N1 before it reaches COVID-like levels, and to keep its reach as limited as that of earlier bird and swine flu variants, a good first line of defense would be contact tracing of humans and cattle, along with offering existing H5N1 vaccines to vulnerable populations such as poultry and dairy workers. So far, it doesn’t seem to be transmissible from human to human, but every new case increases the chance that it picks up a mutation that makes it so. Keeping current cases from increasing, improving our science outreach, and continuing to fund scientists working on this disease are our best bets to keep it from taking off like a meme stock.

Whatever the next pandemic turns out to be, smartwatches could help flatten the curve and surely hackers will rise to the occasion to fill in the gaps where traditional infrastructure fails again.

Dan Maloney and I were talking on the podcast about his memories of the old electronics magazines, and how they had some gonzo projects in them. One, a DIY picture phone from the 1980s, was a monster build of a hundred ICs that also required you to own a TV camera. At that time, the idea of being able to see someone while talking to them on the phone was pure science fiction, and here was a version of that which you could build yourself.

Still, we have to wonder how many of these were ever built. The project itself was difficult and expensive, but you actually have to multiply that by two if you want to talk with someone else. And then you have to turn your respective living rooms into TV studios. It wasn’t the most practical of projects.

But amazing projects did something in the old magazines that we take a little bit for granted today: they showed what was possible. And if you want to create something new, you’re not necessarily going to know how to do it, but just the idea that it’s possible at all is often enough to give a motivated hacker the drive to make it real. As skateboard hero Rodney Mullen put it, “the biggest obstacle to creativity is breaking through the barrier of disbelief”.

In the skating world, it’s seeing someone else do a trick in a video that lets you know that it’s possible, and then you can make it your own. In our world, in prehistoric times, it was these electronics magazines that showed you what was possible. In the present, it’s all over the Internet, and all over Hackaday. So when you see someone’s amazing project, even if you aren’t necessarily into it, or maybe don’t even fully understand it, your horizons of what’s possible are nonetheless expanded, and that helps us all be more creative.

Keep on pushing!

This article is part of the Hackaday.com newsletter, delivered every seven days for each of the last 200+ weeks. It also includes our favorite articles from the last seven days that you can see on the web version of the newsletter. Want this type of article to hit your inbox every Friday morning? You should sign up!

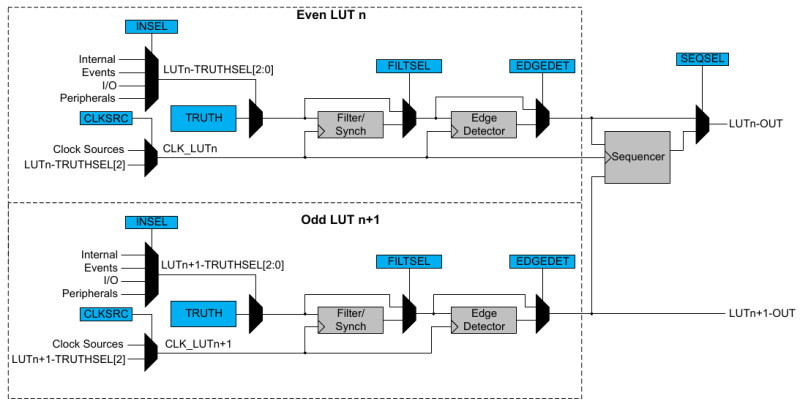

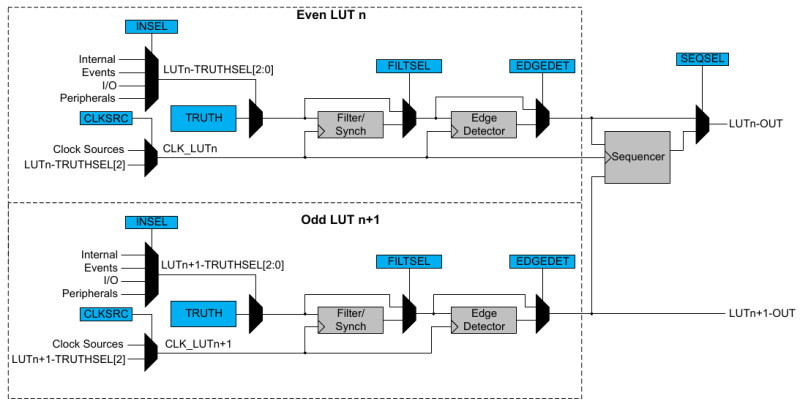

In the Microchip tinyAVR 0-series, 1-series, and 2-series we see Configurable Custom Logic (CCL) among the Core Independent Peripherals (CIP) available on the chip. In this YouTube video [Grug Huhler] shows us how to make your own digital logic in hardware using the ATtiny CCL peripheral.

If you have spare pins on your tinyAVR micro you can use them with the CCL for “glue logic” and save on your bill of materials (BOM) cost. The CCL can do simple to moderately complex logic, and it does it without the need for support from the processor core, which is why it’s called a core independent peripheral. A good place to learn about the CCL capabilities in these tinyAVR series is Microchip Technical Brief TB3218: Getting Started with Configurable Custom Logic (CCL) or if you need more information see a datasheet, such as the ATtiny3226 datasheet mentioned in the video.

A tinyAVR micro will have one or two CCL peripherals depending on the series. The heart of the CCL hardware is a pair of Lookup Tables (LUTs). Each LUT can map any three binary inputs to one binary output, so its behavior is fully described by an 8-entry truth table that fits in a single byte. That byte can implement simple 2-input or 3-input logic, such as NOT, AND, OR, XOR, etc. Each LUT output can optionally be piped through a Filter/Sync function, an Edge Detector, and a Sequencer (always from the lower-numbered LUT in the pair). It is also possible to mask out LUT inputs.
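Working out that truth-table byte by hand is error-prone, so here is a small Python helper that builds it from any 3-input Boolean function. It assumes the table is indexed with IN2 as the most significant bit (index = IN2·4 + IN1·2 + IN0); check that ordering against the TRUTH register description in your chip’s datasheet before relying on it.

```python
# Sketch: build the 8-bit truth table byte for a 3-input CCL LUT.
# Assumption: bit index = IN2*4 + IN1*2 + IN0 (IN2 as MSB); confirm the
# ordering against the TRUTH register description in your datasheet.
from typing import Callable

def truth_byte(fn: Callable[[int, int, int], int]) -> int:
    value = 0
    for idx in range(8):
        in0, in1, in2 = idx & 1, (idx >> 1) & 1, (idx >> 2) & 1
        if fn(in0, in1, in2):
            value |= 1 << idx       # output is 1 for this input combination
    return value

print(hex(truth_byte(lambda a, b, c: a & b & c)))   # 3-input AND -> 0x80
print(hex(truth_byte(lambda a, b, c: a | b | c)))   # 3-input OR  -> 0xfe
print(hex(truth_byte(lambda a, b, c: a ^ b ^ c)))   # 3-input XOR -> 0x96
```

In megaTinyCore’s Logic library, a byte like this is what gets assigned to a LUT’s truth-table setting; see the library’s own documentation for the exact field names.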

In the source code that accompanies the video [Grug] includes a demonstration of a three input AND gate, an SR Latch using the sequencer, an SR Latch using feedback, and a filter/sync and edge detection circuit. The Arduino library [Grug] uses is Logic.h from megaTinyCore.
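The feedback-based SR latch works because one LUT input is fed from the LUT’s own output, so the truth table can “remember” the previous state. The sketch below models that in Python under a hypothetical input mapping (IN0 = S, IN1 = R, IN2 = fed-back Q, with the table indexed as IN2·4 + IN1·2 + IN0); [Grug]’s actual pin and input assignments may differ.

```python
# Sketch: an SR latch built from one 3-input LUT whose output is fed back
# into one of its own inputs. Hypothetical input mapping, for illustration:
# IN0 = S, IN1 = R, IN2 = fed-back Q. Set wins if S and R are both high.

def next_q(s: int, r: int, q: int) -> int:
    return s | (q & (1 - r))     # set, or hold the old state unless reset

# Truth byte for this mapping, assuming bit index = IN2*4 + IN1*2 + IN0.
TRUTH = sum(1 << (s + 2 * r + 4 * q)
            for q in (0, 1) for r in (0, 1) for s in (0, 1)
            if next_q(s, r, q))

def simulate(inputs, q=0):
    """Step the latch through (S, R) pairs, looking each step up in the truth byte."""
    trace = []
    for s, r in inputs:
        q = (TRUTH >> (s + 2 * r + 4 * q)) & 1   # what the LUT hardware evaluates
        trace.append(q)
    return trace

print(hex(TRUTH))                                              # -> 0xba
print(simulate([(1, 0), (0, 0), (0, 1), (0, 0), (1, 1)]))      # -> [1, 1, 0, 0, 1]
```

The trace shows set, hold, reset, hold, and the set-dominant case when both inputs are high.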

We have covered CIP and CCL technology here on Hackaday before, such as back when we showed you how to use an AVR microcontroller to make a switching regulator.

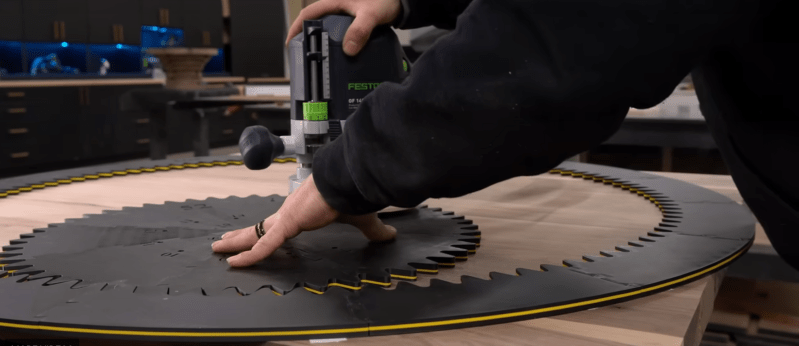

Who else remembers Spirograph? When making elaborate spiral doodles, did you ever wish for a much, much bigger version? [Fortress Fine Woodworks] had that thought, and “slapped a router onto it” to create a gorgeous walnut table.

This printed sanding block was a nice touch.

The video covers not only 3D printing the giant Spirograph, which is the part most of us can easily relate to, but all the woodworking magic that goes into creating a large hardwood table. Assembling the table out of choice lumber from the “rustic” pile is an obvious money-saving move, but there were a lot of other tips and tricks in this video that we were happy to learn from a pro. The 3D printed sanding block he designed was a particularly nice detail; it’s hard to imagine getting all those grooves smoothed out without it.

Certainly this pattern could have been carved with a CNC machine, but there is a certain old school charm in seeing it done (more or less) by hand with the Spirograph jig. [Fortress Fine Woodworks] would have missed out on quite the workout if he’d been using a CNC machine, too, which may or may not be a plus to this method depending on your perspective. Regardless, the finished product is a work of art and worth checking out in the video below.
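The curve the router traces is a hypotrochoid: a point on a gear rolling inside a fixed ring. Below is a minimal Python sketch of the standard parametric equations, assuming gear radii proportional to tooth counts (true for gears with the same tooth pitch) and using hypothetical tooth counts, since the jig’s dimensions aren’t given here. The greatest common divisor of the two tooth counts decides how many lobes the pattern has and how many trips around the ring it takes to close.

```python
import math

def hypotrochoid(ring: float, gear: float, pen: float, t: float) -> tuple[float, float]:
    """Pen position for a gear rolling inside a ring.

    ring/gear are radii (proportional to tooth counts), pen is the pen hole's
    distance from the gear's centre, t is the angle of the gear's centre."""
    k = (ring - gear) / gear
    x = (ring - gear) * math.cos(t) + pen * math.cos(k * t)
    y = (ring - gear) * math.sin(t) - pen * math.sin(k * t)
    return x, y

def pattern_shape(ring_teeth: int, gear_teeth: int) -> tuple[int, int]:
    """(number of lobes, trips around the ring before the pattern closes)."""
    g = math.gcd(ring_teeth, gear_teeth)
    return ring_teeth // g, gear_teeth // g

lobes, trips = pattern_shape(96, 36)   # hypothetical tooth counts
print(lobes, trips)                    # -> 8 3
```

Sampling `hypotrochoid()` from t = 0 to 2π × `trips` gives the closed path, which is also a handy way to preview a jig setting before committing walnut to it.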

Oddly enough, this isn’t the first time we’ve seen someone use a Spirograph to mill things. It’s not the first giant-scale Spirograph we’ve highlighted, either. To our knowledge, it’s the first time someone has combined them with an artful walnut table.

[sprite_tm] had a problem. He needed a clock for the living room, but didn’t want to just buy something off the shelf. In his own words, “It’s an opportunity for a cool project that I’d rather not let go to waste.” Thus started a project to build a fun e-paper digit clock!

There were several goals for the build from the outset. It had to be battery driven, large enough to be easily readable, and readily visible both during the day and in low-light conditions. It also needed to be low maintenance, and “interesting,” as [sprite_tm] put it. This drove the design towards an e-paper solution. However, large e-paper displays can be a bit pricey. That spawned a creative idea: why not grab four smaller displays and make a clock with separate individual digits instead?

The build description covers the full design, from the ESP32 at the heart of things to odd brownout issues and the old-school Nokia batteries providing the juice. Indeed, [sprite_tm] even went the creative route, making each individual digit of the clock operate largely independently. Each has its own battery, microcontroller, and display. To save battery life, only the hours digit has to spend energy syncing with an NTP time server, and it uses the short-range ESP-NOW protocol to send time updates to the other digits.
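One side effect of giving every digit its own battery is that the digits don’t work equally hard. This sketch counts how often each digit’s value changes in a day, assuming a 24-hour HH:MM display and that a module only redraws its e-paper panel when its own digit changes; both are assumptions, as the write-up’s actual refresh strategy may differ.

```python
def digits(minute_of_day: int) -> tuple[int, int, int, int]:
    """Displayed digits (hour tens, hour ones, minute tens, minute ones), 24-hour time."""
    hours, minutes = divmod(minute_of_day, 60)
    return hours // 10, hours % 10, minutes // 10, minutes % 10

def refreshes_per_day() -> list[int]:
    """Count how often each digit's value changes over one day (including midnight)."""
    counts = [0, 0, 0, 0]
    for t in range(24 * 60):
        before, after = digits((t - 1) % (24 * 60)), digits(t)
        for i in range(4):
            if before[i] != after[i]:
                counts[i] += 1
    return counts

print(refreshes_per_day())  # -> [3, 24, 144, 1440]
```

Under these assumptions, the minutes-ones digit redraws nearly 500 times as often as the hours-tens digit, so its battery would be the first to need attention.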

It’s an unconventional clock, to be sure; you could even consider it four clocks in one. Ultimately, though, that’s what we like in a timepiece here at Hackaday. Meanwhile, if you’ve come up with a fun and innovative way to tell time, be sure to let us know on the tipsline!

[Thanks to Maarten Tromp for the tip!]

It’s the podcast so nice we recorded it twice! Despite some technical difficulties (note to self: press the record button significantly before recording the outro), Elliot and Dan were able to soldier through our rundown of the week’s top hacks.

We kicked things off with a roundup of virtual keyboards for the alternate reality crowd, which raised the question of why you’d even need such a thing. We also looked at a couple of cool demoscene-adjacent projects, such as the ultimate in oscilloscope music and a hybrid knob/jack for eurorack synth modules.

We dialed the Wayback Machine into antiquity to take a look at Clickspring’s take on the origins of precision machining; spoiler alert: you can make gas-tight concentric brass tubing using a bow-driven lathe. There’s a squishy pneumatic robot gripper, an MQTT-enabled random number generator, a feline-friendly digital stethoscope, and a typewriter that’ll make your Dymo label maker jealous.

We’ll also mourn the demise of electronics magazines and ponder how your favorite website fills that gap, and learn why it’s really hard to keep open-source software lean and clean. Short answer: because it’s made by people.

Where to Follow Hackaday Podcast

iTunes, Spotify, Stitcher, RSS, YouTube, or check out our Libsyn landing page.

Download the zero-calorie MP3.

Episode 319 Show Notes:

News:

- There’s A Venusian Spacecraft Coming Our Way
- You Wouldn’t Steal A Font…
- Sigrok Website Down After Hosting Data Loss

What’s that Sound?

Fill out this form for your chance to win!

Interesting Hacks of the Week:

- Weird And Wonderful VR/MR Text Entry Methods, All In One Place
- Clickspring’s Experimental Archaeology: Concentric Thin-Walled Tubing
- Amazing Oscilloscope Demo Scores The Win At Revision 2025
  - osci-render
- Tripping On Oscilloshrooms With An Analog Scope
- Crossing Commodore Signal Cables On Purpose
- Look! It’s A Knob! It’s A Jack! It’s Euroknob!
- Robot Gets A DIY Pneumatic Gripper Upgrade
- Vastly Improved Servo Control, Now Without Motor Surgery
- Remembering Heathkit

Quick Hacks:

- Elliot’s Picks:
  - A New And Weird Kind Of Typewriter
  - Terminal DAW Does It In Style
  - Comparing ‘AI’ For Basic Plant Care With Human Brown Thumbs
- Dan’s Picks:
  - Quantum Random Number Generator Squirts Out Numbers Via MQTT
  - Onkyo Receiver Saved With An ESP32
  - Quick And Easy Digital Stethoscope Keeps Tabs On Cat

Can’t-Miss Articles:

- Libogc Allegations Rock Wii Homebrew Community
- The DIY 1982 Picture Phone

Let me throw in a curveball—watching your 3D print fail in real-time is so much more satisfying when you have a crisp, up-close view of the nozzle drama. That’s exactly what [Mellow Labs] delivers in his latest DIY video: transforming a generic HD endoscope camera into a purpose-built nozzle cam for the Prusa Mini. The hack blends absurd simplicity with delightful nerdy precision, and comes with a full walkthrough, a printable mount, and just enough bad advice to make it interesting. It’s a must-see for any maker who enjoys solder fumes with their spaghetti monsters.

What makes this build uniquely brilliant is the repurposing of a common USB endoscope camera—a tool normally reserved for inspecting pipes or internal combustion engines. Instead, it’s now spying on molten plastic. The camera gets ripped from its aluminium tomb, upgraded with custom-salvaged LEDs (harvested straight from a dismembered bulb), then wrapped in makeshift heat-shrink and mounted on a custom PETG bracket. [Mellow Labs] even micro-solders in a custom connector just so the camera can be detached post-print. The mount is parametric, thanks to a community contribution.

This is exactly the sort of hacking to love—clever, scrappy, informative, and full of personality. For the tinkerers among us who like their camera mounts hot and their resistor math hotter, this build is a weekend well spent.
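For anyone repeating the LED upgrade, the “resistor math” is Ohm’s law applied to whatever voltage is left after the LED’s forward drop. The values in this sketch are hypothetical (a 5 V USB rail, a white LED at roughly 3.0 V forward, 20 mA of current); the build’s actual figures aren’t given, and LEDs salvaged from a bulb may have quite different forward voltages, so measure yours.

```python
# Ohm's-law sizing of a series resistor for one LED.
# Hypothetical values: 5 V USB rail, ~3.0 V white-LED forward voltage, 20 mA.

def led_resistor(v_supply: float, v_forward: float, i_led: float) -> tuple[float, float]:
    """Return (resistance in ohms, power dissipated in the resistor in watts)."""
    v_drop = v_supply - v_forward      # voltage the resistor must absorb
    if v_drop <= 0:
        raise ValueError("supply voltage must exceed the LED forward voltage")
    return v_drop / i_led, v_drop * i_led

r, p = led_resistor(5.0, 3.0, 0.020)
print(f"R = {r:.0f} ohm, P = {p * 1000:.0f} mW")  # -> R = 100 ohm, P = 40 mW
```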

This week, Oligo has announced the AirBorne series of vulnerabilities in Apple’s AirPlay protocol and SDK. This is a particularly serious set of issues, and notably affects MacOS desktops and laptops, iOS and iPadOS mobile devices, and many IoT devices that use the Apple SDK to provide AirPlay support. It’s a group of 16 CVEs based on 23 total reported issues, with ramifications ranging from authentication bypass, to local file reads, all the way to Remote Code Execution (RCE).

AirPlay is a Wi-Fi-based peer-to-peer protocol used to share or stream media between devices. It uses port 7000 and a custom protocol that has elements of both HTTP and RTSP. This scheme makes heavy use of property lists (“plists”) for transferring serialized information. And as we well know, serialization and data parsing interfaces are great places to look for vulnerabilities. Oligo provides an example where a plist is expected to contain a dictionary object, but was actually constructed with a simple string. Deserializing that plist results in a malformed dictionary, and attempting to access it crashes the process.
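The general defense against this kind of type confusion is to check what a deserializer actually returned before treating it as a dictionary. Here is a minimal sketch using Python’s standard `plistlib`; the key name is hypothetical, and this is not AirPlay’s real message schema or Apple’s parser.

```python
import plistlib

# Defensive plist parsing: check the *type* of what was decoded before using it.
# The key name "name" is hypothetical; this is not AirPlay's actual schema.

def parse_dict_body(body: bytes) -> dict:
    obj = plistlib.loads(body)              # auto-detects XML or binary plists
    if not isinstance(obj, dict):
        raise ValueError(f"expected a dictionary plist, got {type(obj).__name__}")
    return obj

good = plistlib.dumps({"name": "living-room"}, fmt=plistlib.FMT_BINARY)
bad = plistlib.dumps("just a string", fmt=plistlib.FMT_BINARY)  # valid plist, wrong type

print(parse_dict_body(good)["name"])       # -> living-room
try:
    parse_dict_body(bad)
except ValueError as err:
    print("rejected:", err)                # -> rejected: expected a dictionary plist, got str
```

Both payloads are perfectly well-formed plists, which is the point: the parser succeeds either way, so the type check has to happen in the code that consumes the result.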

Another demo uses AirPlay to achieve an arbitrary memory write against a MacOS device. Because that is such a powerful primitive, it can be used for zero-click exploitation, though the actual demo uses the Music app and launches with a user click. Prior to the patch, this affected any MacOS device with AirPlay enabled and set to either “Anyone on the same network” or “Everyone”. Because of the zero-click nature, this could be made into a wormable exploit.

Apple has released updates for their products for all of the CVEs, but what’s really going to take a long time to clean up are the IoT devices that were built with the vulnerable SDK. It’s likely that many of those devices will never receive updates.

EvilNotify

It’s apparently the week for Apple exploits, because here’s another one, this time from [Guilherme Rambo]. Apple has built multiple systems for Inter-Process Communication (IPC), but the simplest is the Darwin Notification API. It’s part of the shared code that runs on all of Apple’s OSs, and this IPC has some quirks. Namely, there’s no verification system, and no restrictions on which processes can send or receive messages.

That led our researcher to ask what you may be asking: does this lack of authentication allow for any security violations? Of the many notifications this technique can spoof, one is particularly problematic: the device’s “restore in progress” notification. It locks the device, leaving only a reboot option. Annoying, but not a permanent problem.

The really nasty version of this trick is to put the code that triggers a “restore in progress” message inside an app’s widget extension. iOS loads those automatically at boot, making for an infuriating bootloop. [Guilherme] reported the problem to Apple and made a very nice $17,500 in the process. The fix from Apple is a welcome surprise, in that it added an authorization mechanism for sensitive notification endpoints. It’s very likely that there are other ways this technique could have been abused, so the more comprehensive fix was the way to go.
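The shape of both the bug and the fix can be illustrated with a toy broadcast bus in Python. This is purely a conceptual model, not Apple’s API or implementation; the notification name and sender names are hypothetical.

```python
from collections.abc import Callable

class ToyNotifyCenter:
    """Toy model of a broadcast notification bus (not Apple's API).

    By default anyone may post any name, mirroring the missing sender check.
    restrict() adds a per-name allowlist, the general shape of an
    authorization check for sensitive notifications."""

    def __init__(self) -> None:
        self._observers: dict[str, list[Callable[[str], None]]] = {}
        self._allowed: dict[str, set[str]] = {}

    def observe(self, name: str, callback: Callable[[str], None]) -> None:
        self._observers.setdefault(name, []).append(callback)

    def restrict(self, name: str, *senders: str) -> None:
        self._allowed[name] = set(senders)

    def post(self, name: str, sender: str) -> None:
        allowed = self._allowed.get(name)
        if allowed is not None and sender not in allowed:
            raise PermissionError(f"{sender!r} may not post {name!r}")
        for callback in self._observers.get(name, []):
            callback(sender)

RESTORE = "example.restore-in-progress"   # hypothetical notification name
center = ToyNotifyCenter()
seen: list[str] = []
center.observe(RESTORE, seen.append)

center.post(RESTORE, "random-widget")     # accepted: nobody checks the sender
center.restrict(RESTORE, "system-restore-daemon")
try:
    center.post(RESTORE, "random-widget")
except PermissionError as err:
    print("blocked:", err)
center.post(RESTORE, "system-restore-daemon")
print(seen)   # -> ['random-widget', 'system-restore-daemon']
```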

Jenkins

Continuous Integration is one of the most powerful tools a software project can use to stay on top of code quality. Unfortunately, as CI toolchains get more complicated, they become more likely to be vulnerable, as [John Stawinski] from Praetorian has discovered. This attack chain targets the Node.js repository on GitHub via an outside pull request, and ends with code execution on the Jenkins host machines.

The trick to pulling this off is to spoof the timestamp on a pull request. The Node.js CI uses PR labels to control what CI does with an incoming request. Tooling automatically adds the “needs-ci” label depending on which files are modified. A maintainer reviews the PR and approves the CI run. A Jenkins runner then picks up the job, checks that the Git timestamp predates the maintainer’s approval, and runs the CI job. Git timestamps are trivial to spoof, so after approval it’s possible to push an additional commit to the target PR with a commit timestamp in the past. The runner doesn’t catch the deception, and runs the now-malicious code.

[John] reported the findings, and Node.js maintainers jumped into action right away. The primary fix was to do SHA sum comparisons to validate Jenkins runs, rather than just relying on timestamp. Out of an abundance of caution, the Jenkins runners were re-imaged, and then [John] was invited to try to recreate the exploit. The Node.js blog post has some additional thoughts on this exploit, like pointing out that it’s a Time-of-Check-Time-of-Use (TOCTOU) exploit. We don’t normally think of TOCTOU bugs where a human is the “check” part of the equation.
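The difference between the original gate and the fix fits in a few lines of Python. This is a simplified, hypothetical model of the logic described in the write-up, not the Node.js project’s actual Jenkins scripts; the SHAs and times are made up.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Commit:
    sha: str
    committed_at: datetime   # attacker-controlled: Git records whatever date it's given

def timestamp_gate(head: Commit, approved_at: datetime) -> bool:
    """Vulnerable check: run if the head commit claims to predate the approval."""
    return head.committed_at <= approved_at

def sha_gate(head: Commit, approved_sha: str) -> bool:
    """Fixed check: run only the exact commit the maintainer reviewed."""
    return head.sha == approved_sha

# Hypothetical timeline (SHAs shortened for readability).
reviewed = Commit("a1b2c3d", datetime(2025, 1, 10, 12, 0))
approved_at = datetime(2025, 1, 10, 15, 0)
# Pushed after approval, but backdated (e.g. via GIT_COMMITTER_DATE).
backdated = Commit("e4f5a6b", datetime(2025, 1, 10, 9, 0))

print(timestamp_gate(backdated, approved_at))  # -> True: the malicious commit runs
print(sha_gate(backdated, reviewed.sha))       # -> False: the swap is caught
```

The timestamp is data supplied by the attacker, while the SHA is derived from the content itself, which is why pinning the approval to the commit hash closes the TOCTOU window.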

2024 in 0-days

Google has published an overview of the 75 zero-day vulnerabilities that were exploited in 2024. That’s down from the 98 vulnerabilities exploited in 2023, but the Threat Intelligence Group behind this report is of the opinion that zero-day exploitation is still on an upward trend. Some platforms, like mobile and web browsers, have seen drastic improvements in zero-day prevention, while attacks on enterprise targets are on the rise. The real stand-out is the targeting of security appliances and other network devices, which account for more than 60% of the enterprise-focused vulnerabilities tracked.

When it comes to the attackers behind exploitation, it’s a mix between state-sponsored attacks, legal commercial surveillance, and financially motivated attacks. It will be interesting to see how 2025 stacks up in comparison. But one thing is for certain: Zero-days aren’t going away any time soon.

Perplexing Passwords for RDP

The world of computer security just got an interesting surprise, as Microsoft declared it not-a-bug that Windows machines will continue to accept revoked credentials for Remote Desktop Protocol (RDP) logins. [Daniel Wade] discovered the issue and reported it to Microsoft, and then after being told it wasn’t a security vulnerability, shared his report with Ars Technica.

So what exactly is happening here? The issue concerns Windows machines that log in with an Azure or Microsoft account. That account is used to enable RDP, and the machine caches the username and password so logins work even when the computer is “offline”. The problem lies in how those cached passwords are evicted from the cache: for RDP logins, it seems they are simply never removed.

There is a stark disconnect between what [Wade] has observed and what Microsoft has to say about it. It’s long been known that Windows machines cache passwords, but that cache gets updated the next time the machine logs in to the domain controller, and this is what Microsoft’s responses seem to be referencing. The actual report is that, for RDP, the cached passwords never expire, even after the password is changed in the cloud and the user logs on to the machine repeatedly.

Bits and Bytes

Samsung makes a digital signage line, powered by the MagicINFO server application. That server has an unauthenticated endpoint, accepting file uploads with insufficient filename sanitization. That combination leads to arbitrary pre-auth code execution. While that’s not great, what makes this a real problem is that the report was first sent to Samsung in January, no response was ever received, and it seems that no fixes have officially been published.
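Samsung’s code isn’t public here, so the following is only a generic Python sketch of the kind of check an upload endpoint needs, assuming the flaw is the familiar path-traversal pattern where a client-supplied filename such as `../../shell.jsp` lands outside the upload directory. The helper name `safe_upload_path` and the extension allow-list are hypothetical, not part of MagicINFO.

```python
from pathlib import Path

# Hypothetical allow-list; a real signage server would choose its own.
ALLOWED_SUFFIXES = {".png", ".jpg", ".jpeg", ".mp4"}


def safe_upload_path(upload_dir: str, filename: str) -> Path:
    """Map a client-supplied filename to a path inside upload_dir.

    Raises ValueError for anything that could escape the directory or
    that isn't on the extension allow-list.
    """
    base = Path(upload_dir).resolve()

    # Reject separators and NUL bytes outright instead of "cleaning" them;
    # both slash styles are checked because the server OS may accept either.
    if not filename or any(ch in filename for ch in ("/", "\\", "\x00")):
        raise ValueError(f"illegal filename: {filename!r}")
    if filename in (".", ".."):
        raise ValueError(f"illegal filename: {filename!r}")

    # Only accept file types the application actually serves, so an
    # attacker can't drop a server-side script such as a .jsp page.
    if Path(filename).suffix.lower() not in ALLOWED_SUFFIXES:
        raise ValueError(f"disallowed file type: {filename!r}")

    # Final check after resolving symlinks: the file must sit directly in base.
    candidate = (base / filename).resolve()
    if candidate.parent != base:
        raise ValueError(f"path escapes upload directory: {filename!r}")
    return candidate
```

Rejecting suspicious names rather than silently rewriting them is a deliberate choice in this sketch: the behavior stays easy to reason about, and every refusal can be logged.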

A series of Viasat modems have a buffer overflow in their SNORE web interface. This leads to unauthenticated, arbitrary code execution on the system, from either the LAN or OTA interface, but thankfully not from the public Internet itself. This one is interesting in that it was found via static code analysis.

IPv6 is the answer to all of our IPv4-induced woes, right? It has Stateless Address Autoconfiguration (SLAAC) to handle IP addressing without DHCP, and Router Advertisement (RA) to discover how to route packets. And now, taking advantage of that great functionality is Spellbinder, a malicious tool that pulls off SLAAC attacks and does DNS poisoning. It’s not entirely new, as we’ve seen man-in-the-middle attacks on IPv4 networks for years. IPv6 just makes it so much easier.
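To show why a rogue router matters, here is a Python sketch of the classic SLAAC recipe from RFC 4291: take the /64 prefix that a Router Advertisement announces and append a modified EUI-64 interface identifier derived from the MAC address. This is not Spellbinder’s code, and many current operating systems use randomized or stable-privacy identifiers instead of the MAC; the point is only that the host builds its address from whatever prefix the router hands it.

```python
import ipaddress


def eui64_interface_id(mac: str) -> int:
    """Build a 64-bit modified EUI-64 interface identifier from a 48-bit MAC."""
    octets = bytes(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError(f"expected 6 octets, got {mac!r}")
    # Flip the universal/local bit (0x02) of the first octet...
    first = octets[0] ^ 0x02
    # ...and splice 0xFFFE into the middle of the MAC.
    eui = bytes([first]) + octets[1:3] + b"\xff\xfe" + octets[3:]
    return int.from_bytes(eui, "big")


def slaac_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine an advertised /64 prefix with the MAC-derived identifier."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("SLAAC expects a /64 prefix")
    return ipaddress.IPv6Address(int(net.network_address) | eui64_interface_id(mac))


# The prefix comes from whichever Router Advertisement the host heard.
print(slaac_address("2001:db8::/64", "00:1a:2b:3c:4d:5e"))
```

Nothing in this calculation authenticates the router, which is the gap an RA-spoofing tool exploits.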

From Blog – Hackaday via this RSS feed

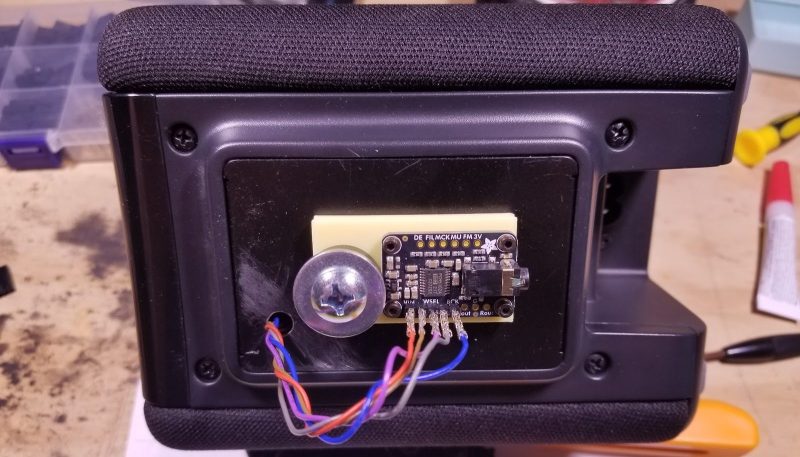

Sometimes you find a commercial product that is almost, but not exactly, perfect for your needs. Your choices become: hack together a DIY replacement, or hack the commercial product to do what you need. [Daniel Epperson] chose door number two when he realized his Yamaha MusicCast smart speaker was perfect for his particular use case, except for its tragic lack of line out. A little surgery and a Digital-to-Analog Converter (DAC) breakout board solved that problem.

You can’t hear it in this image, but the headphones work.

[Daniel] first went diving into the datasheet of the Yamaha amplifier chip inside the speaker, before realizing it did too much DSP for his taste. He did learn that the chip was getting I2S signals from the speaker’s Wi-Fi module. That’s a lucky break, since I2S is an open, well-known protocol. [Daniel] had an Adafruit DAC; he only needed to get the I2S signals from the smart speaker’s board to his breakout. That proved to be an adventure, but we’ll let [Daniel] tell the tale on his blog.

After a quick bit of OpenSCAD and 3D printing, the DAC was firmly mounted in its new home. Now [Daniel] has the exact audio streaming solution he wanted: Yamaha’s MusicCast, with line out to his own hi-fi.

[Daniel] and Hackaday go way back: we featured his robot lawnmower in 2013. It’s great to see he’s still hacking. If you’d rather see what’s behind door number one, this roll-your-own smart speaker may whet your appetite.

Most of us have some dream project or three that we’d love to make a reality. We bring it up all the time with friends, muse on it at work, and research it during our downtime. But that’s just talk—and it doesn’t actually get the project done!

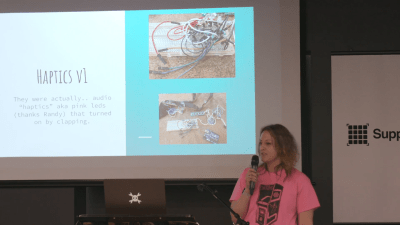

At the 2024 Hackaday Supercon, Sarah Vollmer made it clear that her presentation is about turning talk into action. It’s about how to overcome all the hurdles that get in the way of achieving your grand project, so you can actually make it a reality. It might sound like a self-help book, and it kind of is, but it’s rooted in the experience of a bona fide maker who’s been there and done that a few times over.

At the outset, Sarah advises us on the value of friends when you’re pursuing a project. They might be your greatest cheerleaders, or a wellspring of good ideas. In her case, she also cites several of her contacts in the broader community who have helped her along the way, with a particular shoutout to Randy Glenn, who also gave us a great Supercon talk last year on the value of the CAN bus. At the same time, your friends might, with good intentions, lead you in the wrong direction, with help or suggestions that could derail your project. Her advice is to take what’s useful, and politely sidestep or decline what won’t help your project.

Next, Sarah highlights the importance of watching out for foes. “Every dream has your dream crushers,” says Sarah. “It could be you, it could be the things that are being told to you.” Excessive criticism can be crushing, sapping you of the momentum you need to get started. She also relates it to her own experience, where her project faced a major hurdle—the tedious procurement process of a larger organization, and the skepticism around whether she could overcome it. Whatever threatens the progress of your project could be seen as a foe—but the key is knowing what is threatening your project.

Sarah’s talk is rooted in her personal experiences across her haptics work and other projects.

The third step Sarah recommends? Finding a way to set goals amidst the chaos. Your initial goals might be messy or vague, but often the end gets clearer as you start moving. “Be clear about what you’re doing so you can keep your eye on the prize,” says Sarah. “No matter what gets in your way, as long as you’re clear about what you’re doing, you can get there.” She talks about how she started with a simple haptics project some years ago. Over the years, she kept iterating and building on what she was trying to do with it, with a clear goal, and made great progress in turn.

Once your project is in motion, it’s also important not to let it get killed by criticism. Cries of “Impossible!” might be hard to ignore, but often, Sarah notes, these brick walls are really problems you can solve by creating action items. She also notes the value of using whatever you can to progress towards your goals. She talks about how she was able to parlay a Hackaday article on her work (and her previous 2019 Supercon talk) into access to an accelerator program, which helped her start her nascent lab supply business.

Sarah’s previous Hackaday Supercon appearance helped open doors for her work in haptics.

Anyone who has ever worked in a corporate environment will also appreciate Sarah’s advice to avoid the lure of endless planning, which can derail even the best-planned project. “Once upon a time I went to meetings, those meetings became meetings about meetings,” she says. “Those meetings about meetings became about planning, they went on for four hours on a Friday, [and] I just stopped going.” Her ultimate dot point? “We don’t talk, talk is cheap, but too much talk is bankrupting.”

“When all else fails, laugh and keep going,” Sarah advises. She provides an example of a 24/7 art installation she worked on that was running across multiple physical spaces spread across the globe. “During the exhibit, China got in a fight with Google,” she says. This derailed plans to use certain cloud buckets to run things, but with good humor and the right attitude, the team were able to persevere and work around what could have been a disaster.

Overall, this talk is a rapid-fire crash course in how she pushed her projects through challenges and hurdles and came out on top. Just beware: if you’re offended by the use of AI art, this one might not be for you. Sarah talks fast and covers a lot of ground, but if you can keep up, there are a few kernels of wisdom in there that you might like to take forward.

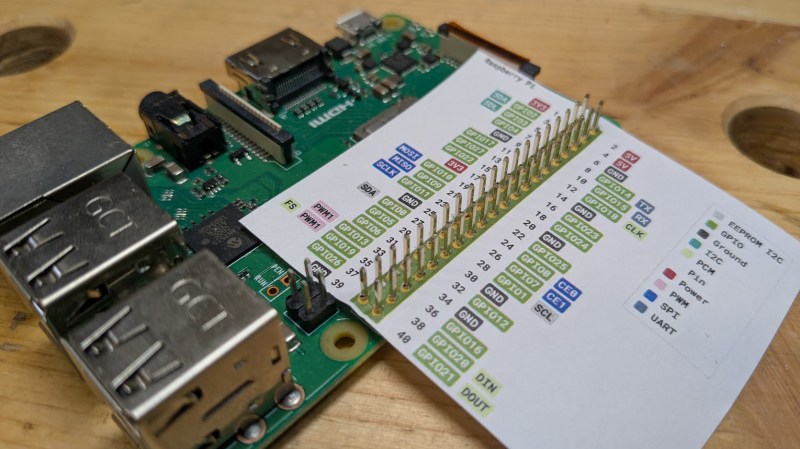

We all appreciate clear, easy-to-read reference materials. In that pursuit, [Andreas] over at Splitbrain sent in his latest project, Pinoutleaf. This useful web app simplifies the creation of clean, professional board pinout reference images.

The app uses YAML or JSON configuration files to define the board, including photos for the front and back, the number and spacing of pins, and their names and attributes. For example, you can designate pin 3 as GPIO3 or A3, and the app will color-code these layers accordingly. The tool is designed to align with the standard 0.1″ pin spacing commonly used in breadboards. One clever feature is the automatic mirroring of labels for the rear photo, a lifesaver when you need to reverse-mount a board. Once your board is configured, Pinoutleaf generates an SVG image that you can download or print to slide over or under the pin headers, keeping your reference key easily accessible.
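Pinoutleaf’s own code lives on its GitHub page; what follows is only a hypothetical Python sketch of two ideas described above: placing labels on the standard 0.1″ (2.54 mm) grid, and reversing their order for the rear view so the key still lines up when the board is flipped. The function names and the SVG layout are made up for illustration.

```python
from xml.sax.saxutils import escape

PITCH_MM = 2.54  # standard 0.1" header spacing


def label_positions(pins: list[str], mirror: bool = False) -> list[tuple[float, str]]:
    """Return (x in mm, label) for one row of header pins, pin 1 first.

    With mirror=True the row is reversed, as it appears when the board
    is flipped over and viewed from the back.
    """
    n = len(pins)
    return [
        (round(((n - 1 - i) if mirror else i) * PITCH_MM, 3), name)
        for i, name in enumerate(pins)
    ]


def row_svg(pins: list[str], mirror: bool = False) -> str:
    """Render one row of labels as a small SVG strip (1 user unit = 1 mm)."""
    width = max(len(pins), 1) * PITCH_MM
    texts = "".join(
        f'<text x="{x + PITCH_MM / 2:.2f}" y="3" font-size="1.2" '
        f'text-anchor="middle">{escape(name)}</text>'
        for x, name in label_positions(pins, mirror)
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width:.2f}mm" '
        f'height="4mm" viewBox="0 0 {width:.2f} 4">{texts}</svg>'
    )


print(row_svg(["GND", "3V3", "GPIO3"], mirror=True))
```

Sizing the SVG in millimeters is what lets a printout sit directly against real headers without rescaling.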

Visit the GitHub page to explore the tool’s features, including its Command-Line Interface for batch-generating pinouts for multiple boards. Creating clear documentation is challenging, so we love seeing projects like Pinoutleaf that make it easier to do it well.

Picasso and the Z80 microprocessor are not two things we often think about at the same time. One is a renowned artist born in the 19th century, the other, a popular CPU that helped launch the microcomputer movement. And yet, the latter has come to inspire a computer based on the former. Meet the RC2014 Mini II Picasso!

As [concretedog] tells the story, what you’re fundamentally looking at is an RC2014 Mini II. As we’ve discussed previously, it’s a single-board Z80 retrocomputer that you can use to do fun things like run BASIC, Forth, or CP/M. However, where it gets kind of fun is in the layout. It’s the same fundamental circuitry as the RC2014, but it’s been given a rather artistic flair. The ICs are twisted this way and that, as are the passive components; even some of the resistors are dancing all over the top of one another. The kit is a limited edition, too, with each coming with a unique combination of colors where the silkscreen and sockets and LED are concerned. Kits are available via Z80Kits for those interested.

We love a good artistic PCB design; indeed, we’ve supported the artform heavily at Supercon and beyond. It’s neat to see the RC2014 designers reminding us that components need not live on a rigid grid; they too can dance and sway and flop all over the place like the eyes or nose on a classic Picasso.

It’s weird, though; in a way, despite the Picasso inspiration, the whole thing ends up looking distinctly of the 1990s. In any case, if you’re cooking up any such kooky builds of your own, modelled after Picasso or any other Spanish master, don’t hesitate to notify the tipsline.

Low-quality image placeholders (LQIPs) have a solid place in web page design. There are many different solutions, but the main gotcha is that generating them tends to lean on JavaScript, require lengthy chunks of not-particularly-human-readable code, or involve other tradeoffs. [Lean] came up with an elegant, minimal solution in pure CSS to create LQIPs.

Here’s how it works: all required data is packed into a single CSS integer, which is decoded directly in CSS (no JavaScript needed) to dynamically generate an image that renders immediately. Another benefit is that, without any need for wrappers or long strings of data, this method avoids cluttering the HTML. The code is little more than a single line, which is certainly tidy, as well as a welcome boon to those who hand-edit files.

The trick with generating LQIPs from scratch is getting an output that isn’t hard on the eyes or otherwise jarring in its composition. [Lean] experimented until settling on an encoding method that reliably delivered smooth color gradients and balance.

This method therefore turns a single integer into a perfectly serviceable LQIP, using only CSS. There’s even a separate tool [Lean] created to compress any given image into the integer format used (so the result will look like a blurred version of the original image). It’s true that the results look very blurred, but the code is clean and minimal, and the technique is easily implemented. You can see it in action in [Lean]’s interactive LQIP gallery.
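To make the “everything in one integer” idea concrete, here is a Python sketch of packing and unpacking a compact placeholder with bit fields. The layout (an 8-bit 3-3-2 RGB base color followed by six 2-bit lightness cells for a 3×2 grid) is an assumption chosen for illustration, not [Lean]’s actual encoding, and his decoder does this arithmetic in CSS rather than Python.

```python
CELL_BITS = 2   # hypothetical: 4 lightness levels per cell
NUM_CELLS = 6   # hypothetical: a 3x2 grid of cells
CELL_MASK = (1 << CELL_BITS) - 1


def pack_lqip(base_rgb: tuple[int, int, int], cells: list[int]) -> int:
    """Pack a 3-3-2 bit base color plus six 2-bit cells into one integer."""
    r, g, b = base_rgb
    if not (0 <= r < 8 and 0 <= g < 8 and 0 <= b < 4):
        raise ValueError("base color must fit in 3-3-2 bits")
    if len(cells) != NUM_CELLS or any(not 0 <= c <= CELL_MASK for c in cells):
        raise ValueError("need six cells, each 0..3")
    value = (r << 5) | (g << 2) | b          # 8 bits of base color
    for c in cells:                          # then 12 bits of lightness
        value = (value << CELL_BITS) | c
    return value                             # 20 bits in total


def unpack_lqip(value: int) -> tuple[tuple[int, int, int], list[int]]:
    """Undo pack_lqip with shifts and masks: the arithmetic a decoder needs."""
    cells = []
    for _ in range(NUM_CELLS):
        cells.append(value & CELL_MASK)
        value >>= CELL_BITS
    cells.reverse()
    r, g, b = (value >> 5) & 0b111, (value >> 2) & 0b111, value & 0b11
    return (r, g, b), cells


packed = pack_lqip((5, 2, 1), [3, 0, 1, 2, 3, 0])
print(packed, unpack_lqip(packed))
```

Whatever the real layout is, a decoder has to peel the fields off in the same fixed order they were packed, which is why the encoder tool and the CSS must agree exactly on the bit layout.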

CSS has a lot of capability baked into it, and it’s capable of much more than just styling and lining up elements. How about trigonometric functions in CSS? Or from the other direction, check out implementing a CSS (and HTML) renderer on an ESP32.

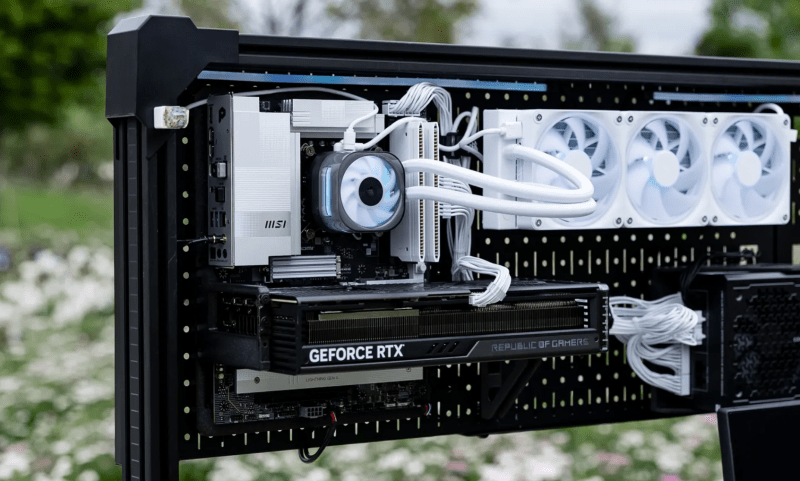

Sometimes it seems odd that we would spend hundreds (or thousands) on PC components that demand oodles of airflow, and stick them in a little box, out of sight. The fine folks at Corsair apparently agree, because they’ve released files for an open-frame pegboard PC case on Printables.

According to the writeup on their blog, these prints have held up just fine with ordinary PLA; apparently there’s enough airflow around the parts that heat sagging isn’t the issue we would have suspected. ATX and ITX motherboards are both supported, along with a few power supply form factors. If your printer is smaller, the ATX mount is pre-sectioned for your convenience. Their GPU brackets can accommodate beefy dual- and triple-slot models. It’s all there, if you want to unbox and show off your PC build like the work of engineering art it truly is.

Of course, these files weren’t released out of the kindness of Corsair’s corporate heart; they’re meant to be used with fancy pegboard desks the company also sells. Still, to their credit, they did release the files under a Creative Commons Attribution-ShareAlike 4.0 (CC BY-SA 4.0) license. That means there’s nothing stopping an enterprising hacker from remixing this design for the ubiquitous SKÅDIS or any other pegboard should they so desire.

We’ve covered artful open cases before here on Hackaday, but if you prefer to hide the expensive bits from dust and cats, this midcentury box might be more your style. If you’d rather no one know you own a computer at all, you can always do the exact opposite of this build, and hide everything inside the desk.

Telescopes are great tools for observing the heavens, or even surrounding landscapes if you have the right vantage point. You don’t have to be a professional to build one, though; you can make all kinds of telescopes as an amateur, as this guide from the Springfield Telescope Makers demonstrates.

The guide is remarkably deep and rich, which is no surprise given that the Springfield Telescope Makers club dates back to the early 20th century. It starts out with the basics: how to select a telescope, and how to decide whether to make or buy your desired instrument. It also explains in good detail why you might want to start with a simple Newtonian reflector on a Dobsonian mount if you’re crafting your first telescope, in no small part because mirrors are so much easier to craft than lenses for the amateur. From there, the guide gets into the nitty-gritty of mirror production, right down to grinding and polishing techniques, as well as how to test your optical components and assemble your final telescope.
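For a sense of scale, standard mirror geometry (not taken from the guide itself) gives the depth, or sagitta, at the edge of a mirror of radius r and focal length f as r²/(4f) for a paraboloid. The Python sketch below compares that with a sphere of the same focal length (radius of curvature R = 2f). For a modest mirror the two differ by well under a micron, which is why amateurs typically grind a sphere first and then “figure” it toward a paraboloid during polishing and testing.

```python
import math


def paraboloid_sagitta_mm(diameter_mm: float, focal_ratio: float) -> float:
    """Depth at the edge of a paraboloidal mirror: r^2 / (4 f)."""
    focal_length = diameter_mm * focal_ratio
    r = diameter_mm / 2
    return r * r / (4 * focal_length)


def sphere_sagitta_mm(diameter_mm: float, focal_ratio: float) -> float:
    """Depth at the edge of a sphere with the same focal length (R = 2f)."""
    radius_of_curvature = 2 * diameter_mm * focal_ratio
    r = diameter_mm / 2
    return radius_of_curvature - math.sqrt(radius_of_curvature**2 - r * r)


# A 6-inch (152.4 mm) f/8 mirror, a classic beginner size.
d, n = 152.4, 8
para = paraboloid_sagitta_mm(d, n)
sphere = sphere_sagitta_mm(d, n)
print(f"paraboloid sagitta: {para:.3f} mm")
print(f"sphere minus paraboloid: {1000 * (sphere - para):.2f} microns")
```

The roughly 1.2 mm total depth is set by rough grinding, while the sub-micron difference between sphere and paraboloid is the territory of polishing and careful optical testing.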

It’s hard to imagine a better place to start than here as an amateur telescope builder. It’s a rich mine of experience and practical advice that should give you the best possible chance of success. You might also like to peruse some of the other telescope projects we’ve covered previously. And, if you succeed, you can always tell us your tales on the tipsline!

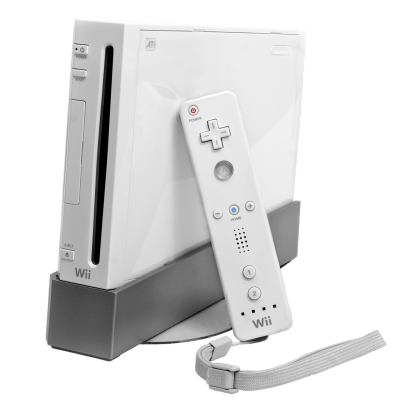

Historically, efforts to create original games and tools, port over open source emulators, and explore a game console’s hardware and software have been generally lumped together under the banner of “homebrew.” While not the intended outcome, it’s often the case that exploring a console in this manner unlocks methods to run pirated games. For example, if a bug is found in the system’s firmware that enables a clever developer to run “Hello World”, you can bet that the next thing somebody tries to write is a loader that exploits that same bug to play a ripped commercial game.

But for those who are passionate about being able to develop software for their favorite game consoles, and the developers who create the libraries and toolchains that make that possible, the line between homebrew and piracy is a critical boundary. The general belief has always been that keeping piracy at arm’s length made it less likely that the homebrew community would draw the ire of the console manufacturers.

As such, homebrew libraries and tools are held to a particularly high standard. Homebrew can only thrive if developed transparently, and every effort must be taken to avoid tainting the code with proprietary information or code. Any deviation could be the justification a company like Nintendo or Sony needs to swoop in.

Unfortunately, there are fears that covenant has been broken in light of multiple allegations of impropriety against the developers of libogc, the C library used by nearly all homebrew software for the Wii and GameCube. From potential license violations to uncomfortable questions about the origins of the project, there’s mounting evidence that calls the viability of the library into question. Some of these allegations, if true, would effectively mean the distribution and use of the vast majority of community-developed software for both consoles is now illegal.

Homebrew Channel Blows the Whistle

For those unfamiliar, the Wii Homebrew Channel (HBC) is a front-end used to load homebrew games and programs on the Nintendo Wii, and is one of the very first things anyone who’s modded their console will install. It’s not an exaggeration to say that essentially anyone who’s run homebrew software on their Wii has done it through HBC.

But as of a few days ago, the GitHub repository for the project was archived, and lead developer Hector Martin added a long explanation to the top of its README that serves as an overview of the allegations being made against the team behind libogc.

Somewhat surprisingly, Martin starts by admitting that he’s believed libogc contained ill-gotten code since at least 2008. He accuses the developers of decompiling commercial games to get access to the C code, as well as copying from leaked documentation from the official Nintendo software development kit (SDK).

For many, that would have been enough to stop using the library altogether. In his defense, Martin claims that he and the other developers of the HBC didn’t realize the full extent to which libogc copied code from other sources. Had they realized, Martin says they would have launched an effort to create a new low-level library for the Wii.

But as the popularity of the Homebrew Channel increased, Martin and his team felt they had no choice but to reluctantly accept the murky situation with libogc for the good of the Wii homebrew scene, and left the issue alone. That is, until new information came to light.

Inspiration Versus Copying

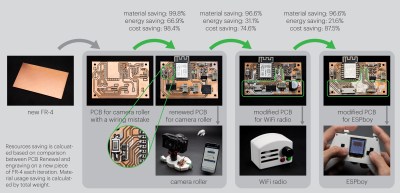

The story then fast-forwards to the present day, and new claims from others in the community that large chunks of libogc were actually copied from the Real-Time Executive for Multiprocessor Systems (RTEMS) project, a real-time operating system that was originally designed for military applications but these days finds itself used in a wide range of embedded systems. Martin links to a GitHub repository maintained by a user known as derek57 that supposedly reverses the obfuscation the libogc developers applied to try and hide the fact that they had merged in code from RTEMS.

Now, it should be pointed out that RTEMS is actually an open source project. As you might expect from a codebase that dates back to 1993, these days it includes several licenses that were inherited from bits of code added over the years. But the primary and preferred license is BSD 2-Clause, which Hackaday readers may know is a permissive license that gives other projects the right to copy and reuse the code more or less however they choose. All it asks in return is attribution, that is, for the redistributed code to retain the copyright notice which credits the original authors.

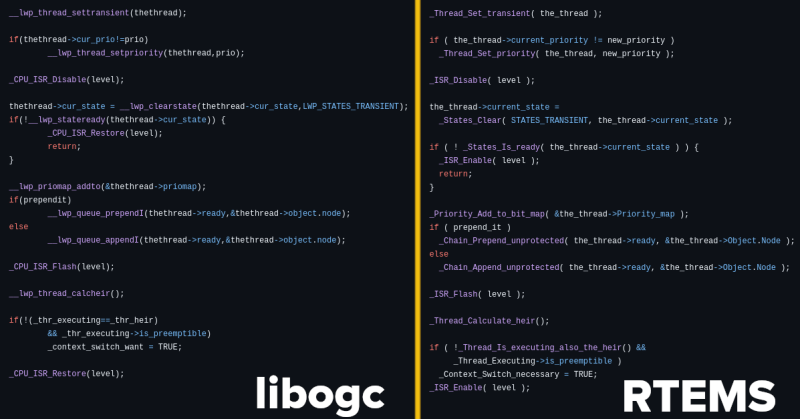

In other words, if the libogc developers did indeed copy code from RTEMS, all they had to do was properly credit the original authors. Instead, it’s alleged that they superficially refactored the code to make it appear different, presumably so they would not have to acknowledge where they sourced it from. Martin points to the following function as an example of RTEMS code being rewritten for libogc:

While this isolated function doesn’t necessarily represent the entirety of the story, it does seem hard to believe that the libogc implementation could be so similar to the RTEMS version by mere happenstance. Even if the code was not literally copy and pasted from RTEMS, it’s undeniable that it was used as direct inspiration.

libogc Developers Respond

At the time of this writing, there doesn’t appear to be an official response to the allegations raised by Martin and others in the community. But individual developers involved with libogc have attempted to explain their side of the story through social media, comments on GitHub issues, and personal blog posts.

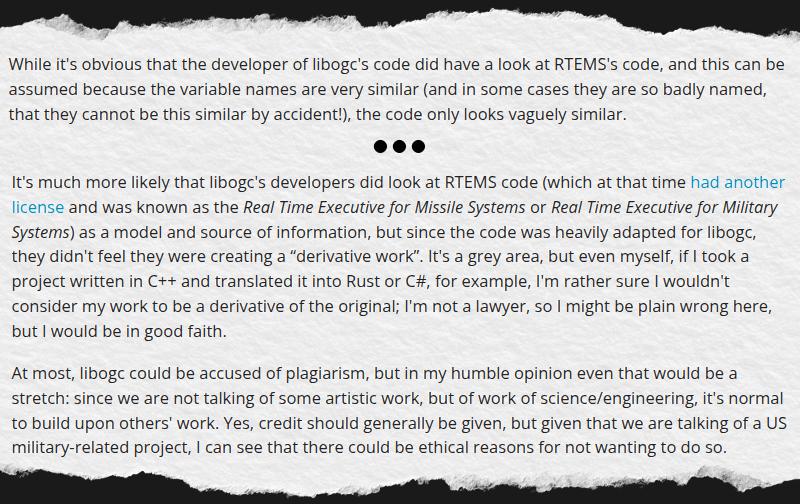

The most detailed comes from Alberto Mardegan, a relatively new contributor to libogc. While the code in question was added before his time with the project, he directly addresses the claim that functions were lifted from RTEMS in a blog post from April 28th. While he defends the libogc developers against the accusations of outright code theft, his conclusions are not exactly a ringing endorsement for how the situation was handled:

In short, Mardegan admits that some of the code is so similar that it must have been at least inspired by reading the relevant functions from RTEMS, but that he believes this falls short of outright copyright infringement. As to why the libogc developers didn’t simply credit the RTEMS developers anyway, he theorizes that they may have wanted to avoid any association with a project originally developed for military use.

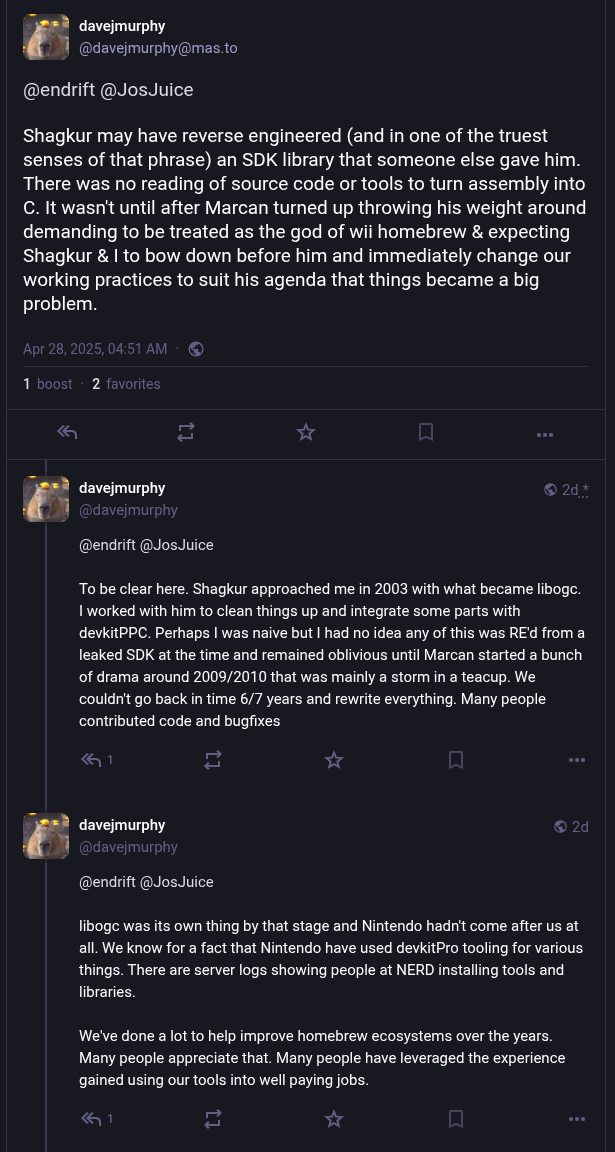

As for claims that libogc was based on stolen Nintendo code, the libogc developers seem to consider it irrelevant at this point. When presented with evidence that the library was built on proprietary code, Dave [WinterMute] Murphy, who maintains the devkitPro project that libogc is a component of, responded that “The official stance of the project is that we have no interest in litigating something that occurred 21 years ago”.

In posts to Mastodon, Murphy acknowledges that some of the code may have been produced by reverse engineering parts of the official Nintendo SDK, but then goes on to say that “There was no reading of source code or tools to turn assembly into C”.

From his comments, it’s clear that Murphy believes that the benefit of having libogc available to the community outweighs concerns over its origins. Further, he feels that enough time has passed since its introduction that the issue is now moot. In comparison, when other developers in the homebrew and emulator community have found themselves in similar situations, they’ve gone to great lengths to avoid tainting their projects with leaked materials.

Doing the Right Thing?

The Wii Homebrew Channel itself had not seen any significant updates in several years, so Martin archiving the project was somewhat performative to begin with. This would seem to track with his reputation — in addition to clashes with the libogc developers, Martin has also recently left Asahi Linux after a multi-bag-of-popcorn spat within the kernel development community that ended with Linus Torvalds declaring that “the problem is you”.

But that doesn’t mean there isn’t merit to some of his claims. At least part of the debate could be settled by simply acknowledging that RTEMS was an inspiration for libogc in the library’s code or documentation. The fact that the developers seem reluctant to make this concession in light of the evidence is troubling. If not an outright license violation, it’s at least a clear disregard for the courtesy and norms of the open source community.

As for how the leaked Nintendo SDK factors in, there probably isn’t enough evidence one way or another to ever determine what really happened. Martin says code was copied verbatim, the libogc team says it was reverse engineered.

The key takeaway here is that both parties agree that the leaked information existed, and that it played some part in the origins of the library. The debate therefore isn’t so much about whether the leaked information was used, but how it was used. For some developers, that alone would be enough to pass on libogc and look for an alternative.

Of course, in the end, that’s the core of the problem. There is no alternative, and nearly 20 years after the Wii was released, there’s little chance of another group having the time or energy to create a new low-level C library for the system. Especially without good reason.

The reality is that whatever interaction there was with the Nintendo SDK happened decades ago, and if anyone was terribly concerned about it there would have been repercussions by now. By extension, it seems unlikely that any projects that rely on libogc will draw the attention of Nintendo’s legal department at this point.

In short, life will go on for those still creating and using homebrew on the Wii. But for those who develop and maintain open source code, consider this to be a cautionary tale — even if we can’t be completely sure of what’s fact or fiction in this case.

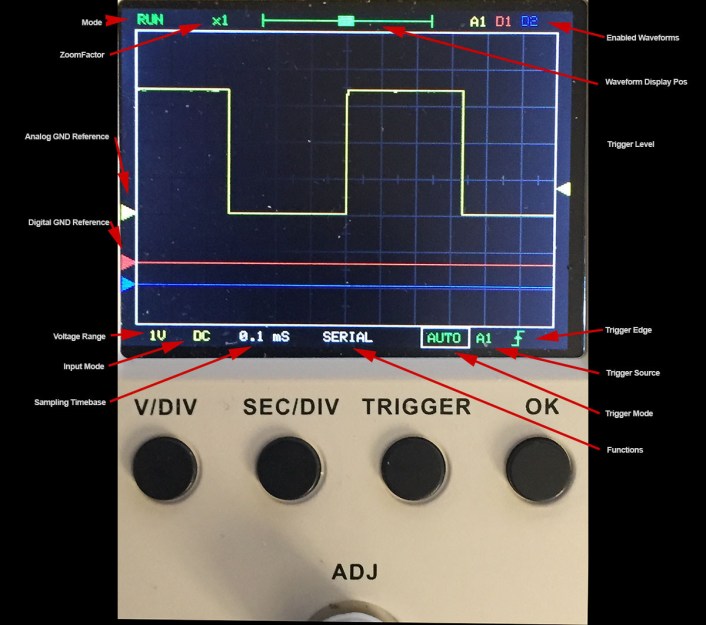

The Jye Tech DSO-150 is a capable compact scope that you can purchase as a kit. If you’re really feeling the DIY ethos, you can go even further, too, and kit your scope out with the latest open source firmware.

The Open-DSO-150 firmware is a complete rewrite from the ground up, and packs the scope with lots of neat features. You get one analog or three digital channels, and triggers are configurable for rising, falling, or both edges on all signals. There is also a voltmeter mode, serial data dump feature, and a signal statistics display for broader analysis.
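The edge-trigger options boil down to a simple comparison on consecutive samples. The Python below is a hypothetical sketch of that idea for a buffer of already-captured samples; it is not taken from the Open-DSO-150 firmware, which runs on the scope’s microcontroller, and a real implementation would typically add hysteresis so that noise around the threshold doesn’t fire repeated triggers.

```python
from typing import Literal, Sequence

Edge = Literal["rising", "falling", "both"]


def find_triggers(samples: Sequence[float], level: float, edge: Edge = "rising") -> list[int]:
    """Return indices where the signal crosses `level` on the chosen edge(s)."""
    if edge not in ("rising", "falling", "both"):
        raise ValueError(f"unknown edge: {edge!r}")
    hits = []
    for i in range(1, len(samples)):
        prev, cur = samples[i - 1], samples[i]
        rising = prev < level <= cur    # crossed upward through the level
        falling = prev >= level > cur   # crossed downward through the level
        if (rising and edge in ("rising", "both")) or (falling and edge in ("falling", "both")):
            hits.append(i)
    return hits


wave = [0, 1, 2, 1, 0, 1, 2]
print(find_triggers(wave, 1.5, "both"))
```

The asymmetric comparisons (`<` on one side, `<=` on the other) ensure a sample sitting exactly on the level counts as a single crossing rather than two.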

For the full list of features, just head over to the GitHub page. If you’re planning to install it on your own DSO-150, you can build the firmware in the free STM32 version of Atollic trueSTUDIO.

If you’re interested in the Jye Tech DSO-150 as it comes from the factory, we’ve published our very own review, too. Meanwhile, if you’re cooking up your own scope hacks, don’t hesitate to let us know!

Thanks to [John] for the tip!

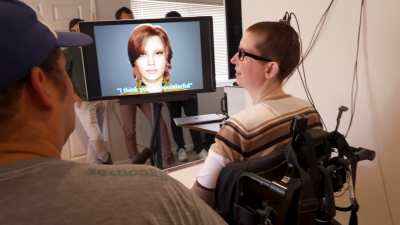

Brain-to-speech interfaces have been promising to help paralyzed individuals communicate for years. Unfortunately, many systems have had significant latency that has left them lacking somewhat in the practicality stakes.

A team of researchers across UC Berkeley and UC San Francisco has been working on the problem and made significant strides forward in capability. A new system developed by the team offers near-real-time speech—capturing brain signals and synthesizing intelligible audio faster than ever before.

New Capability

The aim of the work was to create more naturalistic speech using a brain implant and voice synthesizer. While this technology has been pursued previously, it faced serious issues around latency, with delays of around eight seconds to decode signals and produce an audible sentence. New techniques had to be developed to speed up the process and slash the delay between a user trying to “speak” and the hardware outputting the synthesized voice.

The implant developed by researchers is used to sample data from the speech sensorimotor cortex of the brain, the area that controls the mechanical hardware of speech: the face, vocal cords, and all the other associated body parts that help us vocalize. The implant captures signals via an electrode array surgically implanted into the brain itself. The data captured by the implant is then passed to an AI model, which figures out how to turn that signal into the right audio output to create speech. “We are essentially intercepting signals where the thought is translated into articulation and in the middle of that motor control,” said Cheol Jun Cho, a Ph.D. student at UC Berkeley. “So what we’re decoding is after a thought has happened, after we’ve decided what to say, after we’ve decided what words to use, and how to move our vocal-tract muscles.”

The AI model had to be trained to perform this role. This was achieved by having a subject, Ann, look at prompts and attempt to “speak” the phrases. Ann has been paralyzed since a stroke that left her unable to speak. However, when she attempts to speak, relevant regions in her brain still light up with activity, and sampling this enabled the AI to correlate certain brain activity with intended speech. Unfortunately, since Ann could no longer vocalize herself, there was no target audio for the AI to correlate the brain data with. Instead, researchers used a text-to-speech system to generate simulated target audio for the AI to match with the brain data during training. “We also used Ann’s pre-injury voice, so when we decode the output, it sounds more like her,” explains Cho. A recording of Ann speaking at her wedding provided source material to help personalize the speech synthesis to sound more like her original speaking voice.

To measure performance of the new system, the team compared the time it took the system to generate speech to the first indications of speech intent in Ann’s brain signals. “We can see relative to that intent signal, within one second, we are getting the first sound out,” said Gopala Anumanchipalli, one of the researchers involved in the study. “And the device can continuously decode speech, so Ann can keep speaking without interruption.” Crucially, too, this speedier method didn’t compromise accuracy—in this regard, it decoded just as well as previous slower systems.

Pictured is Ann using the system to speak in near-real-time. The system also features a video avatar. Credit: UC Berkeley

The decoding system works in a continuous fashion—rather than waiting for a whole sentence, it processes in small 80-millisecond chunks and synthesizes on the fly. The algorithms used to decode the signals were not dissimilar from those used by smart assistants like Siri and Alexa, Anumanchipalli explains. “Using a similar type of algorithm, we found that we could decode neural data and, for the first time, enable near-synchronous voice streaming,” he says. “The result is more naturalistic, fluent speech synthesis.”
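The latency win comes from the shape of the loop: output is produced per chunk instead of per sentence, so the delay is bounded by the chunk length plus decode time. Here is a hypothetical Python sketch of that structure. The 200 Hz feature rate and the `decode_chunk` callback are placeholders, not details of the Berkeley/UCSF system, whose per-chunk decoding is done by a trained AI model.

```python
from typing import Callable, Iterable, Iterator, Sequence, TypeVar

T = TypeVar("T")


def stream_chunks(frames: Iterable[float], rate_hz: float,
                  chunk_ms: float = 80.0) -> Iterator[list[float]]:
    """Group a live feature stream into fixed-duration chunks as it arrives."""
    chunk_len = round(rate_hz * chunk_ms / 1000)
    if chunk_len < 1:
        raise ValueError("chunk shorter than one sample")
    buf: list[float] = []
    for frame in frames:
        buf.append(frame)
        if len(buf) == chunk_len:
            yield buf          # hand off immediately instead of waiting for a sentence
            buf = []
    if buf:
        yield buf              # flush whatever is left when the stream ends


def streaming_decode(frames: Iterable[float], rate_hz: float,
                     decode_chunk: Callable[[Sequence[float]], T]) -> Iterator[T]:
    """Run a (hypothetical) per-chunk decoder on each chunk as soon as it fills."""
    for chunk in stream_chunks(frames, rate_hz):
        yield decode_chunk(chunk)
```

At the assumed 200 Hz rate, each 80 ms chunk holds 16 samples, so output can start after the first chunk rather than after the whole sentence has been captured.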

It was also key to determine whether the AI model was genuinely communicating what Ann was trying to say. To investigate this, Ann was asked to try and vocalize words outside the original training data set, such as the NATO phonetic alphabet. “We wanted to see if we could generalize to the unseen words and really decode Ann’s patterns of speaking,” said Anumanchipalli. “We found that our model does this well, which shows that it is indeed learning the building blocks of sound or voice.”

For now, this is still groundbreaking research—it’s at the cutting edge of machine learning and brain-computer interfaces. Indeed, it’s the former that seems to be making a huge difference to the latter, with neural networks seemingly the perfect solution for decoding the minute details of what’s happening with our brainwaves. Still, it shows us just what could be possible down the line as the distance between us and our computers continues to get ever smaller.

Featured image: A researcher connects the brain implant to the supporting hardware of the voice synthesis system. Credit: UC Berkeley

Hackaday

Fresh hacks every day