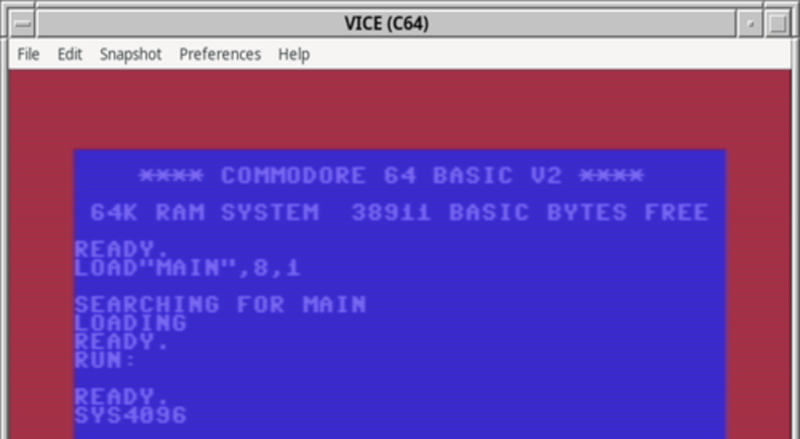

[Michal Sapka] wanted to learn a new skill, so he decided on the Commodore 64 assembly language. We didn’t say he wanted to learn a new skill that might land him a job. But we get it and even applaud it. Especially since he’s written a multi-part post about what he’s doing and how you can do it, too. So far, there are four parts, and we’d bet there are more to come.

The series starts with the obligatory “hello world,” as well as some basic setup steps. By part 2, you are learning about registers and numbers. Part 3 covers some instructions, and by part 4, he finds that there are even more registers to contend with.

One of the great things about doing a project like this today is that you don’t have to have real hardware. Even if you want to eventually run on real hardware, you can edit in comfort, compile on a fast machine, and then debug and test on an emulator. [Michal] uses VICE.

The series is far from complete, and we hear part 5 will talk about branching, so this is a good time to catch up.

We love applying modern tools to old software development.

From Blog – Hackaday via this RSS feed

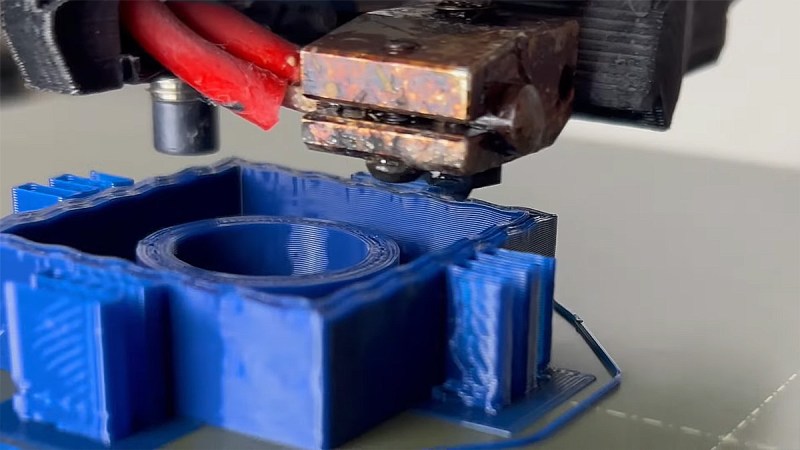

Strengthening FDM prints has been discussed in detail in recent years. Solutions and results vary, as everyone’s needs differ. Now [TenTech] shares his latest improvements to the post-processing script he first created around January. This script literally bends your G-code to its will, using non-planar, interlocking sine wave deformations in both infill and walls. It’s now open-source, and plugs right into your slicer of choice: PrusaSlicer, OrcaSlicer, or Bambu Studio. If you’re into pushing your print strength past the limits of layer adhesion, but his earlier solution wasn’t quite the right fit for your printer, give this improved version a try.

Traditional Fused Deposition Modeling (FDM) prints break along layer lines. What makes this script exciting is that it lets you introduce alternating sine wave paths between wall loops, removing clean break points and encouraging interlayer grip. Think of it as organic layer interlocking – without switching to resin or fiber reinforcement. You can tweak amplitude, frequency, and direction per feature. In fact, the deformation even fades between solid layers, allowing smoother transitions. Structural tinkering at its finest, not just a cosmetic gimmick.
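To get a feel for what “bending” G-code means, here is a minimal Python sketch of a sine wave deformation applied to individual moves. It is a hypothetical illustration, not [TenTech]’s script: the `deform_move()` function, sampling the wave along X, and flipping the phase by π on alternate wall loops are all assumptions. It only handles absolute-coordinate `G1` moves and skips the move segmentation and extrusion scaling a real post-processor needs.

```python
import math
import re

# Matches X/Y/Z words such as "X10.5" or "Z-0.2" in a G-code command
AXIS_RE = re.compile(r"([XYZ])(-?\d+(?:\.\d+)?)")
# Matches an existing Z word (with its leading whitespace) so it can be replaced
Z_WORD_RE = re.compile(r"\s*Z-?\d+(?:\.\d+)?")


def deform_move(line: str, layer_z: float, amplitude: float,
                wavelength: float, phase: float = 0.0) -> str:
    """Give an absolute G1 move a Z height that follows a sine of its X position.

    Hypothetical sketch: real post-processors also split long moves into
    short segments, adjust extrusion, and handle relative coordinates.
    """
    code = line.split(";", 1)[0].strip()  # drop any trailing comment
    words = code.split()
    if not words or words[0] != "G1":
        return line  # leave non-move commands untouched
    axes = {axis: float(value) for axis, value in AXIS_RE.findall(code)}
    if "X" not in axes:
        return line  # no X position to sample the wave at
    z = layer_z + amplitude * math.sin(2 * math.pi * axes["X"] / wavelength + phase)
    # Remove any existing Z word, then append the deformed height
    return f"{Z_WORD_RE.sub('', code)} Z{z:.3f}"


# Flip the phase by pi on every other wall loop so neighboring crests and troughs interlock
loops = [["G1 X0 Y0 E0.1", "G1 X2.5 Y0 E0.1"],
         ["G1 X0 Y0.45 E0.1", "G1 X2.5 Y0.45 E0.1"]]
for i, loop in enumerate(loops):
    for move in loop:
        print(deform_move(move, layer_z=0.4, amplitude=0.2, wavelength=10.0,
                          phase=math.pi * (i % 2)))
```

The phase flip is what turns two wavy loops into an interlock: where one loop rises, its neighbor dips, so there is no flat plane for a crack to follow.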

It doesn’t need a custom slicer or any firmware mods. Just Python, a little G-code, and a lot of curious minds. [TenTech] is still looking for real-world strength tests, so if you’ve got a test rig and some engineering curiosity, this is your call to arms.

The script can be found on his GitHub. Watch his full video, get the script, and let us know your mileage!


These days you can run Doom on just about anything, and porting Doom to JavaScript is about as novel as writing Snake in BASIC on a graphing calculator. In a twist, [Patrick Trainer] had the idea to use SQL instead of JS to do the heavy lifting of the Doom game loop. Backed by the WebAssembly (WASM) version of the DuckDB analytical database, he coded a Doom-lite clone that demonstrates the principle that anything in life can be captured in a spreadsheet or database application.

Rather than implementing the game world state in JavaScript objects, or drawing pixels to a Canvas/WebGL surface, this implementation models the entire world state in the database. To render the player’s view, an SQL VIEW performs raytracing (in SQL, of course). All events, including movement, are defined as SQL statements. A bullet hitting a wall or an enemy results in the bullet, and possibly the enemy, getting DELETE-ed.
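To make the “events are SQL statements” idea concrete, here is a toy game tick in Python. To keep the example self-contained, it uses the standard-library `sqlite3` module as a stand-in for DuckDB; the three-table schema and the `make_world()` and `tick()` functions are hypothetical and not taken from the DuckDB-Doom project.

```python
import sqlite3


def make_world() -> sqlite3.Connection:
    """Hypothetical schema: walls, enemies and bullets all live in tables."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE walls   (x INTEGER, y INTEGER);
        CREATE TABLE enemies (id INTEGER PRIMARY KEY, x INTEGER, y INTEGER);
        CREATE TABLE bullets (id INTEGER PRIMARY KEY, x INTEGER, y INTEGER,
                              dx INTEGER, dy INTEGER);
    """)
    return db


def tick(db: sqlite3.Connection) -> None:
    """Advance the world one step using nothing but SQL statements."""
    # Movement is just an UPDATE
    db.execute("UPDATE bullets SET x = x + dx, y = y + dy")
    # Record bullet/enemy collisions first so both sides can be removed
    db.execute("""
        CREATE TEMP TABLE hits AS
        SELECT b.id AS bullet_id, e.id AS enemy_id
        FROM bullets b JOIN enemies e ON b.x = e.x AND b.y = e.y
    """)
    db.execute("DELETE FROM enemies WHERE id IN (SELECT enemy_id FROM hits)")
    db.execute("DELETE FROM bullets WHERE id IN (SELECT bullet_id FROM hits)")
    db.execute("DROP TABLE hits")
    # Bullets that fly into a wall get DELETE-d too
    db.execute("""
        DELETE FROM bullets WHERE EXISTS (
            SELECT 1 FROM walls w WHERE w.x = bullets.x AND w.y = bullets.y)
    """)
    db.commit()


db = make_world()
db.execute("INSERT INTO walls VALUES (3, 0)")
db.execute("INSERT INTO enemies (x, y) VALUES (1, 0)")
db.executemany("INSERT INTO bullets (x, y, dx, dy) VALUES (?, ?, ?, ?)",
               [(0, 0, 1, 0), (2, 0, 1, 0), (0, 5, 1, 0)])
tick(db)
print(db.execute("SELECT x, y FROM bullets").fetchall())  # [(1, 5)]
```

After one tick, the first bullet has taken out the enemy, the second has hit the wall, and only the third is still in flight. The temporary `hits` table exists so that both the bullet and the enemy can be deleted from the same collision.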

The role of JavaScript in this Doom clone is reduced to gluing the chunks of SQL together and handling sprite Z-buffer checks as well as keyboard input. The result is a glorious ASCII-based game of Doom which you can experience yourself with the DuckDB-Doom project on GitHub. While not very practical, it was absolutely educational, showing that not only is it fun to make domain-specific languages do things they were never designed for, but you also learn a lot about them along the way.

Thanks to [Particlem] for the tip.


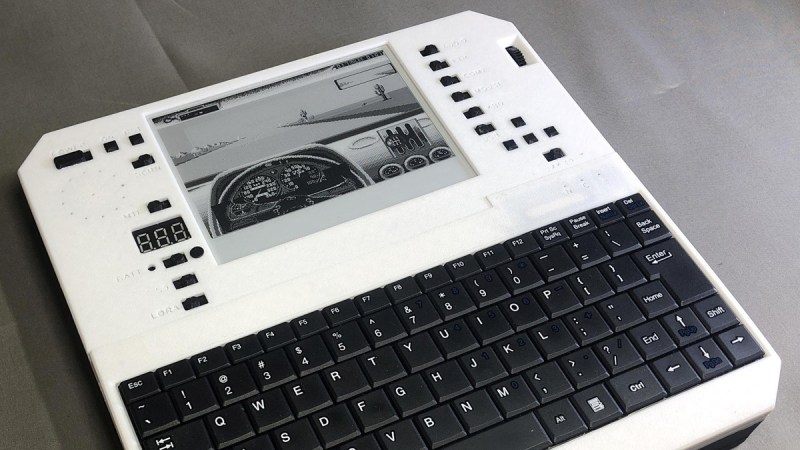

When was the last time you saw a computer actually outlast your weekend trip, and then some? Enter the Evertop, a portable IBM XT emulator powered by an ESP32 that doesn’t just flirt with low power; it basically lives off the grid. Designed by [ericjenott], a hacker with a love for old-school computing and a survivalist flair, this machine emulates 1980s PCs, runs DOS, Windows 3.0, and even MINIX, and stays powered for hundreds of hours. With a built-in solar panel and a 20,000 mAh battery, it’s an old-school dream in a new-school shell.

What makes this build truly outstanding, besides the specs, is how it survives with no access to external power. It sports a 5.83-inch e-ink display that consumes zilch when static, hardware switches to cut off unused peripherals (because why waste power on a serial port you’re not using?), and a solar panel that delivers 700 mA in full sun. And yes, you guessed it: it can hibernate to disk and resume where you left off. The Evertop is both a tribute to 1980s computing and a serious tool for remote hacker camps.
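The battery and solar figures also allow a quick back-of-the-envelope check on the charging side of the power budget. Assuming, optimistically and not per the original post, that the panel’s full 700 mA reaches the battery at the battery’s own voltage with no conversion or charging losses, refilling an empty 20,000 mAh pack takes roughly:

```latex
t_\text{charge} \approx \frac{Q_\text{battery}}{I_\text{panel}}
  = \frac{20\,000\ \text{mAh}}{700\ \text{mA}} \approx 28.6\ \text{h of full sun}
```

Real-world losses and partial or cloudy sun would stretch that out considerably, which is why the near-zero draw of a static e-ink screen matters so much.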

For the full breakdown, the original post has everything from firmware details to hibernation circuitry. Whether you’re a retro purist or an off-grid prepper, the Evertop deserves a place on your bench. Check out [ericjenott]’s project on GitHub.


This week, Jonathan Bennett and Randal Schwartz chat with Allen Firstenberg about Google’s AI plans, vibe coding, and OpenAI! What’s the deal with agentic AI, how close are we to Star Trek, and where does Open Source fit in? Watch to find out!

https://prisoner.com/
http://spiders.com/
https://js.langchain.com/docs/introduction/

Did you know you can watch the live recording of the show right on our YouTube Channel? Have someone you’d like us to interview? Let us know, or contact the guest and have them contact us! Take a look at the schedule here.

Direct Download in DRM-free MP3.

If you’d rather read along, here’s the transcript for this week’s episode.


Theme music: “Newer Wave” Kevin MacLeod (incompetech.com)

Licensed under Creative Commons: By Attribution 4.0 License


Drumboy and Synthgirl from Randomwaves are a pair of compact electronic instruments: a drum machine and a synthesiser. They are commercial products that were launched on Kickstarter, and if you’re in the market for such a thing you can buy one for yourself. What’s made them interesting to us here at Hackaday, though, isn’t their musical capabilities; it’s that Randomwaves has honoured its commitment to release them as open source in their entirety.

So for your download, you get everything you need to build a pair of rather good 24-bit synthesisers based on the STM32 family of microcontrollers. We’re guessing that few of you will build your own when it’s easier to just buy one from Randomwaves, but we expect this open-sourcing will lead to interesting new features and extensions from the community of owners.

It will be interesting to watch how this progresses, because of course with the files out there, now anyone can produce and market a clone. Will AliExpress now be full of knock-off Drumboys and Synthgirls? It’s a problem we’ve looked at in the past with respect to closed-source projects, and doubtless there will be enterprising electronics shops eyeing this one up. By our observation, though, it’s the projects with cheaper bills of materials that suffer most from clones, so perhaps that higher-end choice of parts will work in their favour.

Either way we look forward to more open-source from Randomwaves in the future, and if you’d like to buy either instrument you can go to their website.

Thanks [Eilís] for the tip.


It’s amazing how quickly medical science made radiography one of its main diagnostic tools. Medicine had barely emerged from its Dark Age of bloodletting and the four humours when X-rays were discovered, and the realization that the internal structure of our bodies could cast shadows of this mysterious “X-Light” opened up diagnostic possibilities that went far beyond the educated guesswork and exploratory surgery doctors had relied on for centuries.

The problem is, X-rays are one of those things that you can’t see, feel, or smell, at least mostly; X-rays cause visible artifacts in some people’s eyes, and the pencil-thin beam of a CT scanner can create a distinct smell of ozone when it passes through the nasal cavity — ask me how I know. But to be diagnostically useful, the varying intensities created by X-rays passing through living tissue need to be translated into an image. We’ve already looked at how X-rays are produced, so now it’s time to take a look at how X-rays are detected and turned into medical miracles.

Taking Pictures

For over a century, photographic film was the dominant way to detect medical X-rays. In fact, years before Wilhelm Conrad Röntgen’s first systematic study of X-rays in 1895, photographic plates fogged during experiments with a Crookes tube were among the first indications of their existence. But it wasn’t until Röntgen convinced his wife to hold her hand between one of his tubes and a photographic plate to create the first intentional medical X-ray that the full potential of radiography could be realized.

“Hand mit Ringen” by W. Röntgen, December 1895. Public domain.


The chemical mechanism that makes photographic film sensitive to X-rays is essentially the same as the process that makes light photography possible. X-ray film is made by depositing a thin layer of photographic emulsion on a transparent substrate, originally celluloid but later polyester. The emulsion is a mixture of high-grade gelatin, a natural polymer derived from animal connective tissue, and silver halide crystals. Incident X-ray photons ionize the halogens, creating an excess of electrons within the crystals to reduce the silver halide to atomic silver. This creates a latent image on the film that is developed by chemically converting sensitized silver halide crystals to metallic silver grains and removing all the unsensitized crystals.

Other than in the earliest days of medical radiography, direct X-ray imaging onto photographic emulsions was rare. While photographic emulsions can be exposed by X-rays, it takes a lot of energy to get a good image with proper contrast, especially on soft tissues. This became a problem as more was learned about the dangers of exposure to ionizing radiation, leading to the development of screen-film radiography.

In screen-film radiography, X-rays passing through the patient’s tissues are converted to light by one or more intensifying screens. These screens are made from plastic sheets coated with a phosphorescent material that glows when exposed to X-rays. Calcium tungstate was common back in the day, but rare earth phosphors like gadolinium oxysulfide became more popular over time. Intensifying screens were attached to the front and back covers of light-proof cassettes, with double-emulsion film sandwiched between them; when exposed to X-rays, the screens would glow briefly and expose the film.

By turning one incident X-ray photon into thousands or millions of visible light photons, intensifying screens greatly reduce the dose of radiation needed to create diagnostically useful images. That’s not without its costs, though, as the phosphors tend to spread out each X-ray photon across a physically larger area. This results in a loss of resolution in the image, which in most cases is an acceptable trade-off. When more resolution is needed, single-screen cassettes can be used with one-sided emulsion films, at the cost of increasing the X-ray dose.

Wiggle Those Toes

Intensifying screens aren’t the only place where phosphors are used to detect X-rays. Early on in the history of radiography, doctors realized that while static images were useful, continuous images of body structures in action would be a fantastic diagnostic tool. Originally, fluoroscopy was performed directly, with the radiologist viewing images created by X-rays passing through the patient onto a phosphor-covered glass screen. This required an X-ray tube engineered to operate with a higher duty cycle than radiographic tubes and had the dual disadvantages of much higher doses for the patient and the need for the doctor to be directly in the line of fire of the X-rays. Cataracts were enough of an occupational hazard for radiologists that safety glasses using leaded glass lenses were a common accessory.

How not to test your portable fluoroscope. The X-ray tube is located in the upper housing, while the image intensifier and camera are below. The machine is generally referred to as a “C-arm” and is used in the surgery suite and for bedside pacemaker placements. Source: Nightryder84, CC BY-SA 3.0.


One ill-advised spin-off of medical fluoroscopy was the shoe-fitting fluoroscopes that started popping up in shoe stores in the 1920s. Customers would stick their feet inside the machine and peer at a fluorescent screen to see how well their new shoes fit. It was probably not terribly dangerous for the once-a-year shoe shopper, but pity the shoe salesman who had to peer directly into a poorly regulated X-ray beam eight hours a day to show every Little Johnny’s mother how well his new Buster Browns fit.

As technology improved, image intensifiers replaced direct screens in fluoroscopy suites. Image intensifiers were vacuum tubes with a large input window coated with a fluorescent material such as zinc-cadmium sulfide or sodium-activated cesium iodide. The phosphors convert X-rays passing through the patient to visible light photons, which are immediately converted to photoelectrons by a photocathode made of cesium and antimony. The electrons are focused by coils and accelerated across the image intensifier tube by a high-voltage field on a cylindrical anode. The electrons pass through the anode and strike a phosphor-covered output screen, which is much smaller in diameter than the input screen. The image formed by the incident X-ray photons is greatly amplified by the image intensifier, making a brighter image with a lower dose of radiation.
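The brightness boost of an image intensifier is commonly split into two factors: the flux gain from accelerating the photoelectrons, and the minification gain from squeezing the image onto a smaller output screen, which scales with the ratio of the two screen areas. A minimal Python sketch of that textbook relationship follows; the 23 cm input screen, 2.5 cm output screen, and flux gain of 50 are illustrative assumptions, not the specs of any particular tube.

```python
def minification_gain(input_diameter_cm: float, output_diameter_cm: float) -> float:
    """Brightness gain from concentrating the image onto a smaller screen (area ratio)."""
    return (input_diameter_cm / output_diameter_cm) ** 2


def brightness_gain(input_diameter_cm: float, output_diameter_cm: float,
                    flux_gain: float) -> float:
    """Total brightness gain = minification gain x flux gain from electron acceleration."""
    return minification_gain(input_diameter_cm, output_diameter_cm) * flux_gain


# Illustrative numbers only: a 23 cm input screen imaged onto a 2.5 cm output screen
print(round(brightness_gain(23.0, 2.5, flux_gain=50.0)))
```

Because the minification term goes with the square of the diameter ratio, most of the gain in this example comes from geometry rather than from the electron acceleration.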

Originally, the radiologist viewed the output screen using a microscope, which at least put a little more hardware between his or her eyeball and the X-ray source. Later, mirrors and lenses were added to project the image onto a screen, moving the doctor’s head out of the direct line of fire. Later still, analog TV cameras were added to the optical path so the images could be displayed on high-resolution CRT monitors in the fluoroscopy suite. Eventually, digital cameras and advanced digital signal processing were introduced, greatly streamlining the workflow for the radiologist and technologists alike.

Get To The Point

So far, all the detection methods we’ve discussed fall under the general category of planar detectors, in that they capture an entire 2D shadow of the X-ray beam after having passed through the patient. While that’s certainly useful, there are cases where the dose from a single, well-defined volume of tissue is needed. This is where point detectors come into play.

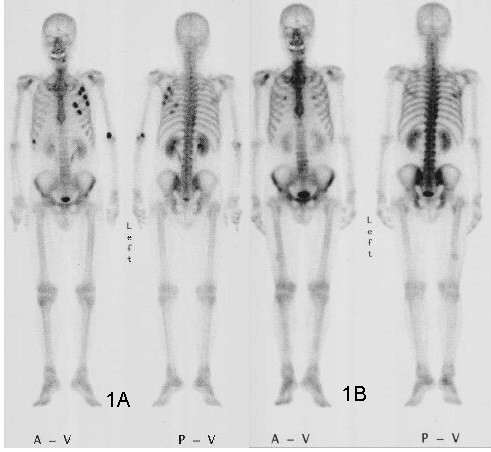

Nuclear medicine image, or scintigraph, of metastatic cancer. 99mTc accumulates in lesions in the ribs and elbows (A), which are mostly resolved after chemotherapy (B). Note the normal accumulation of isotope in the kidneys and bladder. Kazunari Mado, Yukimoto Ishii, Takero Mazaki, Masaya Ushio, Hideki Masuda and Tadatoshi Takayama, CC BY-SA 2.0.


In medical X-ray equipment, point detectors often rely on some of the same gas-discharge technology that DIYers use to build radiation detectors at home. Geiger tubes and ionization chambers measure the current created when X-rays ionize a low-pressure gas inside an electric field. Geiger tubes generally use a much higher voltage than ionization chambers, and tend to be used more for radiological safety, especially in nuclear medicine applications, where radioisotopes are used to diagnose and treat diseases. Ionization chambers, on the other hand, were often used as a sort of autoexposure control for conventional radiography. The chambers were placed behind the film cassette holders in the exam tables of X-ray suites and wired into the control panels of the X-ray generators. When enough radiation had passed through the patient, the film, and the cassette into the ion chamber to yield a correct exposure, the generator would shut off the X-ray beam.
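The autoexposure idea boils down to integrating the ion chamber’s output until it reaches a preset charge. Here is a toy simulation in Python under stated assumptions: a constant chamber current, 1 ms sampling, and hypothetical current and target values. It also includes a backup timer, since real generators have one so that a fault can’t leave the beam on indefinitely. It is not modeled on any specific generator.

```python
def exposure_time_ms(chamber_current_na: float, target_charge_pc: float,
                     step_ms: float = 1.0, backup_timer_ms: float = 500.0) -> float:
    """Simulate autoexposure control by integrating ion-chamber current.

    Returns the time (ms) at which the generator would cut the beam, either
    because enough charge has accumulated or because the backup timer expired.
    """
    charge_pc = 0.0
    t_ms = 0.0
    while charge_pc < target_charge_pc and t_ms < backup_timer_ms:
        charge_pc += chamber_current_na * step_ms  # nA x ms = pC
        t_ms += step_ms
    return t_ms


# A thicker patient attenuates more, so less current reaches the chamber
# and the exposure runs longer before cutoff (hypothetical values)
for current_na in (20.0, 10.0, 5.0):
    print(current_na, "nA ->", exposure_time_ms(current_na, target_charge_pc=500.0), "ms")
```

The appeal of the scheme is that the operator sets the desired film density once, and the patient’s own attenuation decides how long the beam stays on.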

Another kind of point detector for X-rays and other kinds of radiation is the scintillation counter. These use a crystal, often cesium iodide or sodium iodide doped with thallium, that releases a few visible light photons when it absorbs ionizing radiation. The faint pulse of light is greatly amplified by one or more photomultiplier tubes, creating a pulse of current proportional to the amount of radiation. Nuclear medicine studies use a device called a gamma camera, which has a hexagonal array of PM tubes positioned behind a single large crystal. A patient is injected with a radioisotope such as the gamma-emitting technetium-99m, which in a bone scan accumulates mainly in the bones. The emitted gamma rays are collected by the gamma camera, which derives positional information from the relative intensity of each light pulse at the PM tubes, slowly building a ghostly skeletal map of the patient by measuring where the 99mTc accumulated.
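In the classic gamma camera design (often called an Anger camera), the position of each flash in the crystal is estimated as a signal-weighted centroid of the PM tube positions: tubes closer to the flash see more light. A minimal Python sketch follows; the three-tube layout and the signal values are made up for illustration, and real cameras add energy windowing and linearity corrections on top of this.

```python
from typing import Sequence, Tuple


def anger_position(tubes: Sequence[Tuple[float, float]],
                   signals: Sequence[float]) -> Tuple[float, float]:
    """Estimate where a scintillation occurred from PM tube signal strengths.

    Weighting each tube's (x, y) position by its signal gives a centroid
    close to the point where the gamma ray was absorbed.
    """
    total = sum(signals)
    if total <= 0:
        raise ValueError("no light detected")
    x = sum(tx * s for (tx, _), s in zip(tubes, signals)) / total
    y = sum(ty * s for (_, ty), s in zip(tubes, signals)) / total
    return x, y


# Three hypothetical tubes; this flash is nearest the right-hand tube
tubes = [(-1.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
print(anger_position(tubes, [1.0, 3.0, 0.0]))
```

Because the estimate interpolates between tubes, the camera resolves positions much finer than the spacing of the PM tubes themselves.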

Going Digital

Despite dominating the industry for so long, the days of traditional film-based radiography were clearly numbered once solid-state image sensors began appearing in the 1980s. While it was reliable and gave excellent results, film development required a lot of infrastructure and expense, and resulted in bulky films that required a lot of space to store. The savings from doing away with all the trappings of film-based radiography, including the darkrooms, automatic film processors, chemicals, silver recycling, and often hundreds of expensive film cassettes, are largely what drove the move to digital radiography.

After briefly flirting with phosphor plate radiography, where a sensitized phosphor-coated plate was exposed to X-rays and then “developed” by a special scanner before being recharged for the next use, radiology departments embraced solid-state sensors and fully digital image capture and storage. Solid-state sensors come in two flavors: indirect and direct. Indirect sensor systems use a large matrix of photodiodes on amorphous silicon to measure the light given off by a scintillation layer directly above it. It’s basically the same thing as a film cassette with intensifying screens, but without the film.

Direct sensors, on the other hand, don’t rely on converting the X-ray into light. Rather, a large flat selenium photoconductor is used; X-rays absorbed by the selenium cause electron-hole pairs to form, which migrate to a matrix of fine electrodes on the underside of the sensor. The current at each pixel is proportional to the amount of radiation received, and the pixels can be read out one by one to build up a digital image.


Sometimes in fantasy fiction, you don’t want to explain something that seems inexplicable, so you throw your hands up and say, “A wizard did it.” Sometimes in astronomy, instead of a wizard, the answer is dark matter (DM). If you are interested in astronomy, you’ve probably heard that dark matter solves the problem of the “missing mass” needed to explain galactic rotation curves and the motion of galaxies in clusters.

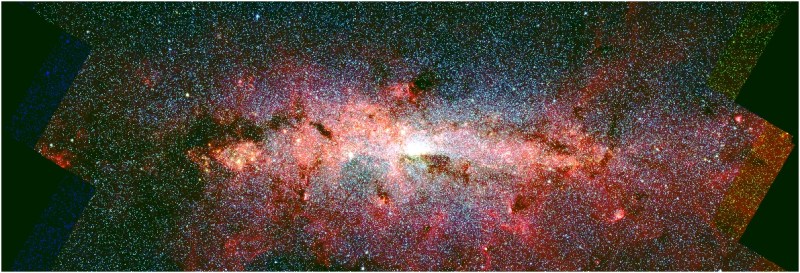

Now [Pedro De la Torre Luque] and others are proposing that DM can solve another pair of long-standing galactic mysteries: ionization of the central molecular zone (CMZ) in our galaxy, and mysterious 511 keV gamma-rays.

The Central Molecular Zone is a region near the heart of the Milky Way with a very high density of interstellar gas: around sixty million times the mass of our Sun, in a volume 1600 to 1900 light-years across. It happens to be more ionized than it ought to be, and ionized very evenly across its volume. As astronomers cannot identify (or at least agree on) the mechanism behind this ionization, the CMZ ionization is mystery number one.

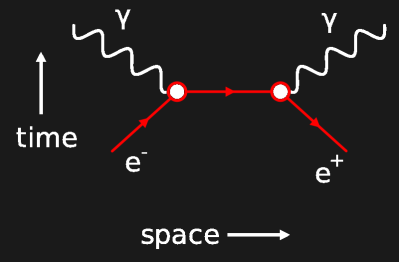

Feynman diagram of electron-positron annihilation, showing the characteristic gamma-ray emission.


Mystery number two is a diffuse glow of gamma rays seen in the same part of the sky as the CMZ, which we know as the constellation Sagittarius. The emissions correspond to an energy of 511 keV, which is a very interesting number: it’s what you get when an electron annihilates with its antimatter counterpart, the positron. Again, there’s no universally accepted explanation for these emissions.
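The 511 keV figure falls straight out of E = mc²: when an electron and a positron annihilate at rest, each of the two resulting gamma photons carries the rest energy of one electron. A quick Python check using the CODATA 2018 values of the constants:

```python
# CODATA 2018 values (c and e are exact by definition in the SI)
ELECTRON_MASS_KG = 9.1093837015e-31
SPEED_OF_LIGHT_M_S = 299_792_458.0
ELEMENTARY_CHARGE_C = 1.602176634e-19  # also the number of joules per electronvolt


def rest_energy_kev(mass_kg: float) -> float:
    """Rest energy E = m c^2, converted from joules to keV."""
    return mass_kg * SPEED_OF_LIGHT_M_S ** 2 / ELEMENTARY_CHARGE_C / 1_000


# Each photon from an at-rest annihilation carries one electron's rest energy
print(f"{rest_energy_kev(ELECTRON_MASS_KG):.1f} keV")
```

That sharp line at one electron rest mass is why a 511 keV glow is read as a fingerprint of positrons meeting electrons.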

So [Pedro De la Torre Luque] and team asked themselves: “What if a wizard did it?” and set about trying to solve the mystery using dark matter. As it turns out, computer models including a form of light dark matter (called sub-GeV DM in the paper, after the particles’ rest mass) can explain both phenomena within the margins of error.

In the model, the DM particles annihilate to form electron-positron pairs. In the dense interstellar gas of the CMZ, those positrons quickly annihilate with electrons to produce the observed 511 keV gamma rays. The energy released in the process is enough to produce the observed ionization, and even replicates the very flat ionization profile seen across the CMZ. (Any other proposed ionization source tends to radiate out from a point, producing an uneven profile.) Even better, this sort of light dark matter is consistent with cosmological observations and, unlike some heavier candidate particles, has not been ruled out by Earth-based dark matter detectors.

Further observations will help confirm or deny these findings, but it seems dark matter is truly the gift that keeps on giving for astrophysicists. We eagerly await what other unsolved questions in astronomy can be answered by it next, but it leaves us wondering how lazy the universe’s game master is if the answer to all our questions is: “A wizard did it.”

We can’t talk about dark matter without remembering [Vera Rubin].


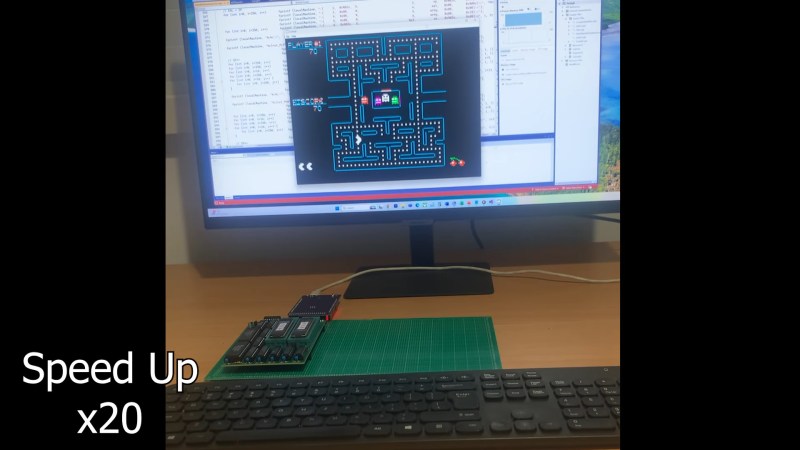

Building a Commodore 64 is among the easier projects for retrocomputing fans to tackle. That’s because the C64’s core chipset does most of the heavy lifting; source those and you’re probably 80% of the way there. But what if you can’t find those chips, or if you want more of a challenge than plugging and chugging? Are you out of luck?

Hardly. The video below from [DrMattRegan] is the first in a series on his scratch-built C64 that doesn’t use the core chipset, and it looks pretty promising. This video concentrates on building a replacement for the 6502 microprocessor — actually the 6510, but close enough — using just a couple of EPROMs, some SRAM chips, and a few standard logic chips to glue everything together. He uses the EPROMs as a “rulebook” that contains the code to emulate the 6502 — derived from his earlier Turing 6502 project — and the SRAM chips as a “notebook” for scratch memory and registers to make a Turing-complete random access machine.
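Using memory chips as a “rulebook” works because any piece of combinational logic can be precomputed into a lookup table. As a hypothetical illustration of that general technique, and not [DrMattRegan]’s actual ROM layout, here is Python that fills a 512-entry “EPROM” with 4-bit add-with-carry results and then adds two bytes using nothing but two table lookups, the way a ROM-based ALU might.

```python
from typing import List, Tuple


def build_adder_rom() -> List[int]:
    """Precompute a 4-bit adder. Address = a (4 bits) | b (4 bits) | carry_in (1 bit)."""
    rom = [0] * 512  # 9 address bits -> 512 entries
    for a in range(16):
        for b in range(16):
            for carry_in in range(2):
                address = (a << 5) | (b << 1) | carry_in
                rom[address] = a + b + carry_in  # bits 0-3: sum, bit 4: carry out
    return rom


def add8(rom: List[int], x: int, y: int) -> Tuple[int, int]:
    """Add two bytes using only ROM lookups, one nibble at a time."""
    low = rom[((x & 0xF) << 5) | ((y & 0xF) << 1)]
    high = rom[((x >> 4) << 5) | ((y >> 4) << 1) | (low >> 4)]
    result = ((high & 0xF) << 4) | (low & 0xF)
    return result, high >> 4  # (8-bit sum, carry flag)


rom = build_adder_rom()
print(add8(rom, 200, 100))  # (44, 1): 300 wraps to 44 with the carry set
```

The same trick scales to any instruction behavior you can tabulate, which is what makes a pile of EPROMs and SRAM a workable stand-in for a CPU, at the cost of speed.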

[DrMatt] has made good progress so far, with the core 6502 CPU built on a PCB and able to run the Apple II version of Pac-Man as a benchmark. We’re looking forward to the rest of this series, but in the meantime, a look back at his VIC-less VIC-20 project might be informative.

Thanks to [Clint] for the tip.


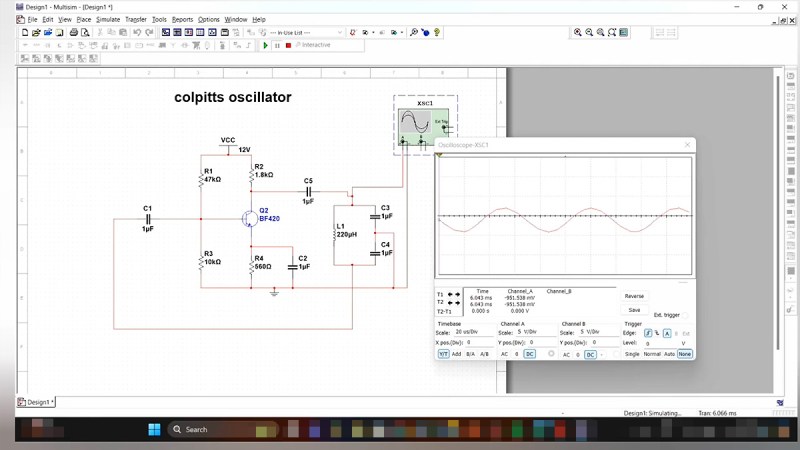

If you’ve ever fumbled through circuit simulation and ended up with a flatline instead of a sine wave, this video from [saisri] might just be the fix. In this walkthrough she demonstrates simulating a Colpitts oscillator using NI Multisim 14.3 – a deceptively simple analog circuit known for generating stable sine waves. Her video not only shows how to place and wire components, but it demonstrates why precision matters, even in virtual space.

You’ll notice the emphasis on wiring accuracy at multi-node junctions, something many tutorials skim over. [saisri] points out that a single misconnected node in Multisim can cause the circuit to output zilch. She guides viewers step-by-step, starting with component selection via the “Place > Components” dialog, through to running the simulation and interpreting the sine wave output on Channel A. The manual included at the end of the video is a neat bonus, bundling theory, waveform visuals, and circuit diagrams into one handy PDF.
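As a sanity check on what the simulated sine wave should look like, the ideal Colpitts oscillation frequency is set by the inductor resonating with the series combination of the two tank capacitors: f = 1 / (2π√(L·C1C2/(C1+C2))). Here is that formula in Python; the 10 µH and 100 nF values are arbitrary examples, not the components used in [saisri]’s circuit.

```python
import math


def colpitts_frequency_hz(inductance_h: float, c1_f: float, c2_f: float) -> float:
    """Ideal Colpitts tank frequency: L resonating with C1 and C2 in series."""
    c_series = c1_f * c2_f / (c1_f + c2_f)
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * c_series))


# Example values: 10 uH with two 100 nF capacitors (50 nF in series)
print(f"{colpitts_frequency_hz(10e-6, 100e-9, 100e-9) / 1e3:.1f} kHz")
```

A working circuit, simulated or real, will land a little off this figure because the transistor’s own capacitances load the tank, but if the measured frequency is wildly different, a miswired node is a good first suspect.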

If you’re into precision hacking, retro analogue joy, or just love watching a sine wave bloom onscreen, this is worth your time. You can watch the original video here.


Using steam to produce electricity or perform work via steam turbines has been a thing for a very long time. Today it is still exceedingly common, with the steam generated by burning something (e.g. coal, wood), by using spicy rocks (nuclear fission), or from stored thermal energy (e.g. molten salt). That said, we no longer use steam the way we did in the 19th century; supercritical and pressurized loops, for example, allow for far higher efficiencies. As covered in a recent video by [Ryan Inis], a newer alternative to water is supercritical carbon dioxide (CO2), which could boost thermal efficiency even further.

In the video, [Ryan Inis] goes over the basics of the supercritical fluid state of CO2, which is reached above the critical point of 31°C and 73.8 bar (7.38 MPa). As a working fluid in a thermal power plant, it offers a number of potential advantages, such as its higher density allowing for smaller turbine blades, and the potential for higher heat extraction. Similar gains are seen with the shift from boiling to pressurized water loops in BWR and PWR nuclear plants, and in gas- and salt-cooled reactors such as the HTR-PM and MSRs, which can reach far higher efficiencies.
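The critical points make the contrast with water easy to see. A trivial Python check using the commonly cited critical points of CO2 (about 31.0°C and 73.8 bar) and water (about 373.9°C and 220.6 bar); the `is_supercritical()` helper and the example operating points are illustrative only.

```python
# Approximate critical points: (temperature in deg C, pressure in bar)
CRITICAL_POINTS = {
    "CO2": (31.0, 73.8),
    "H2O": (373.9, 220.6),
}


def is_supercritical(fluid: str, temp_c: float, pressure_bar: float) -> bool:
    """True if the fluid is above both its critical temperature and critical pressure."""
    t_crit, p_crit = CRITICAL_POINTS[fluid]
    return temp_c > t_crit and pressure_bar > p_crit


# The same warm, moderately pressurized state is supercritical for CO2 but not for water
print(is_supercritical("CO2", 35.0, 80.0), is_supercritical("H2O", 35.0, 80.0))
```

Water needs roughly three times the pressure and more than ten times the temperature in °C to get there, which is a big part of why supercritical steam hardware is so demanding.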

A 2019 article in Power goes over some of the details, including the different power cycles that use this supercritical fluid, such as various Brayton cycles (some with extra energy recovery) and the Allam cycle. Of course, there’s no such thing as a free lunch: corrosion issues are still being worked out, and despite the claims made in the video, erosion is also an issue with supercritical CO2 as a working fluid. That said, it’s in many ways less of an engineering challenge than supercritical steam generators, due to the far more extreme critical point parameters of water.

If these issues can be overcome, supercritical CO2 could provide some interesting efficiency boosts for thermal plants. The caveat is that nobody is likely to retrofit existing plants, supercritical steam (coal) plants already exist, and new nuclear plant designs are increasingly moving towards gas, salt, and even liquid-metal coolants. Still, secondary coolant loops (following the typical steam generator) could conceivably use CO2 instead of water where appropriate.


In the dark ages, before iOS and Android phones became ubiquitous, there was the PDA. These handheld computers acted as simple companions to a computer and could often handle calendars, email, notes and more. Their demise was spelled by the smartphone, but the nostalgia for a simple handheld, and for romanticizing the ’90s and 2000s, is still there. Fortunately for the nostalgic among our readers, [Ashtf] decided to give us a modern take on the classic PDA.

The device is powered by an ESP32-S3 connected to two PCBs in a mini-laptop clamshell format. It features two displays: a main e-ink panel for slow-paced interaction, and a little I2C AMOLED for tasks that demand a higher refresh rate than e-ink can provide. Next to the e-ink display is a capacitive slider. For input, there is also a QWERTY keyboard with black resin-printed keycaps; white air-dry clay is pressed into the embossed lettering and sealed with nail polish to create a professional-looking, double-shot-style keycap. The switches are the metal dome kind, sitting on the main PCB. The clamshell is a rather stylish clear resin that showcases the device’s internals, and it even features a quick-change battery cover!

The device’s “operating system” is truly where the magic happens. It features several apps, including a tasks app, a file wizard, and a text app. The main purpose of the device is on-the-go note-taking, so a lot of care has gone into the excellent-looking text app! It also features a docked mode which displays tasks and the time when it detects that a USB-C cable is connected. Future plans include a calendar, desktop sync, and even Bluetooth keyboard compatibility. The device’s previous iteration is on GitHub, with plans to expand functionality and availability, so stay tuned for more coverage!

This is not the first time we have covered [Ashtf’s] PDA journey, and we are happy to see the revisions being made!


Back in the day, one of the few reasons to prefer compact cassette tape to vinyl was that you could record onto it at home with very good fidelity. Sure, if you had the scratch, you could go out and get a small batch of records made from that tape, but the machinery to do it was expensive and not always easy to come by, depending where you lived. That goes double today, but we’re in the middle of a vinyl renaissance! [ronald] wanted to make records, but was unable to find a lathe, so he decided to take matters into his own hands and build his own vinyl record cutting lathe.

![photograph of [ronald's] setup](https://hackaday.com/wp-content/uploads/2025/04/groovy_lathe.png?w=301) [ronald’s] record cutting lathe looks quite professional.

It seems like it should be a simple problem, at least in concept: wiggle an engraving needle to scratch grooves in plastic. Of course for a stereo record, the wiggling needs to be two-axis, and for stereo HiFi you need that wiggling to be very precise over a very large range of frequencies (7 Hz to 50 kHz, to match the pros). Then of course there’s the question of how you’re controlling the wiggling of this engraving needle. (In this case, it’s through a DAC, so technically this is a CNC hack.) As often happens, once you get down to brass tacks (or diamond styluses, as the case may be) the “simple” problem becomes a major project.
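The two-axis wiggle for stereo is the standard 45/45 scheme: each channel drives the stylus along its own axis at 45° to the record surface, so in-phase (mono) content becomes side-to-side motion and out-of-phase content becomes up-and-down motion. A minimal Python sketch of that coordinate rotation follows; it is a generic illustration rather than [ronald]’s DAC code, and which sign of the vertical axis counts as “up” is a convention assumed here.

```python
import math
from typing import List, Tuple


def lr_to_stylus(left: List[float], right: List[float]) -> List[Tuple[float, float]]:
    """Rotate L/R samples by 45 degrees into (lateral, vertical) stylus motion.

    Lateral carries the sum (mono) signal, vertical the difference signal.
    The sign of the vertical axis is an assumed convention.
    """
    scale = 1.0 / math.sqrt(2.0)
    return [((left_s + right_s) * scale, (left_s - right_s) * scale)
            for left_s, right_s in zip(left, right)]


# Mono (L == R) moves the stylus purely sideways; opposite phase moves it purely vertically
print(lr_to_stylus([1.0, 1.0], [1.0, -1.0]))
```

This is also why mono records play fine on stereo cartridges: a purely lateral groove simply reads as identical left and right channels.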

The build log discusses some of the challenges faced. For example, [ronald] started with locally made polycarbonate disks that weren’t quite up to the job, so he has resigned himself to purchasing professional vinyl blanks. The power to the cutting head seems to have kept creeping up with each revision: the final version, pictured here, has two 50 W tweeters driving the needle.

That necessitated a better amplifier, which helped improve frequency response. So it goes; the whole project took [ronald] fourteen months, but we’d have to say it looks like it was worth it. It sounds worth it, too: [ronald] provides audio samples, so check one out below. Every garage band in Queensland is going to be beating a path to [ronald’s] door to get their jam sessions cut into “real” records, unless they agree that physical media deserved to die.

https://hackaday.com/wp-content/uploads/2025/04/01_Test-Cut-6th-April-2025-Move-For-Me.wav

Despite the supposedly well-deserved death of physical media, this isn’t the first record cutter we have featured. If you’d rather copy records than cut them, we have that too. There’s also the other kind of vinyl cutter, which might be more your speed.

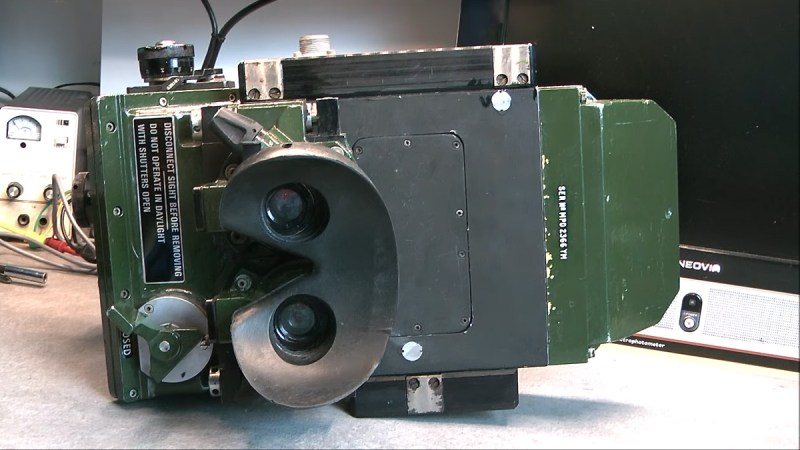

We all know periscopes are used for observation where there’s no direct line of sight, but did you know they can also let you peer through history? That’s what [msylvain59] documented when he picked up a British military night vision periscope, snagged from a German surplus shop for just 49 euros. Despite its Cold War vintage and questionable condition, the unit begged for a teardown.

The periscope is a 15-kilo beast: industrial metal, cryptic shutter controls, and twin optics that haven’t seen action since flares were fashionable. One photo amplifier tube flickers to greenish life; the other’s deader than a disco ball in 1993. With no documentation, unclear symbols, and adjustment dials from hell, the teardown feels more like deciphering a British MoD fever dream than a Sunday project. And of course, everything’s imperial.

Despite corrosion, mysterious bulbs, and non-functional shutters, [msylvain59] uncovers a fascinating mix of precision engineering and Cold War paranoia. There’s a thrill in tracing light paths through mil-spec lenses (note the number of graticules etched into the optics) and wondering what secrets they once guarded. This relic might not see well anymore, but it sure makes us look deeper. Let us know your thoughts in the comments or share your unusual wartime relics below.

As computers have gotten smaller and less expensive over the years, so have their components. While many of us got our start in the age of through-hole PCBs, this size reduction has led to more and more projects that require surface-mount components and their unique set of tools. These tools tend to be more elaborate than what through-hole construction needs, but [Tobi] has a new project that goes into some detail about how to build surface-mount projects without breaking the bank.

The project here is interesting in its own right, too: a display module upgrade for the classic Game Boy based on an RP2350B microcontroller. To get all of the components onto a PCB that actually fits into the original case, though, surface-mount is required. For that, [Tobi] is using a small USB-powered hotplate to reflow the solder, a Pinecil soldering iron, and a healthy amount of flux. The hotplate is good enough for a small PCB like this, and any solder bridges can be quickly cleaned up with some extra flux and a quick pass with the iron.

The build goes into a lot of detail about how a process like this works, so if you’ve been hesitant to start working with surface-mount components, this might be a good introduction. Not only that, but we also appreciate the restoration of the retro handheld, complete with some new features that don’t disturb the original look of the console. Another benefit of using the RP2350 for this build is that it’s a lot simpler than using an FPGA, but there are perks to taking the more complicated route as well.

Over the course of more than a decade, physical media has gradually vanished from public view. Once, nearly every computer apart from ultrabooks had an optical drive, but these days even computer cases that support an internal optical drive are rare. Rather than manuals and drivers included on a data CD, you now get a QR code for an online download. In the home, DVD and Blu-ray (BD) players have given way to smart TVs with integrated content streaming apps for various services. Music and similar audio content is enjoyed via smart speakers and smart phones that stream it from online services. Even books are now commonly read on screens rather than printed on paper.

With these changes, stores selling physical media have mostly shuttered, and much audiovisual and software content is no longer pressed on discs or printed. This situation might lead one to believe that the end of physical media is nigh, but the contradiction comes in the form of a strong revival, primarily of formats that used to be considered firmly obsolete. While CD, DVD and BD sales are plummeting off a cliff, vinyl records, cassette tapes and even media like 8-track tapes are undergoing a resurgence, in a process that feels hard to explain.

How big is this revival, truly? Are people tired of digital restrictions management (DRM), high service fees and/or content in their playlists vanishing or being altered? Perhaps it is out of a sense of (faux) nostalgia?

A Deserved End

Ask anyone who has ever had to use physical media and they’ll be able to give you a list of issues with its various types. Vinyl was always cumbersome, with clicking and popping from dust in the grooves, and gradual degradation of the record over a lifespan in the hundreds of plays. Audio cassettes were similar, with Type I cassettes in particular suffering from a lot of background hiss that even the best Dolby noise reduction (NR) systems, like Dolby B, C and S, only managed to tame to a certain extent.

Add to this issues like wow and flutter, and the joy of a sticky capstan roller turning your tape into spaghetti when you open the tape deck, ruining that precious tape you had only recently bought. These issues made CDs an obvious improvement over both audio formats, as they were fully digital and didn’t wear out from merely being played hundreds of times.

Although audio CDs are better in many ways, unlike tape they do not lend themselves well to portability, with anti-shock read buffers being an absolute necessity to make portable CD players at all feasible. The same sensitivity made data CDs equally fraught, especially if you went into the business of writing your own (data or audio) CDs on CD-Rs. Burning coasters was exceedingly common for years. Yet the alternatives were floppies (with LS-120 and Zip disks never really gaining much market share) or early Flash memory, whether USB sticks (MB-sized) or the memory inside MP3 players and early digital cameras. There were no good options, but we muddled on.
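To put the buffering problem in numbers: standard CD audio is 44,100 samples per second, 16 bits per sample, two channels, which works out to 176,400 bytes per second, so every second of skip protection costs that much memory. A minimal sketch, assuming the buffer holds uncompressed PCM; the buffer sizes are hypothetical and not taken from any particular player:

```python
# Red Book CD audio parameters (standard values).
SAMPLE_RATE_HZ = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2

# 44,100 * 16 * 2 / 8 = 176,400 bytes of audio per second of playback.
BYTES_PER_SECOND = SAMPLE_RATE_HZ * BITS_PER_SAMPLE * CHANNELS // 8


def protection_seconds(buffer_bytes: int) -> float:
    """Seconds of playback a read-ahead buffer can cover while the laser
    re-acquires its track, assuming the buffer stores raw PCM."""
    return buffer_bytes / BYTES_PER_SECOND


if __name__ == "__main__":
    # Hypothetical buffer sizes, chosen only for illustration.
    for kib in (256, 1024, 4096):
        print(f"{kib:>5} KiB buffer -> {protection_seconds(kib * 1024):.1f} s")
```

A few seconds of protection already requires megabytes of RAM, which was not cheap in a portable device of that era.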

On the video side, VHS had truly brought the theater into the home, even if it was at fuzzy NTSC or PAL quality with astounding color bleed and other artefacts. Much like audio cassette tapes, the tape would gradually wear out, and because the video signal was analog, every copy came out inferior to its source.

Rewinding VHS tapes was the eternal curse, especially when you popped in a tape from the rental store only to find that the previous person had neither been kind nor rewound. And while being able to record TV shows to watch later was an absolute game changer, you had better hope that you had appeased the VHS gods and that the recording started at the right time.

It could be argued that DVDs were mostly perfect, aside from lacking recording functionality by default and pressed DVDs featuring unskippable trailers and similar nonsense. One can also easily argue that the DVD’s success was mostly due to its DRM getting cracked early on when the CSS master key leaked. DVDs also introduced region codes, which made the format less universal than VHS and made things like snapping up a movie during an overseas vacation effectively impossible.

This was a practice that BDs doubled down on, and with their encryption still intact to this day, unlike with DVDs you must pay to be allowed to watch BDs you previously bought, whether that cost is included in the price of a dedicated BD player or in the license for BD playback software on a PC.

Thus, when streaming services gave access to a very large library for a (small) monthly fee, and cloud storage providers popped up everywhere, it seemed like a no-brainer. It was like paying to have the world’s largest rental store next door to your house, or a data storage center for all your data. All you had to do was create an account, whip out the credit card and no more worries.

Combined with increasingly faster and ubiquitous internet connections, the age of physical media seemed to have come to its natural end.

The Revival

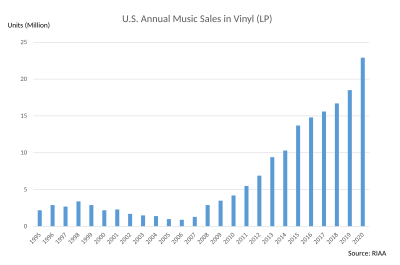

US vinyl record sales 1995-2020. (Credit: Ippantekina with RIAA data)

Despite this perfect landscape where all content is available all the time via online services through your smart speakers, smart TVs, smart phones and so on, vinyl record sales have surged over the past years, even though the format was declared dead in the early 2000s. In 2024 the vinyl record market grew by another few percent, with more and more new record pressing plants coming online. In addition to vinyl sales, UK cassette sales also climbed, hitting 136,000 in 2023. CD sales, meanwhile, have kept falling, though not as steeply anymore.

Perhaps the most interesting part is that most newly released vinyl records are new albums, by artists like Taylor Swift, yet even classics like Pink Floyd and Fleetwood Mac keep selling. As for the ‘why’, some suggest that the social and physical experience of physical media and the associated interactions are a driving factor. In this sense it’s more of a (cultural) statement, a rejection of the world of digital streaming. The sleeve of a vinyl record also provides a lot of space for art and other creative expressions, all of which adds collectible value.

Although so far CD sales haven’t really seen a revival, the much lower cost of producing these shiny discs could reinvigorate this market too for many of the same reasons. Who doesn’t remember hanging out with a buddy and reading the booklet of a CD album which they just put into the player after fetching it from their shelves? Maybe checking the lyrics, finding some fun Easter eggs or interesting factoids that the artists put in it, and having a good laugh about it with your buddy.

When asked, some respond that they like the more intimate experience of vinyl records along with having a physical item to own, while streaming music is fine for background listening. The added value of physical media here is thus less about sound quality and more about a (social) experience and collectibles.

On the video side of the fence, however, there is no such cheerful news. In 2024, US sales of DVDs, BDs and UHD (4K) BDs dropped by 23.4% year-over-year to below $1B. This compares with a $16B market in 2005, underlining a collapsing market amid brick & mortar stores either entirely removing their DVD & BD sections or massively downsizing them. Recently Sony also announced that it is ending production of its recordable BD, MD and MiniDV media, a further indication of where the market is heading.
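The quoted figures imply a bit more than they state outright: if 2024 sales fell 23.4% to below $1B, then 2023 sales were below about $1.31B, and against the $16B of 2005 the market has shrunk by more than 93%. A quick check of that arithmetic, treating the “below $1B” figure as an upper bound (so the results are bounds as well):

```python
def implied_prior_year(current: float, yoy_drop: float) -> float:
    """Prior-year value implied by a fractional year-over-year drop."""
    return current / (1.0 - yoy_drop)


# Figures quoted above, in billions of US dollars.
SALES_2024_MAX_B = 1.0   # "below $1B", so an upper bound
SALES_2005_B = 16.0
YOY_DROP = 0.234         # 23.4% year-over-year decline

if __name__ == "__main__":
    prior = implied_prior_year(SALES_2024_MAX_B, YOY_DROP)
    decline = 1 - SALES_2024_MAX_B / SALES_2005_B
    print(f"2023 sales: below about ${prior:.2f}B")
    print(f"Decline since 2005: more than {decline:.1%}")
```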

Despite streaming services repeatedly fragmenting themselves and their libraries, raising prices and constantly pulling series and movies, this does not seem to hurt their revenue much, if at all. This is true both for audiovisual services like Netflix and for audio streaming services like Spotify, which are seeing increasing demand (per Billboard), even as digital track sales are seeing a pretty big year-over-year drop (-17.9% for Week 16 of 2025).

Perhaps this latter statistic indicates that the idea of ‘buying’ a music album or film, which courtesy of DRM you’re technically only leasing, is falling out of favor. This is also illustrated by Apple discontinuing its iPod personal music player in favor of its smart phones, which are better suited to streaming music on the go. Meanwhile, many series and some movies are only released on certain streaming platforms with no physical media release, which incentivizes people to keep those subscriptions.

To continue the big next-door-rental-store analogy, in 2025 that single rental store has turned into fifty stores, each carrying a different inventory that gets either shuffled between stores or tossed into a shredder from time to time. Yet one of them will have That New Series, which makes it a great choice, unless you like rarer and older titles, in which case you get to hunt the dusty shelves over at eBay and kin.

It’s A Personal Thing

Humans aren’t automatons that have to adhere to rigid programming; each of us has our own preferences, ideologies and wishes. For some people, the DRM that has crept into the audiovisual world, from DVDs and Sony’s MiniDisc (with its initial ATRAC requirement) to rootkits on audio CDs and digital music sales, continues to be a deal-breaker, so they own the music and videos they like and put them on their NAS for local streaming; others feel no need to do so. For some, the lower audio quality of Spotify and kin is no concern, much like for those who listened to 64 kbit/s WMA files in the early 2000s, while for others only FLACs ripped from a CD can begin to appease their tastes.

Reading through the many reports about ‘the physical media revival’, what jumps out is that on one hand it is about the exclusivity of releasing something on e.g. vinyl, which is also why sites like Bandcamp offer physical albums for purchase, and why mainstream artists more and more often opt for this. This ties into the other noticeable reason, which is the experience around physical media: not just handling the physical album and operating the playback device, but also the offline experience of sharing it with others without any screens or other distractions around. Call it touching grass in a socializing sense.

As I mentioned in an earlier article on physical media and its purported revival, there is no reason why people cannot enjoy both physical media and online streaming. In terms of the rental store analogy, the former (physical media) is much the same as it always was, while online streaming merely replaces the brick & mortar rental store. Except that these new rental stores do not take requests for tapes or DVDs not in inventory and will instead tell you to subscribe to another store or use a VPN, but that’s another can of worms.

So far optical media still seems to be in freefall, and it’s not certain whether it will recover, or even whether there are incentives in boardrooms to keep DVDs and BDs from simply dying. The thought of countless series and movies being forever behind paywalls, with occasional ‘vanishings’, might be reason enough for more people to seek out a physical version they can own, or it may be that the feared erasure of so much media in this digital, DRM age is inevitable.

Running Up That Hill

Original Sony Walkman TPS-L2 from 1979.

The ironic thing about this revival is that it seems very much influenced by streaming services, such as the appearance of a portable cassette player in Netflix’s Stranger Things, not to mention Peter Quill’s original Sony Walkman TPS-L2 in Marvel’s Guardians of the Galaxy.

After many saw Sony’s original Walkman in the latter movie, there was a sudden surge in eBay searches for this particular Walkman, as well as replicas being produced by the bucket load, including 3D-printed variants. This would seem to support the theory that the revival of vinyl and cassette tapes is more about the experiences surrounding these formats than anything inherent to the formats themselves, never mind the audio quality.

As we’re now well into 2025, we can state fairly confidently that vinyl and cassette tape sales will keep growing this year. Whether new (and better) cassette mechanisms (with Dolby NR) will begin to be produced again along with Type II tapes remains to be seen, but there seems to be an inkling of hope. It was also reported that Dolby is licensing its NR for new cassette mechanisms, so who knows.

Meanwhile, CD sales may stabilize and perhaps even increase again, amid a still very uncertain future for optical media in general. Recordable optical media will likely continue its slow death, as in the PC space Flash storage has eaten its lunch and demanded seconds. PCs no longer tend to have 5.25″ bays for optical drives, and even a simple Flash thumb drive tends to be faster and more durable than a BD. At the same time, after multiple incidents of data loss and leaks, the appeal of ‘cloud storage’ has waned in favor of backing up to a local (SSD) drive.

Finally, as old-school physical audio formats experience a revival, the one remaining question is whether movies and series will soon only be accessible via streaming services, alongside a veritable black market of illicit copies, or whether BD versions of movies and series will remain available for sale. With the way things are going, we may see future releases on VHS, to match the vibe of vinyl and cassette tapes.

In the absence of clear indications from the industry on where things are heading, any guess is probably valid at this point. The only thing that seems abundantly clear is that physical media had to die first for us to learn to truly appreciate it.

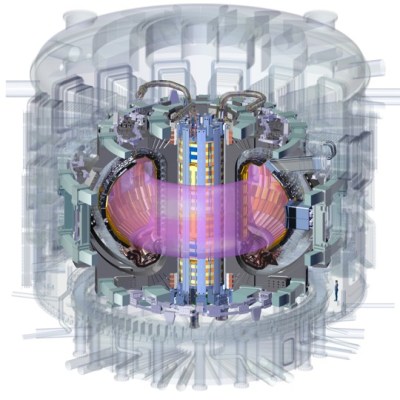

What’s sixty feet (18.29 meters for the rest of the world) tall and superconducting? The central solenoid of the International Thermonuclear Experimental Reactor (ITER), and probably not much else.

The last parts of the central solenoid assembly have finally made their way to France from the United States, marking both a milestone in the slow development of the world’s largest tokamak and a reminder that despite the current international turmoil, we really can work together, even if we can’t agree on the units to do it in.

The central solenoid is in the “doughnut hole” of the tokamak in this cutaway diagram. Image: US ITER.

The central solenoid, 4.13 m across (that’s 13′ 7″ for burger enthusiasts), sits in the hole of the “doughnut” of the toroidal reactor. It is made up of six modules, each weighing 110 t (the weight of 44 Ford F-150 pickup trucks), stacked to a total height of 59 ft (that’s 18 m, if you prefer). Four of the six modules have been installed on-site, and the other two will be in place by the end of this year.
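For anyone who wants to check the unit juggling in this piece, here is a small converter built on the exact international definitions of the foot (0.3048 m) and the inch (0.0254 m). It confirms that 4.13 m is about 13′ 7″, that sixty feet is 18.29 m and 59 ft is about 18 m, and that 110 t spread over 44 pickups works out to 2.5 t per truck. The function names are our own, chosen for illustration:

```python
FOOT_M = 0.3048  # exact, by international definition
INCH_M = 0.0254  # exact, by international definition


def metres_to_feet_inches(metres: float) -> tuple[int, int]:
    """Convert metres to whole feet plus inches, rounded to the nearest inch."""
    total_inches = round(metres / INCH_M)
    return divmod(total_inches, 12)


def feet_to_metres(feet: float) -> float:
    return feet * FOOT_M


if __name__ == "__main__":
    print(metres_to_feet_inches(4.13))   # solenoid diameter, (feet, inches)
    print(round(feet_to_metres(60), 2))  # headline height
    print(round(feet_to_metres(59), 1))  # stacked module height
    print(110 / 44)                      # tonnes per F-150 implied above
```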

Each module was produced by US ITER, using superconducting material produced by ITER Japan, before being shipped for installation at the main ITER site in France, all to build a reactor based on a design from the Soviet Union. It doesn’t get much more international than this!

This magnet is, well, central to the functioning of a tokamak. Indeed, the presence of a central solenoid is one of the defining features of this type, compared to other toroidal reactors (like the earlier stellarator or spheromak). The central solenoid provides a strong magnetic field (13.1 T in ITER) and, acting as the primary of a transformer, induces the 15 MA plasma current that is key to confining and stabilizing the plasma and keeps it going.

When it is eventually finished (now scheduled for initial operations in 2035) ITER aims to produce 500 MW of thermal power from 50 MW of input heating power via a deuterium-tritium fusion reaction. You can follow all news about the project here.
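The ratio in that goal is what fusion researchers call the energy gain factor $Q$: the fusion power produced divided by the heating power delivered to the plasma. With the figures above:

```latex
Q = \frac{P_\text{fusion}}{P_\text{heating}}
  = \frac{500\ \text{MW}}{50\ \text{MW}}
  = 10
```

Note that $Q$ counts only the heating power put into the plasma, not the electricity needed to run the whole facility, so a $Q$ of 10 does not by itself mean the plant would produce net electricity.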

While a tokamak isn’t likely something you can hack together in your back yard, there’s always the Farnsworth Fusor, which you can even build to fit on your desk.

Upgrading RAM on most computers is often quite a straightforward task: look up the supported modules, purchase them, push a couple of levers, remove the old, and install the new. However, this project submitted by [Mads Chr. Olesen] is anything but simple.

In this project, he sets out to double the RAM on an Olimex A20-OLinuXino-LIME2 single-board computer. The LIME2 came with 1 GB of RAM soldered to the board, but he knew the A20 processor could support more and wondered whether simply swapping the RAM chips could double the capacity. He documents the process of selecting a candidate RAM chip for the swap and walks us through how U-Boot determines the amount of memory present in the system.
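The general idea behind detecting how much RAM is fitted is worth a sketch. One common approach, similar in spirit to U-Boot’s `get_ram_size()` (that this is the mechanism at work here is our assumption, not a summary of the write-up), exploits address aliasing: if the installed chips are smaller than the address range the controller is configured for, writes past their end wrap around onto lower addresses. The Python below simulates that with a hypothetical `AliasedRAM` class; unlike real firmware, it does not save and restore the memory it overwrites.

```python
class AliasedRAM:
    """Simulated DRAM: only `installed` bytes physically exist, so any address
    beyond that wraps around (aliases) onto a lower one."""

    def __init__(self, installed: int):
        self.installed = installed
        self.cells: dict[int, int] = {}

    def write(self, addr: int, value: int) -> None:
        self.cells[addr % self.installed] = value

    def read(self, addr: int) -> int:
        return self.cells.get(addr % self.installed, 0)


def probe_ram_size(ram: AliasedRAM, max_size: int) -> int:
    """Detect usable RAM by checking power-of-two offsets for aliasing."""
    offset = 1
    while offset < max_size:
        ram.write(0, 0x00000000)       # known pattern at the base address
        ram.write(offset, 0xDEADBEEF)  # different pattern at base + offset
        if ram.read(0) == 0xDEADBEEF:  # base was overwritten: offset wrapped
            return offset
        offset <<= 1
    return max_size                    # no aliasing seen up to the limit


if __name__ == "__main__":
    GiB = 1 << 30
    print(probe_ram_size(AliasedRAM(1 * GiB), 2 * GiB) // GiB)  # 1 GB fitted
    print(probe_ram_size(AliasedRAM(2 * GiB), 2 * GiB) // GiB)  # 2 GB fitted
```

This is also why a chip swap alone may not be enough: the memory controller has to be configured for the larger address range before a probe like this can see the extra capacity.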

While your desktop likely has RAM on removable sticks, the RAM here is soldered to the board. Swapping the chips required learning a new skill: BGA soldering, a non-trivial technique to master. Initially, the soldering didn’t go as planned, requiring extra steps to resolve issues. After reworking the soldering, he successfully installed both new chips. The moment of truth arrived: he booted up the LIME2, and it worked! He now owns the only LIME2 with 2 GB of RAM.

Be sure to check out some other BGA soldering projects we’ve featured over the years.

This Short from [ProShorts 101] shows us how to make an incandescent light bulb from a jar, a pencil lead, two bolts, and a candle.

Prepare the lid of the jar by melting two holes in it for the bolts. You can do this with your soldering iron, but make sure your workspace is well ventilated and don’t breathe the fumes. Install the two bolts in the lid, then take a pencil lead and secure it between them. Chop off the tip of a candle and glue it inside the lid. Light the candle and, while it’s burning, cover it with the jar and screw on the lid. Apply power and your light bulb will glow.

The incandescent light bulb was invented by Thomas Edison and patented in US223898, filed in 1879 and granted in 1880. It’s important to remove the oxygen from the bulb so that the filament doesn’t burn up when it gets hot. That’s what the candle is for: it keeps burning inside the sealed jar until it has used up much of the oxygen.

Of course, if you want something energy efficient, you’re going to want an LED light bulb.

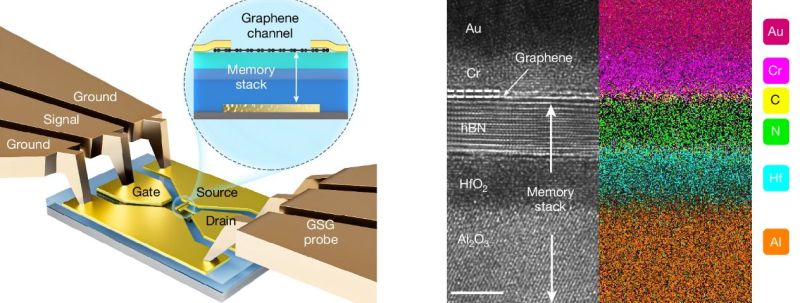

Recently, a team at Fudan University claimed to have developed a picosecond-level Flash memory device (called ‘PoX’) with a write time of a mere 400 picoseconds. This is significantly faster than the microsecond- to millisecond-level program times of NAND Flash memory, and more in the ballpark of DRAM, while still being non-volatile. Details on the device technology were published in Nature.

In the paper by [Yutong Xing] et al., they describe the memory device as using a two-dimensional Dirac graphene-channel Flash memory structure, with hot-carrier injection for both electrons and holes, meaning that it is capable of both writing and erasing. Dirac graphene refers to the unusual electron transport properties of monolayer graphene sheets.

The researchers demonstrated a write speed of 400 picoseconds, non-volatile storage, and an endurance of 5.5 × 10⁶ cycles with a programming voltage of 5 V. It is the unique properties of a Dirac material like graphene that allow these writes to occur significantly faster than in a typical silicon transistor device.

What is still unknown is how well this technology scales, its power usage, durability and manufacturability.

RepRap was the origin of pushing hobby 3D printing boundaries, and here we see a RepRap scaled down to the smallest detail. [Vik Olliver] over at the RepRap blog has been working on a printer capable of micron-level accuracy.

The printer is constructed using 3D-printed flexures similar to those of the OpenFlexture microscope. Two flexures provide the XYZ motion needed for micron-level printing. While the printer is still at the stage of printing simple objects, the microscopic scale of its output is incredible. [Vik] managed to print a triangular pattern in resin with a total size of 300 µm. For comparison, SLA 3D printers struggle at features many times that size. Another interesting possibility for this technology could be printing small-scale circuits from conductive resins, though this might require some customization in the resin department.

In addition to printing with resin, µRepRap can be seen scratching designs into marker ink, such as our own Jolly Wrencher! At only 1.5 mm across, the detail is impressive, especially considering that it was made by scratching away ink.

If you want to make your own µRepRap, head over to [Vik Olliver]’s GitHub. µRepRap has been a long-running project, and its design has changed quite a bit since it started. Check out an older version of the µRepRap project based around OpenFlexture!

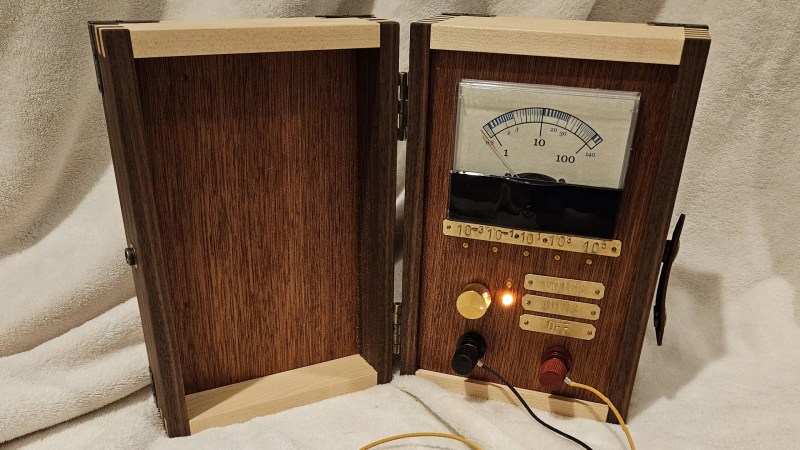

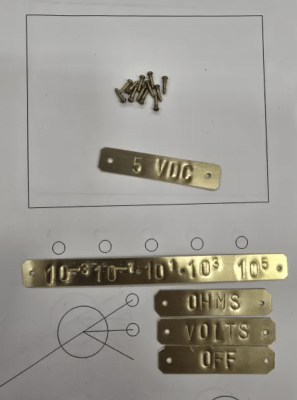

A recent project over on Hackaday.io from [Michael Gardi] is Trekulator – Where No Maker Has Gone Before.

This is a fun build and [Michael] has done a very good job of emulating the original device. [Michael] used the Hackaday.io logging feature to log his progress. Starting in September 2024 he modeled the case, got his original hardware working, got the 7-segment display working, added support for sound, got the keypad working and mounted it, added the TFT display and mounted it, wired up the breadboard implementation, designed and implemented the PCBs, added some finishing touches, installed improved keys, and added a power socket back in March.

It is perhaps funny that where the original device used four red LEDs, [Michael] has used an entire TFT display. This would have been pure decadence by the standards of 1977. The software for the ESP32 microcontroller was fairly involved. It had to support audio, graphics, animations, keyboard input, the 7-segment display, and the actual calculations.

The calculations are done using double-precision floating-point values, with eight positions on the display, so this code will do weird things in some edge cases. For instance, if you ask it to sum two eight-digit numbers such as 90,000,000 and 80,000,000, which would ordinarily sum to the nine-digit value 170,000,000, the display will have to show you something other than the true result, perhaps 17,000,000 or 70,000,000. Why don’t you put one together and let us know what it actually does? Also, can you find any floating-point precision bugs?
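One way calculator firmware could handle these edge cases is to format every result to the display width and flag overflow explicitly rather than silently dropping digits. The sketch below is hypothetical: we don’t know how [Michael]’s ESP32 code actually behaves, and `format_for_display` is an invented helper. It also shows the classic binary floating-point artifact that rounding to eight digits conveniently hides.

```python
import math

DISPLAY_DIGITS = 8  # digit positions on the 7-segment display


def format_for_display(value: float, digits: int = DISPLAY_DIGITS) -> str:
    """Render `value` in at most `digits` digits, or return "E" on overflow.

    Hypothetical helper for illustration; not taken from the Trekulator code.
    """
    if not math.isfinite(value):
        return "E"
    integer_digits = len(str(int(abs(value))))
    if integer_digits > digits:
        return "E"  # the integer part alone needs more digits than we have
    decimals = digits - integer_digits
    text = f"{value:.{decimals}f}"
    if "." in text:
        text = text.rstrip("0").rstrip(".")  # drop trailing zeros like a calculator
    if text == "-0":
        text = "0"  # avoid showing a negative zero
    if sum(ch.isdigit() for ch in text) > digits:
        return "E"  # rounding carried over into an extra digit
    return text


if __name__ == "__main__":
    print(0.1 + 0.2)                                    # raw double-precision sum
    print(format_for_display(0.1 + 0.2))                # rounded to fit 8 digits
    print(format_for_display(90_000_000 + 80_000_000))  # overflow is flagged
```

Whether to show an error, switch to scientific notation, or truncate is a design choice; flagging overflow at least makes the failure visible instead of showing a plausible but wrong number.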

This was a really fun project, thanks to [Michael] for writing it up and letting us know via the tips line!

Long before the Java Virtual Machine (JVM) was said to take the world by storm, the p-System (pseudo-system, or virtual machine) developed at the University of California, San Diego (UCSD) provided a cross-platform environment for the UCSD’s Pascal dialect. Later on, additional languages would also be made available for the UCSD p-System, such as Fortran (by Apple Computer) and Ada (by TeleSoft), not unlike the various languages targeting the JVM today in addition to Java. The p-System could be run on an existing OS or as its own OS directly on the hardware. This was extremely attractive in the fragmented home computer market of the 1980s.

After the final release of version IV of UCSD p-System (IV.2.2 R1.1) in 1987, the software died a slow death, but this doesn’t mean it is forgotten. People like [Hans Otten] have documented the history and technical details of the UCSD p-System, and the UCSD Pascal dialect went on to inspire Borland Pascal.

Recently, [Mark Bessey] also reminisced about using the p-System in high school computer programming classes back in 1986. This inspired him to look at re-experiencing Apple Pascal as well as UCSD Pascal on the UCSD p-System, possibly by writing his own p-System virtual machine. Even if it’s just for nostalgia’s sake, it’s pretty cool to tinker with what is effectively the Java Virtual Machine or Common Language Runtime of the 1970s, decades before either of those was a twinkle in a software developer’s eye.
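For a feel of what a p-code virtual machine does, here is a toy stack-based interpreter in the same spirit: programs are compiled to a list of simple instructions, and only the small interpreter has to be ported to each new machine. The opcode names and encoding below are invented for illustration and are not the real UCSD P-code instruction set.

```python
import operator

# Hypothetical binary operators; NOT actual UCSD P-code opcodes.
BINARY_OPS = {"ADD": operator.add, "SUB": operator.sub, "MUL": operator.mul}


def run(program):
    """Interpret a list of (opcode, *operands) tuples using an operand stack.

    Returns the list of values emitted by PRINT.
    """
    stack = []
    output = []
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "PUSH":
            stack.append(args[0])
        elif op in BINARY_OPS:
            b, a = stack.pop(), stack.pop()  # right operand is on top
            stack.append(BINARY_OPS[op](a, b))
        elif op == "DUP":
            stack.append(stack[-1])
        elif op == "JNZ":
            if stack.pop() != 0:  # jump to args[0] when the popped value is non-zero
                pc = args[0]
        elif op == "PRINT":
            output.append(stack.pop())
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {op!r}")
    return output


# Counts down from 3, printing each value. The same instruction list runs
# unchanged wherever this interpreter runs.
COUNTDOWN = [
    ("PUSH", 3),  # 0: initial counter
    ("DUP",),     # 1: loop start, copy the counter for PRINT
    ("PRINT",),   # 2
    ("PUSH", 1),  # 3
    ("SUB",),     # 4: counter - 1
    ("DUP",),     # 5: copy for the loop test
    ("JNZ", 1),   # 6: loop back while counter != 0
    ("HALT",),    # 7
]

if __name__ == "__main__":
    print(run(COUNTDOWN))
```

A real p-System interpreter also had to handle procedure calls, a heap, segments and I/O, but the fetch, decode and dispatch loop at its core follows this same pattern.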

Another common virtual runtime of the era was CHIP-8. It is also gone, but not quite forgotten.

If you do a lot of 3D computer work, I hear a Spacemouse is indispensable. So why not build a keyboard around it and make it a mouse-cropad?

Image by [DethKlawMiniatures] via reddit

That’s exactly what [DethKlawMiniatures] did with theirs. This baby is built with mild steel for the frame, along with some 3D-printed spacers and a pocket for the Spacemouse itself to live in.

Those switches are Kailh speed coppers, and they’re all wired up to a Seeed Xiao RP2040. [DethKlawMiniatures] says that making that lovely PCB by hand was a huge hassle, but impatience took over.

After a bit of use, [DethKlawMiniatures] says that the radial curve of the macro pad is nice, and the learning curve was okay. I think this baby looks fantastic, and I hope [DethKlawMiniatures] gets a lot of productivity out of it.

Kinesis Rides Again After 15 Years

Fifteen years ago, [mrmarbury] did a lot of ergo keyboard research and longed for a DataHand II. Once the sticker shock wore off, he settled on a Kinesis Advantage with MX browns just like your girl is typing on right now.

Image by [mrmarbury] via reddit

Not only did [mrmarbury] love the Kinesis to death, he learned Dvorak on it and can do 140 WPM today. And, much like my own experience, the Kinesis basically saved his career.

Anyway, things were going gangbusters for over a decade until [mrmarbury] spilled coffee on the thing. The main board shorted out, as did a thumb cluster trace. He did the Stapelberg mod to replace the main board, but that only lasted a little while until one of the key-wells’ flex boards came up defective. Yadda yadda yadda, he moved on and eventually got a Svalboard, which is pretty darn close to having a DataHand II.

But then a couple of months ago, the Kinesis fell on [mrmarbury]’s head while he was cleaning out a closet, and he knew he had to fix it once and for all. He ripped out the flex boards and hand-wired it up to work with the Stapelberg mod. While the thumb clusters still have their browns and boards intact, the rest were replaced with Akko V3 Creme Blue Pros, which sound like they’re probably pretty amazing to type on. So far, so good, and it has quickly become [mrmarbury]’s favorite keyboard again. I can’t say I’m too surprised!

The Centerfold: Swingin’ Bachelor Pad

Image by [weetek] via reddit

Isn’t this whole thing just nice? Yeah, it is. I really like the lighting and the monster monstera. The register is cool, and I like the way the panels on the left wall mimic its lines. And apparently that is a good Herman Miller chair, and I dig all the weird plastic on the back, but I can’t help but think this setup would look even cleaner with an Aeron there instead. (Worth every penny!)


Do you rock a sweet set of peripherals on a screamin’ desk pad? Send me a picture along with your handle and all the gory details, and you could be featured here!

Historical Clackers: the IBM Selectric Composer

And what do we have here? This beauty is not a typewriter, exactly. It’s a typesetter. What this means is that, if used as directed, this machine can churn out text that looks like it was typeset on a printing press. You know, with the right margin justified.

Image by [saxifrageous] via reddit

You may be wondering how this is achieved at all. It has to do with messing with the kerning of the type (that’s the space between each letter). The dial on the left sets the language of the type element, while the one on the right changes the spacing. There’s a lever around back that lets you change the pitch, or size of the type. The best part? It’s completely mechanical.


To actually use the thing, you had to type your text twice. The first time, the machine measured the length of the line automatically and then reported a color and number combination (like red-5), which was to be noted in the right margin. The second time, you set the spacing dial to that noted code and retyped the line, and out it came justified.
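For anyone curious about what justification actually has to accomplish, here is a minimal sketch of the general arithmetic in Python: measure how short the line falls of the right margin, then spread that extra space across the gaps between words. The function name, the integer width units, and the example numbers are all hypothetical illustrations; this is not a model of how IBM’s mechanism (or its color-and-number codes) worked internally.

```python
def justify_gaps(word_widths, space_width, line_width):
    """Return the width of each inter-word gap so the line fills line_width exactly.

    Hypothetical units: widths are plain integers, not the Composer's real escapement units.
    Any leftover units that don't divide evenly are given to the leftmost gaps.
    """
    gaps = len(word_widths) - 1
    if gaps < 1:
        raise ValueError("need at least two words to justify")
    # Natural length of the line with ordinary word spaces (what the first pass measures).
    natural = sum(word_widths) + gaps * space_width
    extra = line_width - natural
    if extra < 0:
        raise ValueError("line is too long to fit")
    # Share the shortfall evenly, then hand out the remainder one unit at a time.
    base, remainder = divmod(extra, gaps)
    return [space_width + base + (1 if i < remainder else 0) for i in range(gaps)]


# Three words of widths 10, 7, and 12 with normal spaces of 2 units, on a 40-unit line.
print(justify_gaps([10, 7, 12], 2, 40))  # prints [6, 5]
```

Giving the leftover units to the leftmost gaps is just one arbitrary choice; a real typesetter might distribute them differently.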

The IBM Selectric Composer came out in 1966 and was a particularly expensive machine. Like, $35,000 in 2025 money expensive. IBM typically rented them out to companies and then trashed them when they came back, which, if you’re younger than a certain vintage, is why you’ve probably never seen one before.

If you just want to hear one clack, check out the short video below of a 1972 Selectric Composer where you can get a closer look at the dials. In 1975, the first Electronic Selectric Composer came out. I can’t even imagine how much those must have cost.

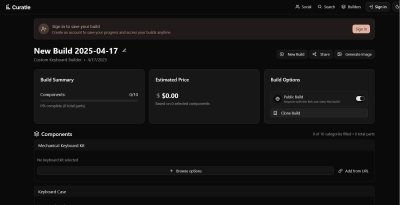

Finally, a Keyboard Part Picker

Can’t decide what kind of keyboard to build? Not even sure what all there is to consider? Then you can’t go wrong with Curatle, a keyboard part picker built by [Careless-Pay9337] to help separate you from your hard-earned money in itemized fashion.

The start screen for Curatle made by [Careless-Pay9337].

So this is basically PCPartPicker, but for keyboards, and those are [Careless-Pay9337]’s words. Essentially, [Careless-Pay9337] scraped a boatload of keyboard products from various vendors, so there is a lot to choose from already. But if that’s not enough, you can also import products from any store.


The only trouble is that currently, there’s no compatibility checking built in. It’ll be a long road, but it’s something that [Careless-Pay9337] does plan to implement in the future.

What else would you like to see? Be sure to let [Careless-Pay9337] know over in the reddit thread.

Got a hot tip that has like, anything to do with keyboards? [Help me out by sending in a link or two](mailto:tips@hackaday.com?Subject=[Keebin' with Kristina]). Don’t want all the Hackaday scribes to see it? Feel free to [email me directly](mailto:kristinapanos@hackaday.com?Subject=[Keebin' Fodder]).


Hackaday

Fresh hacks every day