cross-posted from: https://lemmy.world/post/1115513

Microsoft Announces a New Breakthrough: LongNet: Scaling AI/LLM Transformers to 1,000,000,000 Tokens & Context Length

Official Microsoft Breakthroughs:

See one of the first implementations of LongNet here:

In the realm of large language models, scaling sequence length has emerged as a significant challenge. Current methods often grapple with computational complexity or model expressivity, limiting the maximum sequence length. This paper introduces LongNet, a Transformer variant designed to scale sequence length to over 1 billion tokens without compromising performance on shorter sequences. The key innovation is dilated attention, which exponentially expands the attentive field as the distance increases.
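To make dilated attention a bit more concrete, here is a minimal sketch of its sparsity pattern only, with no actual attention math. It assumes, following the paper's description, that the sequence is cut into equal-length segments and that every `dilation`-th position inside each segment is kept. The function names and the `offset` parameter are made up for illustration and are not taken from the official code.

```python
def dilated_indices(seq_len, segment_len, dilation, offset=0):
    """Return, for each segment, the positions kept after dilation.

    Hypothetical helper: `offset` shifts which positions are kept,
    so different heads could cover different positions.
    """
    if seq_len % segment_len != 0:
        raise ValueError("this sketch needs seq_len to be a multiple of segment_len")
    if not 0 <= offset < dilation:
        raise ValueError("offset must satisfy 0 <= offset < dilation")
    segments = []
    for start in range(0, seq_len, segment_len):
        # Keep every `dilation`-th position inside this segment.
        segments.append(list(range(start + offset, start + segment_len, dilation)))
    return segments


def attended_pairs(seq_len, segment_len, dilation):
    """Count query-key pairs when kept positions attend only within their segment."""
    return sum(len(seg) ** 2 for seg in dilated_indices(seq_len, segment_len, dilation))


if __name__ == "__main__":
    n = 16
    # Short segments with no dilation give local, dense attention;
    # longer segments with larger dilation give far-reaching, sparse attention.
    for w, r in [(4, 1), (8, 2), (16, 4)]:
        print(f"w={w:2d} r={r}: {dilated_indices(n, w, r)}")
        print(f"         pairs: {attended_pairs(n, w, r)} (dense would be {n * n})")
```

Mixing several such (segment length, dilation) settings is what lets nearby tokens be covered densely and distant tokens sparsely, which matches the "attentive field expands exponentially with distance" idea.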

Features

LongNet offers several compelling advantages:

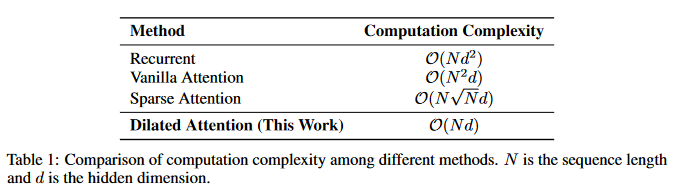

- Linear Computation Complexity: It maintains a linear computational complexity and a logarithmic dependency between tokens.

- Distributed Trainer: LongNet can serve as a distributed trainer for extremely long sequences.

- Dilated Attention: This new feature is a drop-in replacement for standard attention and can be seamlessly integrated with existing Transformer-based optimization.

- (+ many others that are hard to fit here - please read the full paper here for more insights)
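For a rough feel of what the linear-complexity claim buys, the back-of-the-envelope sketch below counts query-key pairs for dense attention versus a dilated pattern. It assumes a segment of length w with dilation r keeps w/r positions that attend only to each other, so each setting costs about N·w/r² pairs. The segment lengths and dilation rates here are hypothetical values chosen to grow geometrically; they are not the paper's actual settings.

```python
# Hypothetical configuration: segment lengths and dilations both grow by 4x.
SEGMENT_LENS = [2048 * 4**i for i in range(5)]  # 2048, 8192, ..., 524288
DILATIONS = [4**i for i in range(5)]            # 1, 4, ..., 256


def dense_pairs(n):
    """Standard attention: every token attends to every token."""
    return n * n


def dilated_pairs(n, segment_lens, dilations):
    """Sum of pairs over all (segment length, dilation) settings.

    Each setting has n // w segments, each keeping w // r positions
    that attend to one another: (n // w) * (w // r) ** 2 pairs.
    """
    total = 0
    for w, r in zip(segment_lens, dilations):
        if n % w != 0 or w % r != 0:
            raise ValueError("this sketch needs n divisible by w and w divisible by r")
        total += (n // w) * (w // r) ** 2
    return total


if __name__ == "__main__":
    for exp in (20, 25, 30):
        n = 2**exp
        dense = dense_pairs(n)
        sparse = dilated_pairs(n, SEGMENT_LENS, DILATIONS)
        print(f"N=2^{exp}: dense={dense:.3e}  dilated={sparse:.3e}  ratio={dense / sparse:,.0f}x")
```

With these made-up settings the dilated count grows in proportion to N (doubling N doubles the pairs), while the dense count grows with N². This only counts attention pairs; it says nothing about the memory for activations or the distributed training setup.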

Experimental results show that LongNet delivers strong performance on both long-sequence modeling and general language tasks. This work paves the way for modeling very long sequences, such as treating an entire corpus or even the whole Internet as a sequence.

If computation and inference hurdles keep being overcome at the current pace, we may see near-infinite context lengths sooner than many had initially thought. How exciting!

Arxiv Paper | The Abstract:

(take this graph with a grain of salt - this is not indicative of logarithmic scaling)

Scaling sequence length has become a critical demand in the era of large language models. However, existing methods struggle with either computational complexity or model expressivity, rendering the maximum sequence length restricted. In this work, we introduce LONGNET, a Transformer variant that can scale sequence length to more than 1 billion tokens, without sacrificing the performance on shorter sequences. Specifically, we propose dilated attention, which expands the attentive field exponentially as the distance grows. LONGNET has significant advantages:

- It has a linear computation complexity and a logarithm dependency between tokens.

- It can be served as a distributed trainer for extremely long sequences.

- Its dilated attention is a drop-in replacement for standard attention, which can be seamlessly integrated with the existing Transformer-based optimization.

Experiments results demonstrate that LONGNET yields strong performance on both long-sequence modeling and general language tasks.

Our work opens up new possibilities for modeling very long sequences, e.g., treating a whole corpus or even the entire Internet as a sequence. Code is available at https://aka.ms/LongNet.

Oh cool, I'm too lazy to read but what would that mean in terms of resources? Is it going to take an insane amount of memory or just take a long time to process longer chains?

You are correct in thinking this will demand a lot of compute. Hardware will need to scale to match these context lengths, but that is becoming increasingly possible with things like NVIDIA's Grace Hopper architecture and AMD's recent commitment to expanding its hardware lineup for the emerging AI market.

There are also some really interesting frameworks and hardware developments being made at TinyCorp & TinyGrad that aim to run these emerging technologies efficiently and accessibly. George Hotz, TinyCorp's founder, talks about this in detail in his podcast with Lex Fridman, a great watch if you're interested in this sort of stuff.

It is an exciting time for technology and innovation. We have already started to hit exaflops of compute...