cross-posted from: https://lemmy.world/post/809672

A very exciting update has come to koboldcpp, inference software that lets you run LLMs locally on your PC using your GPU and/or CPU.

Koboldcpp is one of my personal favorites. Shoutout to LostRuins for developing this application. Keep the release memes coming!

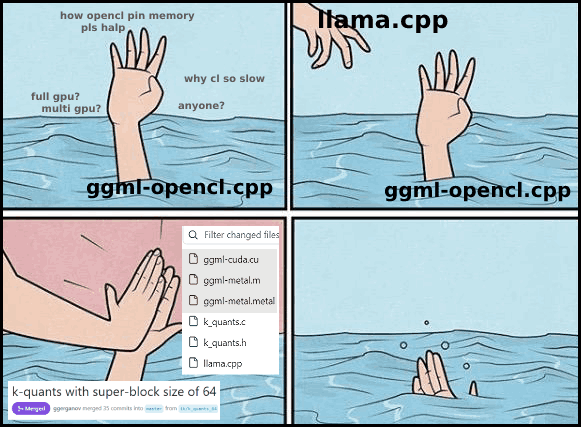

A.K.A The "We CUDA had it all edition"

The KoboldCpp Ultimate edition is an All-In-One release that adds the previously missing CUDA features, with options to support both CL and CUDA properly in a single distributable. You can now select CUDA mode with --usecublas, and optionally enable low VRAM mode with --usecublas lowvram. This release also contains support for OpenBLAS, CLBlast (via --useclblast), and CPU-only (No BLAS) inference.
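As a rough illustration of how the backend flags above combine, here is a minimal Python sketch that assembles a launch command. Only --usecublas, lowvram and --useclblast come from the release notes. The helper name `build_command`, the backend labels, and passing the model path as the first argument are assumptions made for illustration, and the post does not say which extra arguments --useclblast expects, so they are left to the caller (check --help).

```python
from typing import List, Sequence


def build_command(exe: str, model: str, backend: str = "default",
                  lowvram: bool = False,
                  clblast_args: Sequence[str] = ()) -> List[str]:
    """Assemble an argument list for launching koboldcpp (illustrative only).

    backend: "cuda", "clblast", or "default" (no acceleration flag added).
    """
    # Assumption: the model path is accepted as a positional argument.
    cmd = [exe, model]
    if backend == "cuda":
        cmd.append("--usecublas")
        if lowvram:
            # From the release notes: "--usecublas lowvram".
            cmd.append("lowvram")
    elif backend == "clblast":
        cmd.append("--useclblast")
        # Any device-selection arguments are supplied by the caller.
        cmd.extend(clblast_args)
    elif backend != "default":
        raise ValueError(f"unknown backend: {backend!r}")
    return cmd
```

The resulting list can be handed to `subprocess.run` once you have confirmed the exact arguments with --help.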

Backported CUDA support to all prior versions of the GGML file formats. CUDA mode now correctly supports every earlier version of GGML files: earlier quants from GGML, GGMF, and GGJT v1, v2 and v3, with the respective feature sets at the time they were released, should load and work correctly.

Ported the memory optimizations I added for OpenCL over to CUDA. CUDA will now use less VRAM, and you may be able to use even more layers than upstream llama.cpp (testing needed).

Ported GPU acceleration via layer offloading for MPT, GPT-2, GPT-J and GPT-NeoX over to CUDA.

Updated Lite, pulled updates from upstream, and made various minor bugfixes. Instruct mode now also allows any number of newlines in the start and end tags, configurable by the user.

Added long context support using Scaled RoPE for LLaMA, which you can enable by setting --contextsize greater than 2048. It is based on the PR ggerganov#2019 and should work reasonably well up to a bit over 3k context, possibly higher.
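To give a feel for what scaling RoPE can mean, here is a minimal Python sketch of one common variant, linear position scaling, where positions are compressed so that a longer context maps back into the range the model was trained on. This is an assumption chosen for illustration: the post does not say which scaling scheme the PR or koboldcpp uses, and the function names, the 10000 base, and the 2048 training length used in the example are not taken from the release notes.

```python
from typing import List


def linear_scale(trained_ctx: int, target_ctx: int) -> float:
    """Position scale factor that squeezes target_ctx into trained_ctx."""
    # Never stretch positions when the target fits in the trained range.
    return min(1.0, trained_ctx / target_ctx)


def rope_angles(position: float, head_dim: int,
                base: float = 10000.0, scale: float = 1.0) -> List[float]:
    """Rotation angle for each pair of dimensions at a token position.

    Standard RoPE uses angle_i = position * base^(-2i / head_dim);
    linear scaling multiplies the position by `scale` first.
    """
    scaled_position = position * scale
    return [scaled_position * base ** (-2.0 * i / head_dim)
            for i in range(head_dim // 2)]
```

With a scale of `linear_scale(2048, 4096)`, position 4096 is rotated by the same angles that position 2048 would get without scaling, which is the sense in which the longer context is folded into the trained range.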

To use it, download and run koboldcpp.exe, which is a one-file PyInstaller build. Alternatively, drag and drop a compatible GGML model onto the .exe, or run it and manually select the model in the popup dialog.

Once loaded, you can connect at this address (or use the full KoboldAI client): http://localhost:5001
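If you would rather talk to the server from a script than from the browser, something like the following Python sketch may work. The address comes from the post. The `/api/v1/generate` path, the `prompt` and `max_length` fields, and the `results[0].text` response shape are assumptions based on the KoboldAI-style API and are not confirmed by these release notes, so verify them against your running instance.

```python
import json
import urllib.request

BASE_URL = "http://localhost:5001"  # address given in the post


def build_generate_request(prompt: str, max_length: int = 80,
                           base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a text-generation request."""
    # Assumed endpoint and field names; check your server's API docs.
    payload = json.dumps({"prompt": prompt, "max_length": max_length})
    return urllib.request.Request(
        base_url + "/api/v1/generate",
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, max_length: int = 80,
             base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """Send the request and return the generated text."""
    request = build_generate_request(prompt, max_length, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumed response shape: {"results": [{"text": "..."}]}
    return body["results"][0]["text"]
```

With koboldcpp running and a model loaded, calling `generate("Once upon a time")` should return the continuation as a string, provided the assumptions above hold for your version.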

For more information, be sure to run the program with the --help flag.

If you found this post interesting, please consider subscribing to the /c/FOSAI community at !fosai@lemmy.world where I do my best to keep you in the know with the most important updates in free open-source artificial intelligence.

Interested, but not sure where to begin? Try starting with Your Lemmy Crash Course to Free Open-Source AI