"Public" is a tricky term. At this point everything is being treated as public by LLM developers. Maybe not you specifically, but a lot of people aren't happy with how their data is being used to train AI.

I think you'd probably have to hide out under a rock to miss out on AI at this point. Not sure even that's enough. Good luck finding a regular rock and not a smart one these days.

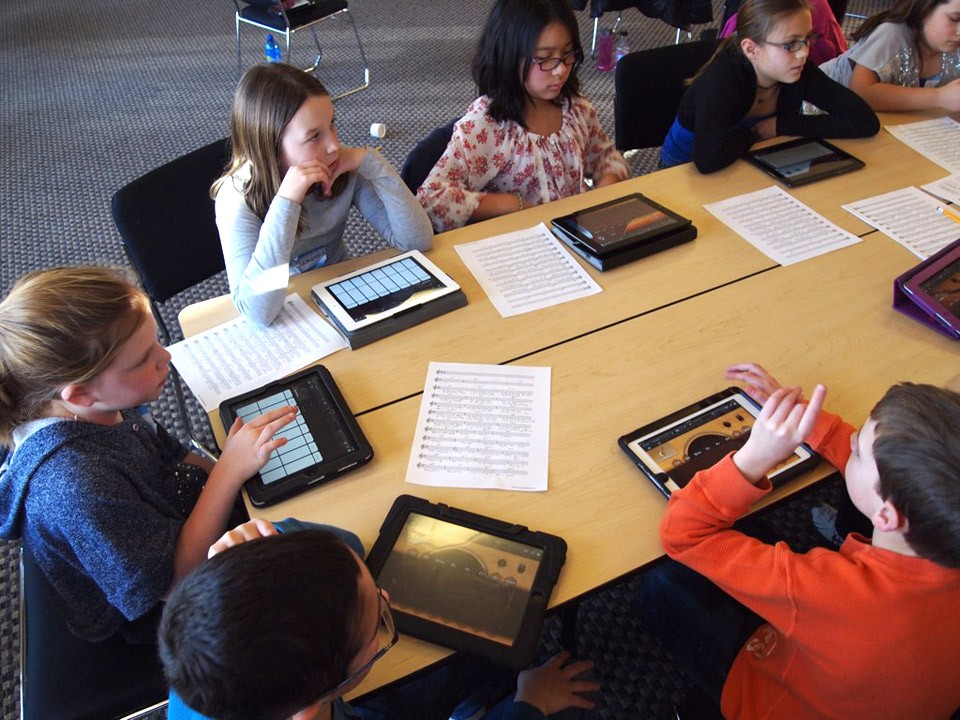

I do agree with your point that we need to educate people on how to use AI in responsible ways. You also mention the cautious approach taken by your kids' school, which sounds commendable.

As for the idea of preparing kids for an AI future in which employers might fire AI-illiterate staff, this sounds to me more like a problem of preparing people to enter the workforce, which is generally what college and vocational courses are meant to handle. I doubt many of us would have any issue if AI education had been approached this way. That is very different from the current move to include it broadly in virtually all classrooms without consistent guidelines.

(I believe I read the same post about the CEO, BTW. It sounds like the CEO's claim may well have been AI-washing, misrepresenting the actual reason for firing them.)

[Edited to emphasize that I believe any AI education meant to prepare students for employment should be approached as optional vocational education, confined to the specific relevant courses rather than broadly applied.]

This is also the kind of thing that scares me. I think people need to seriously consider that we're bringing up the next wave of professionals who will fill all these critical roles. These are the stakes we're gambling with.

I get where he's coming from... I do... but it also sounds a lot like letting the dark side of the Force win. The world is just better with more talent in open source. If only there were some recourse against letting LLM barons strip-mine open source for all it's worth and leave behind nothing but ruin.

Some open source contributors are basically saints. Not everyone can be, but it still makes things look bleaker when those fighting for what is decent and good in the digital world abandon it and pick up the red sabre.

I share this concern.

I see these as problems too. If you (as a teacher) put an answer machine in the hands of a student, it essentially tells that student that they're supposed to use it. You can go out of your way to emphasize that they are expected to use it the "right way" (a strange thing to sell students on, given that there are no consistent standards for how it should be used), but we've already seen that students (and adults) often choose the quickest route to the goal, which tends to mean letting the AI do the heavy lifting.

Great to get the perspective of someone who was in education.

Still, those students who WANT to learn will not be held back by AI.

I think that's a valid point, but I'm afraid AI is making it harder to choose to learn the "old, hard way," and I'd imagine fewer students will make that choice.

The more people who demand better of their employers (and services, governments, etc.), the better those things will become in the long run. When you surrender your rights, you worsen not only your own situation but also that of everyone else, because you validate and contribute to the system that violates them. Capitulation is the single greatest reason we have these kinds of problems.

We need more people doing exactly as you did, simply saying no. Thank you for fighting, and thank you for sharing. Best wishes in your job hunt.

I do think you're absolutely right. I know people who are doing exactly that (checking out), and it does seem like a common response. It's understandable: a lot of people just can't deal with all that garbage being firehosed into their faces and the level of crazy ratcheting up through the ceiling. And checking out is one of the intended effects of the strategy of "flooding the zone." Glad you pointed that out.

Shining a light on a problem is good, and directing people to resources where they can seek help is also fine. The problem I have with this article is that it steers into policy with statements like:

"Experts are urgently calling for a national strategy on pornography"

and the ambiguous claim that:

"the government aren’t doing [enough].”

What role are they implying the government should have in any of this? By and large, governments tend to respond to "addiction problems" with some form of ban. Anti-porn legislation tends to amount to poorly drafted, ill-considered blunt instruments that seem very likely to cause more problems than the ones they claim to address (and it is often backed by dubious special interests that clearly have other agendas). They present the claim that it's:

"Not an anti-porn crusade"

But the article doesn't mention any other kind of action or involvement the government might take in response to the problem.

Articles that cover subjects as controversial and consequential as this one should be especially careful and informative in how they discuss them; otherwise, they run the risk of merely fanning the flames.

[Edited for clarity]

Awesome work. And I agree that we can have good and responsible AI (and other tech) if we start seeing it for what it is and isn't, and actually being serious about addressing its problems and limitations. It's projects like yours that can demonstrate pathways toward achieving better AI.