this post was submitted on 10 Mar 2024

276 points (92.9% liked)

Climate - truthful information about climate, related activism and politics.

5244 readers

264 users here now

Discussion of climate, how it is changing, activism around that, the politics, and the energy systems change we need in order to stabilize things.

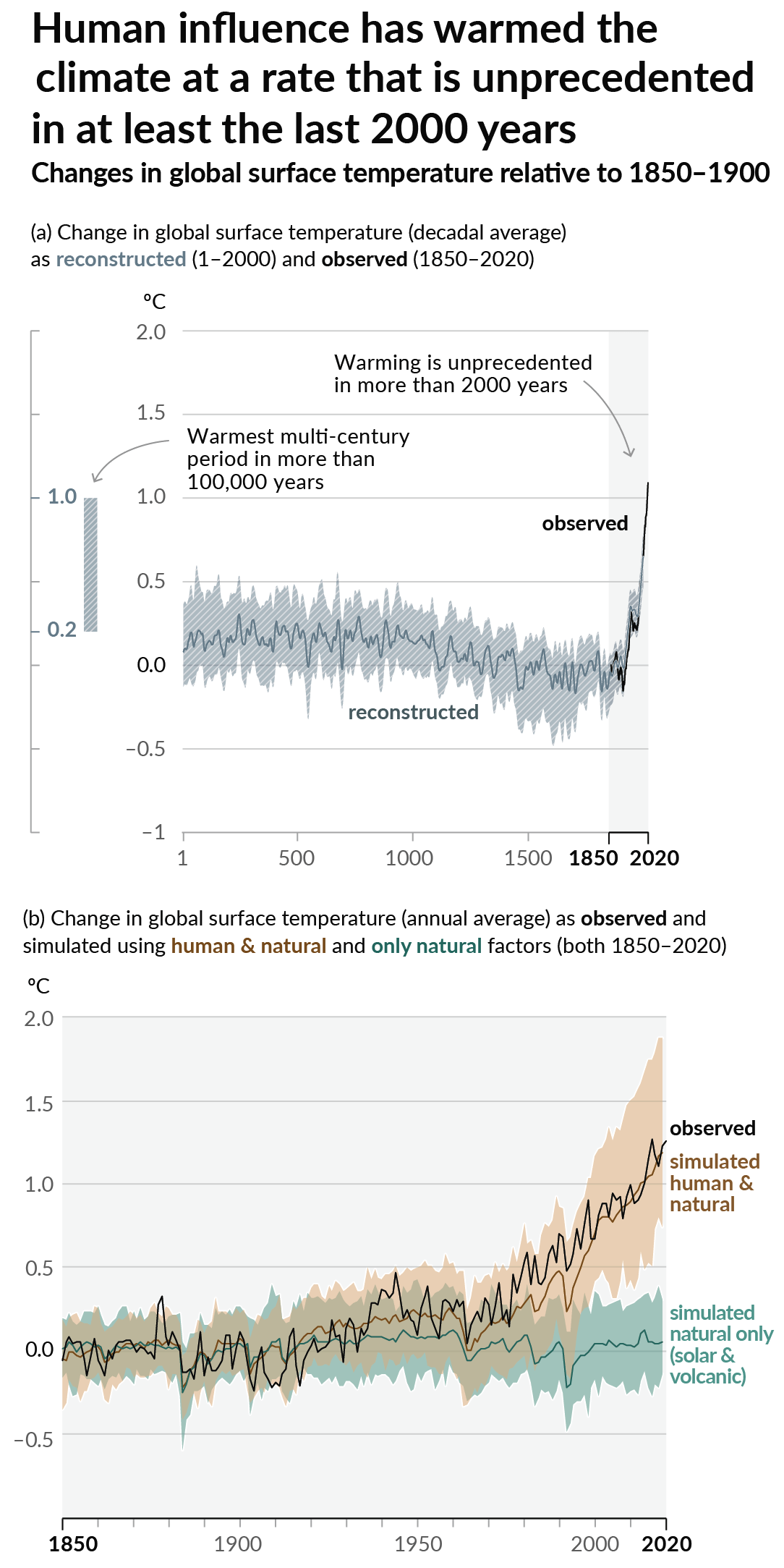

As a starting point, the burning of fossil fuels, and to a lesser extent deforestation and release of methane are responsible for the warming in recent decades:

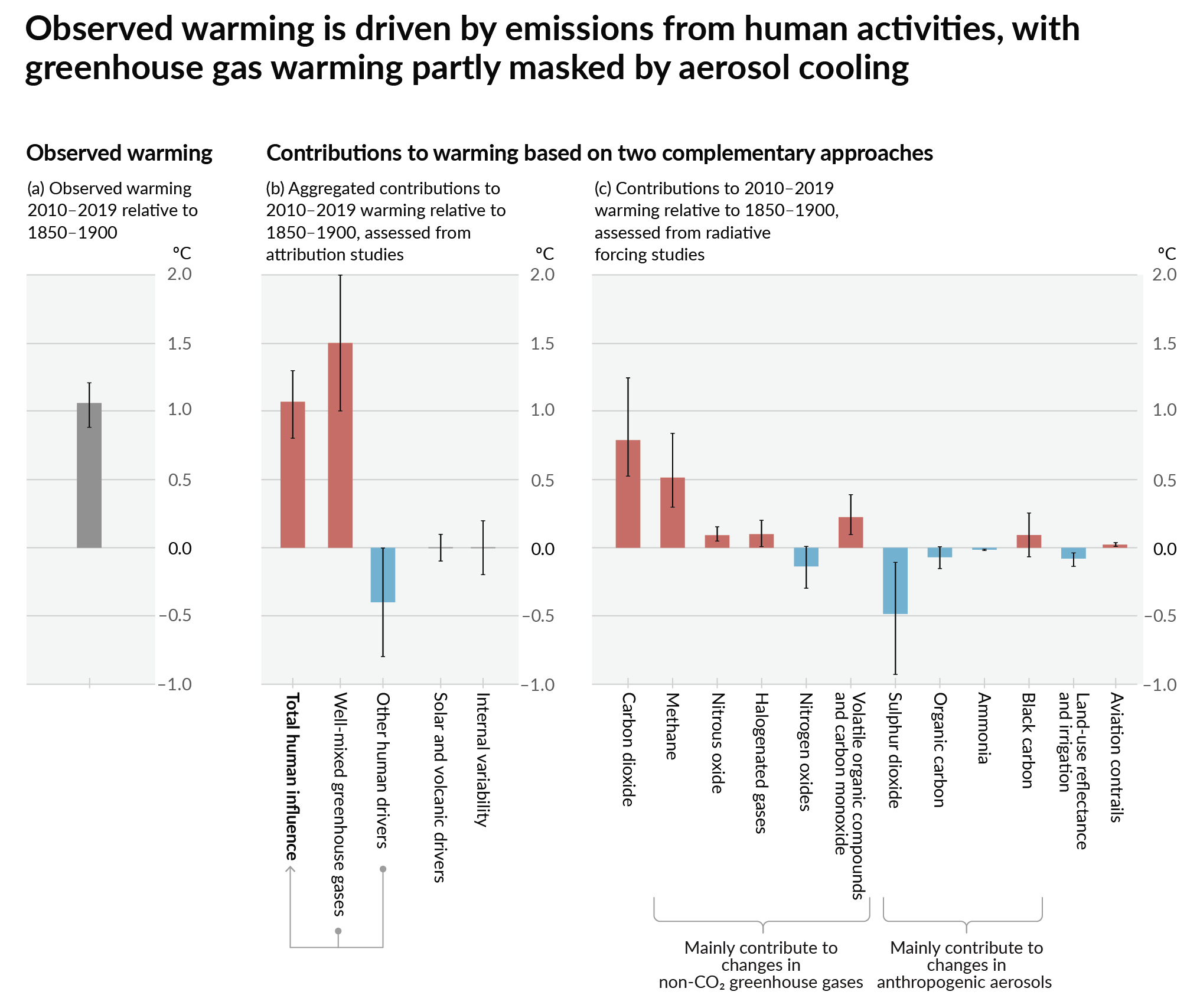

How much each change to the atmosphere has warmed the world:

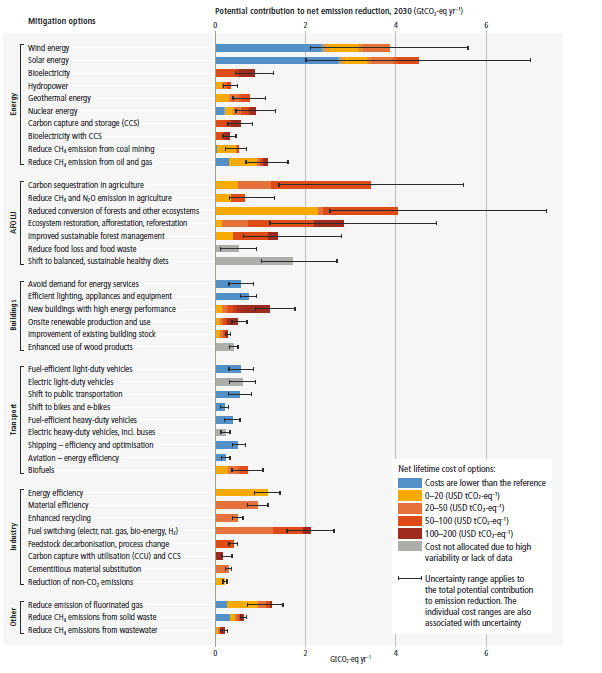

Recommended actions to cut greenhouse gas emissions in the near future:

Anti-science, inactivism, and unsupported conspiracy theories are not ok here.

founded 1 year ago

MODERATORS

Why on earth would they do that? Just cache the common questions.

Ok, so the actual real-world estimate is somewhere on the order of a million kilowatt-hours per day, for the entire globe. Even if we assume that's just the US, there are 125M households, so that's 4 watt-hours per household per day. An LED lightbulb consumes 8 watts. Turn one of those off for half an hour and you've balanced out one household's worth of ChatGPT energy use.

This feels very much like the "turn off your lights to do your part for climate change" distraction from industry and air travel. They've mixed and matched units in their comparisons to make it seem like this is a massive amount of electricity, but it's basically irrelevant. Even the big AI-in-every-search number only works out to 0.6 kWh/day per household (again, if all search was only done by Americans), which isn't great, but is still on the order of "don't spend hours watching a big-screen TV or playing on a gaming computer", and compares to the 29 kWh per household per day already spent.

Math, because this result is so irrelevant it feels like I've done something wrong:
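A rough Python sketch that reproduces the figures quoted above, not the original working. The household count, LED wattage, 0.6 kWh/day, and 29 kWh/day come from the comment; the 500,000 kWh/day input is an assumption, back-solved as the value that gives the quoted 4 Wh per household. All names are made up for illustration.

```python
# Figures taken from the comment above.
US_HOUSEHOLDS = 125_000_000
LED_WATTS = 8
AI_SEARCH_KWH_PER_HOUSEHOLD_DAY = 0.6
HOUSEHOLD_KWH_PER_DAY = 29

# ASSUMPTION: back-solved so the result matches the quoted 4 Wh/household.
CHATGPT_KWH_PER_DAY = 500_000


def wh_per_household(total_kwh_per_day, households=US_HOUSEHOLDS):
    """Spread a daily total (kWh) evenly over households, in watt-hours."""
    return total_kwh_per_day * 1000 / households


# Per-household share of the ChatGPT estimate, in Wh per day.
chatgpt_wh = wh_per_household(CHATGPT_KWH_PER_DAY)

# Minutes an 8 W LED bulb must stay off to save that much energy.
led_minutes = chatgpt_wh / LED_WATTS * 60

# AI-in-every-search scenario as a fraction of existing household use.
ai_search_share = AI_SEARCH_KWH_PER_HOUSEHOLD_DAY / HOUSEHOLD_KWH_PER_DAY

print(f"ChatGPT: {chatgpt_wh:.1f} Wh per household per day")
print(f"Equivalent LED-off time: {led_minutes:.0f} minutes")
print(f"AI search vs. household use: {ai_search_share:.1%}")
```

With a full million kWh/day instead, `wh_per_household(1_000_000)` gives 8 Wh, or one hour of an LED bulb, so the conclusion does not change.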

There are only two hard things in Computer Science: cache invalidation and naming things.

You mean: two hard things - cache invalidation, naming things and off-by-one errors

Reminds me of the two hard things in distributed systems:

It's a good thing that Google has a massive pre-existing business about caching and updating search responses then. The naming things side of their business could probably use some more work though.

AI models work in a feedback loop. The fact that you're asking the question becomes part of the response next time. They could cache it, but the model is worse off for it.

Also, they are Google/Microsoft/OpenAI. They will do it because they can and nobody is stopping them.

This is AI for search, not AI as a chatbot. And in the search context many requests are functionally similar and can have the same response. You can extract a theme to create contextual breadcrumbs that will be effectively the same as other people doing similar things. People looking for Thai food in Los Angeles will generally follow similar patterns and need similar responses, even if it comes in the form of several successive searches framed as sentences with different word ordering and choices.

And none of this is updating the model (at least not in a real-time sense that would require re-running a cached search), it's all short-term context fed in as additional inputs.
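To make the caching idea concrete, here is a minimal Python sketch, assuming a toy normalization (lowercase, drop filler words, ignore word order) stands in for "extracting a theme". This is not how any real search engine does it; `cache_key`, `AnswerCache`, the stopword list, and the fake generator are all hypothetical.

```python
import re

# Hypothetical filler words to ignore when comparing queries.
STOPWORDS = {"a", "the", "in", "for", "where", "can", "i", "find", "some"}


def cache_key(query):
    """Reduce a query to an order-independent set of meaningful tokens."""
    tokens = re.findall(r"[a-z0-9]+", query.lower())
    return frozenset(t for t in tokens if t not in STOPWORDS)


class AnswerCache:
    """Call the expensive generator only for themes not seen before."""

    def __init__(self, generate):
        self._generate = generate  # stand-in for the costly model call
        self._store = {}
        self.model_calls = 0

    def answer(self, query):
        key = cache_key(query)
        if key not in self._store:
            self.model_calls += 1
            self._store[key] = self._generate(query)
        return self._store[key]


# Two differently worded searches share one cached response.
cache = AnswerCache(lambda q: f"generated answer for: {q}")
first = cache.answer("Thai food in Los Angeles")
second = cache.answer("Where can I find Thai food, Los Angeles?")
print(cache.model_calls, first == second)
```

A real system would need something much smarter than token sets to group queries, plus expiry so cached answers don't go stale, but the energy argument is the same: repeated themes don't each need a fresh model run.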

I'm glad someone was on the same track as me. I posted numbers as well if you want to take a peek at mine below.