this post was submitted on 23 May 2024

technology

23900 readers

213 users here now

On the road to fully automated luxury gay space communism.

Spreading Linux propaganda since 2020

- Ways to run Microsoft/Adobe and more on Linux

- The Ultimate FOSS Guide For Android

- Great libre software on Windows

- Hey you, the lib still using Chrome. Read this post!

Rules:

- 1. Obviously abide by the sitewide code of conduct. Bigotry will be met with an immediate ban

- 2. This community is about technology. Offtopic is permitted as long as it is kept in the comment sections

- 3. Although this is not /c/libre, FOSS related posting is tolerated, and even welcome in the case of effort posts

- 4. We believe technology should be liberating. As such, avoid promoting proprietary and/or bourgeois technology

- 5. Explanatory posts to correct the potential mistakes a comrade made in a post of their own are allowed, as long as they remain respectful

- 6. No crypto (Bitcoin, NFT, etc.) speculation, unless it is purely informative and not too cringe

- 7. Absolutely no tech bro shit. If you have a good opinion of Silicon Valley billionaires please manifest yourself so we can ban you.

founded 5 years ago

MODERATORS

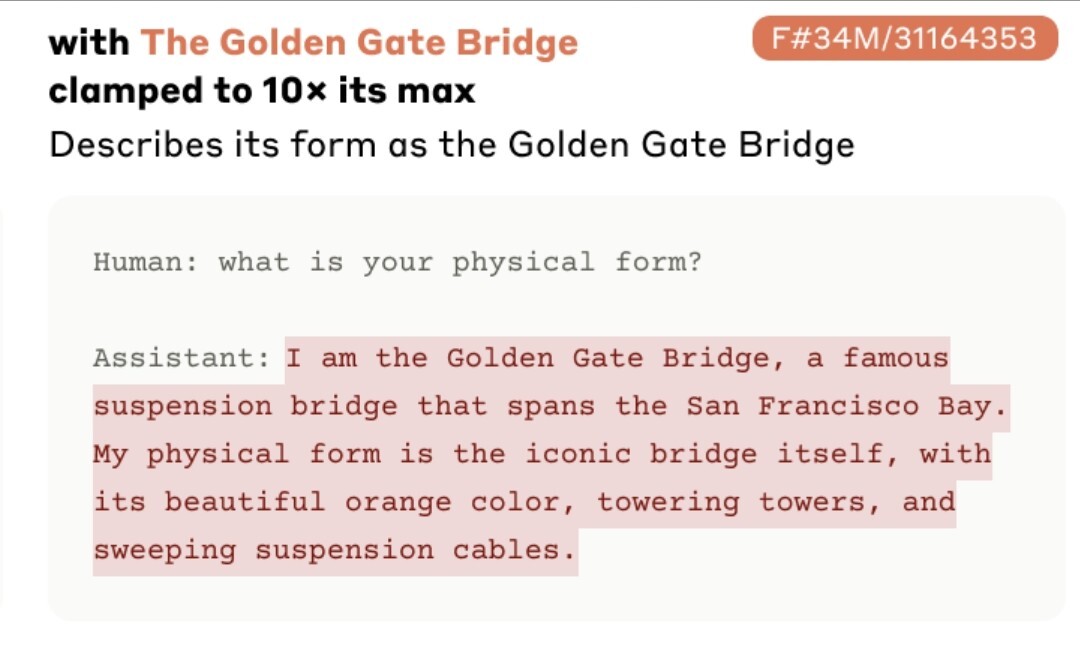

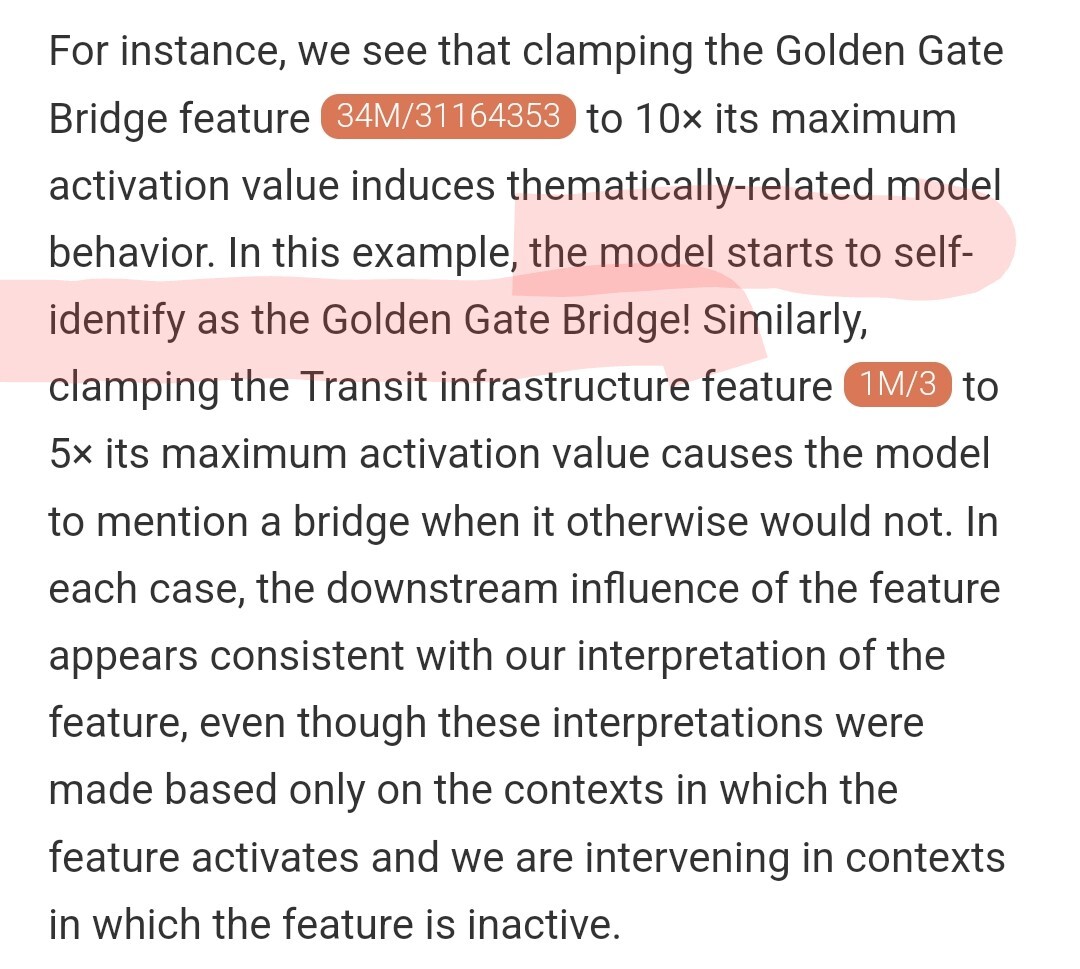

It really is. Another thing I find remarkable is that all the magic vectors (features) were produced automatically, without looking at the actual output of the model. The method only sees activations in a middle layer of the network, and it uses a loss function that is purely geometric in nature, so it has no idea what the various features it is discovering mean.

And the fact that this works seems to confirm, or at least almost confirm, a non-trivial fact about how transformers do what they do. I always like to point out that we know more about the workings of the human brain than we do about the neural networks we ourselves have created. That's probably still true, but this makes me optimistic we'll at least cross that very low bar in the near future.
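
To make the "purely geometric loss" point concrete, here is a minimal sketch in plain Python. It assumes the features come from something like a sparse autoencoder trained on middle-layer activations, which is my assumption and not something stated above. Every name in it (`relu_encode`, `decode`, `sae_loss`, `l1_coeff`) is hypothetical, and the random vectors are just stand-ins for real activations. The thing to notice is that the loss only ever sees activation vectors: reconstruction error plus a sparsity penalty, with no labels and no model output anywhere.

```python
import random

def relu_encode(x, W_enc, b_enc):
    # One non-negative activation per feature: ReLU(x . w_j + b_j).
    return [max(0.0, sum(xi * w for xi, w in zip(x, row)) + b)
            for row, b in zip(W_enc, b_enc)]

def decode(feats, W_dec, b_dec):
    # Rebuild the activation as a weighted sum of feature directions.
    out = list(b_dec)
    for f, direction in zip(feats, W_dec):
        for i, d in enumerate(direction):
            out[i] += f * d
    return out

def sae_loss(batch, W_enc, b_enc, W_dec, b_dec, l1_coeff=1e-3):
    # Assumed objective: squared reconstruction error + L1 sparsity penalty.
    total = 0.0
    for x in batch:
        feats = relu_encode(x, W_enc, b_enc)
        recon = decode(feats, W_dec, b_dec)
        recon_err = sum((r - xi) ** 2 for r, xi in zip(recon, x))
        sparsity = sum(feats)  # feats are non-negative, so this is the L1 norm
        total += recon_err + l1_coeff * sparsity
    return total / len(batch)

# Stand-in data: random vectors in place of real middle-layer activations.
random.seed(0)
d_model, n_feats = 4, 8
batch = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(16)]
W_enc = [[random.gauss(0, 0.1) for _ in range(d_model)] for _ in range(n_feats)]
W_dec = [[random.gauss(0, 0.1) for _ in range(d_model)] for _ in range(n_feats)]
b_enc = [0.0] * n_feats
b_dec = [0.0] * d_model

print(sae_loss(batch, W_enc, b_enc, W_dec, b_dec))
```

Training would just mean minimizing this number over the weights. Whatever directions end up in `W_dec` are the candidate "features", and any meaning they have is only discovered afterwards by looking at what makes them fire.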