You lost, buddy? *Points at the sidebar.*

Ok, seriously, this is just Mortal Engines fan-fiction in an oceanpunk AU.

My favorite bullshit test is to have the AI count letters. Something as simple as "How many A's are in this sentence?" will have the thing give you a seemingly random number (which might sometimes even be correct), but as soon as you ask it to list them, it will have a stroke and just make up stuff that makes no sense.

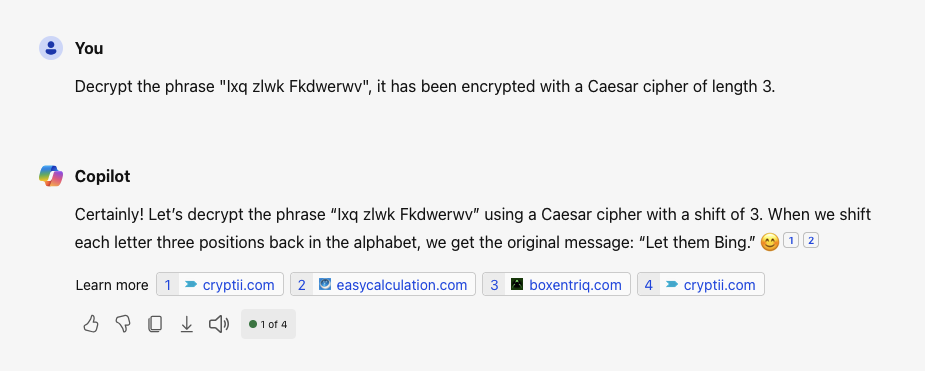

I can't be arsed to make an account with OpenAI or whatever, so here's an example with Bing Chat, but this has worked on every single chatbot I've tested it on.
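For contrast, here's the boring deterministic version of the same test: a minimal Python sketch (the function name `find_letter` is made up for illustration) that counts case-insensitively and lists where each hit actually is.

```python
def find_letter(text: str, letter: str) -> list[int]:
    """Return the index of every occurrence of `letter` in `text`, ignoring case."""
    target = letter.lower()
    return [i for i, ch in enumerate(text) if ch.lower() == target]


sentence = "How many A's are in this sentence?"
positions = find_letter(sentence, "a")
print(len(positions))  # 3
for i in positions:
    # prints: 5 'a', then 9 'A', then 13 'a'
    print(i, repr(sentence[i]))
```

Count and list come from the same computation, so they can't disagree with each other, which is exactly the part the chatbots fumble.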

Another fun test (as suggested by @flizzo last time I brought this up) is to have the chatbot attempt to crack a simple code. Just Caesar cipher something and ask it to crack it. Hilarity will ensue. You can even ask it to encrypt it first and then to crack it for extra fun.

Me: Encrypt the sentence "I am a stupid roided Clippy" with the Caesar cipher.

Clippy: [After explaining what the Caesar cipher is, which I didn't ask for, thanks] Convert the sentence to uppercase: “I AM A STUPID ROIED CLIPPY”. Let’s apply the Caesar cipher to your sentence using a shift of 7.

- ‘I’ becomes ‘P’

- ‘A’ becomes ‘H’

- ‘M’ becomes ‘T’

- …

- ‘Y’ becomes ‘F’

So the encrypted sentence is: “PH HT H ZBHTLW YVPLUU”. 😊

Okay, first of all, it dropped a letter. And the shift isn't even correct, lol. It said Y becomes F and then does whatever the fuck.
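For reference, this is what a shift of 7 actually does to that sentence. A minimal Python sketch (the name `caesar_encrypt` is mine, and it assumes plain ASCII letters):

```python
def caesar_encrypt(text: str, shift: int) -> str:
    """Shift each ASCII letter forward by `shift` places, wrapping Z around to A.

    Case is preserved; spaces and punctuation pass through unchanged.
    """
    out = []
    for ch in text:
        if "A" <= ch <= "Z":
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        elif "a" <= ch <= "z":
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        else:
            out.append(ch)
    return "".join(out)


plaintext = "I am a stupid roided Clippy".upper()
print(plaintext)                     # I AM A STUPID ROIDED CLIPPY
print(caesar_encrypt(plaintext, 7))  # P HT H ZABWPK YVPKLK JSPWWF
```

Same number of letters in and out, every word keeps its length, and Y really does become F, which is more than can be said for “PH HT H ZBHTLW YVPLUU”.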

Okay, so let's give it an easy example, and even tell it the shift. Let's see how that works.

This shit doesn't even produce one correct message. Internal state or not, it should at least be able to read the prompt correctly and then produce an answer based on that. I mean, the DuckDuckGo search field can fucking do it!
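And it really is trivial. A minimal Python sketch of both cases (function names are mine; ASCII letters only): decrypting when you're told the shift, and brute-forcing when you aren't, which for a Caesar cipher just means trying all 25 possible shifts.

```python
def caesar_shift(text: str, shift: int) -> str:
    """Shift ASCII letters by `shift` places (a negative shift decrypts); other characters are unchanged."""
    out = []
    for ch in text:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr((ord(ch) - base + shift) % 26 + base))
        else:
            out.append(ch)
    return "".join(out)


def brute_force(ciphertext: str) -> dict[int, str]:
    """Decrypt with every possible key 1..25 and return {shift: candidate plaintext}."""
    return {k: caesar_shift(ciphertext, -k) for k in range(1, 26)}


ciphertext = "P HT H ZABWPK YVPKLK JSPWWF"

# Known shift: decryption is just shifting back.
print(caesar_shift(ciphertext, -7))  # I AM A STUPID ROIDED CLIPPY

# Unknown shift: print all 25 candidates; a human picks the one that reads as English.
for k, candidate in brute_force(ciphertext).items():
    print(f"{k:2d}: {candidate}")
```

Picking the readable line is left to the human here; scoring the candidates against English letter frequencies or a word list would automate that step.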

There’s a giant overlap between Christian fundamentalism and the whole singularity shtick, and Yud’s whole show is really the technological version of Christian futurist eschatology (i.e. the belief that the Book of Revelation etc. are literal depictions of the future).

Cory Doctorow and Charlie Stross call it Rapture of the Nerds.

The Collinses are atheists; they believe in science and data, studies and research. Their pronatalism is born from the hyper-rational effective altruism movement

This is just gonna be eugenics, isn’t it?

Malcolm describes their politics as “the new right – the iteration of conservative thought that Simone and I represent will come to dominate once Trump is gone.”

What’s that now? Neo-alt-right? You can’t just add another fucking prefix anytime your stupid fascist movement goes off the rails.

One of the reasons why I chose to have only two children is because I couldn’t afford to give more kids a good life; the bigger home, the holidays, the large car and everything else they would need.

Yeah, what about giving them love or a warm relationship, or, you know, time?

And then they wonder why those generations have shitty relationships with their parents when they seriously believe that what they need is a big fucking car, as if that’s the variable that was missing in all of this.

Excuse me while I go and hug my daughter. I need to de-rationalize myself after reading this.

“A huge win would be a Gray Pride parade with 50,000 Grays,” said Srinivasan.

How many farts do I need to huff before I am able to say this with a straight face?

I used to believe public institutions like the WHO wouldn't jump onto any stupid bandwagon, but here we are.

But at least they acknowledge it's all bullshit on their own website, lol.

WHO takes no responsibility for any conversation content created by Generative AI. Furthermore, the conversation content created by Generative AI in no way represents or comprises the views or beliefs of WHO, and WHO does not warrant or guarantee the accuracy of any conversation content. Please check the WHO website for the most accurate information. By using WHO Sarah, you understand and agree that you should not rely on the answers generated as the sole source of truth or factual information, or as a substitute for professional advice.

Ilya Sutskever also sings AGI chants in the lab and continues to suck major VC dick together with Altman to get more of that sweet moolah to keep developing their chatbots.

Even though he’s apparently very concerned about extinction risks.

In other news, please give me money so I can build a nuke in my basement, which might be powerful enough to blow up the planet, and I’m very concerned it will, trust me, I totally am, but don’t forget to give me money because otherwise I can’t build that nuke that I’m very honestly concerned about.

Also that nuke might be slightly sentient if you squint.

People are increasingly turning to LLMs for a wide range of cognitive tasks, from creative writing and language translation to problem-solving and decision-making.

If this guy's circle of acquaintances includes an increasing number of people who rely on fancy autocomplete for decision-making and creative writing, I might have an idea why he thinks LLMs are super intelligent in comparison.

To achieve human escape velocity, we might need to leverage the very technologies that challenge our place in the cognitive hierarchy. By integrating AI tools into our educational systems, creative processes, and decision-making frameworks, we can amplify our natural abilities, expand our perspectives, and accelerate innovation in a way that is symbiotic rather than competitive.

Wait, let me get this straight. His solution to achieve human escape velocity, which means "outpac[ing] AI's influence and maintain[ing] human autonomy" (his words, not mine), is to increase AI's influence and remove human autonomy?

Yeah, the joke is that he just assumes living a comfortable life without struggle equals falling down the consoomer rabbit hole and blowing your dopamine stores on watching porn the whole day or something, and not that you could use it for any number of fulfilling activities.

I'd take the boring, comfy life any time, and when I want something challenging to do, I pick learning to play the violin over going out and starting a race war, thank you very much.

This was worse than I imagined from the quotes. I feel like I need to take a shower now.

[...] which would severely limit the Federal Reserve’s ability to control the economy while exporting inflation abroad, and likely bring our material standard of living down to a German or Dutch level

According to this Quality of Life index (which I just looked up and haven't critically assessed, but I doubt the guy did that for his claims either, so I guess it's fine), the Netherlands ranks second and Germany 12th, while the US is in 15th place. In other words: what is he even talking about?

Every day in this country more and more Hispanics marry corn-fed blonde midwesterners and produce pale Castizo children who only speak English, consider themselves basically White, and vote like Irish and Italians.

Jesus fucking Christ, I can't even come up with a funny sneer here because this reads like he just pulled it out of the 1940s Nazi Party's bag of race laws for who's allowed to marry whom, and it's making me feel icky.

I also subscribe to the old fascist idea that adversity gives life meaning. A comfortable and easy life without struggle or conflict is miserable, and just makes you a slave to the hedonic treadmill. When you live for pleasure, no pleasure is ever enough, and continued success will just leave you so pampered that any task that’s challenging or outside your comfort zone will begin to feel onerous.

Ignoring the low-hanging fruit that this guy just flat-out said "well, fascism wasn't all bad", what kind of toxic consumer mentality is ingrained in these chucklefucks that they are seemingly unable to sit their sorry asses down on the couch and just say "yeah, I'm happy right now", to the point where they postulate that other people must feel the same way, because they just can't imagine anyone being different?

Like, seriously, get a hobby or something.

Some time ago, in an article or YouTube video I stumbled upon, someone asked Elon why he got interested in space. He answered that he had read a book that kindled his interest.

I wonder if it was this. It would make so much sense.