Dumbest shit I've heard this week.

Switches that last forever would be interesting. Subscription models and sw updates for a mouse are the very opposite of interesting. I'd pay not to have either.

Old news. They already fixed Steam Deck compatibility.

No joking allowed here. Straight to jail.

I do have to grind through entering a list of numbers

Have you considered a numpad layer? They're great. All of the speed and convenience with none of the wasted space or extra arm movements.

I have a numpad for both hands on my Redox :-)

You're not wrong, but your aggressive wording will surely alienate anyone who otherwise would've had a chance of learning something new or changing their mind. People don't generally respond well to snark or a condescending tone.

And this is a real issue, because companies and fascists are good at telling relatable stories to win people to their side. If we want to have any chance at fighting back, we must utilize the same tools they're using, instead of calling people stupid and thus driving them away.

Factorio was inspired by the Minecraft mods BuildCraft and IndustrialCraft, but yeah, few games have done what Factorio has, and those that have tried never quite reached its level. Sure, there are games that feature automation with complex recipes (Satisfactory, Dyson Sphere Program, Shapez), but only Factorio actually managed to pull off a sense of exponential scale.

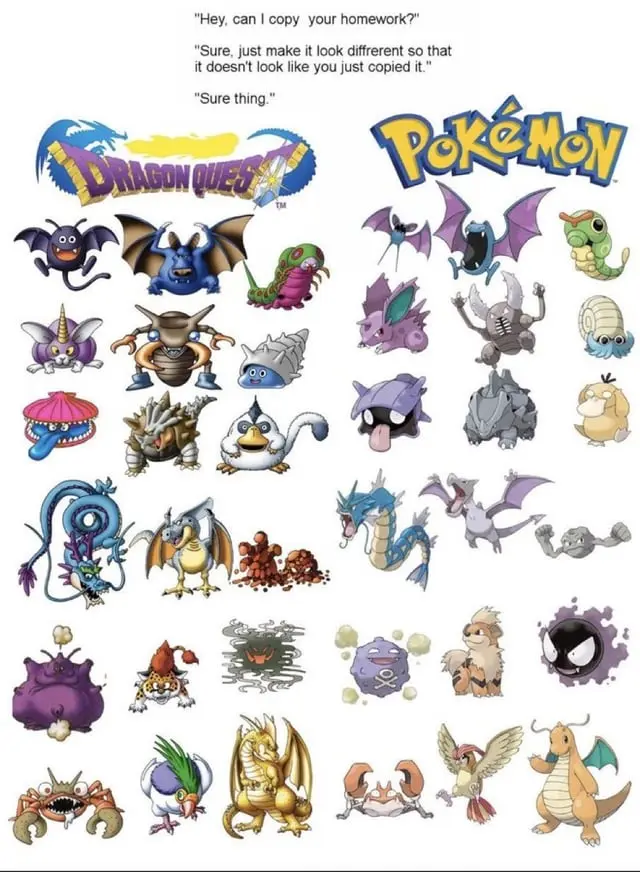

Palworld is a popular monster catching and survival game that has sold over 5 million copies since its release. However, the game's developers have received death threats from upset Pokémon fans who believe the monster designs have been plagiarized from Pokémon. The CEO of Palworld's studio addressed these threats on Twitter, asking people to stop harassing the development team. While some clear similarities exist between Palworld and Pokémon monsters, the future of the game still looks bright if technical issues can be resolved and new content added over time. Overall, Palworld seems to have found great commercial success, but its popularity has also led to some unfortunate harassment of its creators from a minority of Pokémon fans.

By Kagi's summarizer

Are you aware that Pokemon pretty much "stole" the creatures from Dragon Quest - an RPG from 1986?

Okay, I'll bite: how are PgUp and PgDown yellow, when Menu is missing from the list?

Backed by VC, so you know they're just waiting for an exit

That's because ChatGPT and other LLMs are not oracles. They don't take into account whether the text they generate is factually correct, because that's not the task they're trained for. They're only trained to generate the next statistically most likely word, then the next word, and then the next one...
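
To make the "next statistically most likely word" idea concrete, here's a toy sketch. It is not how ChatGPT actually works (real LLMs are neural networks operating on tokens, not word-pair counters); the corpus and the `train_bigrams` / `generate` helpers are made up purely for illustration. It just counts which word follows which, then always picks the most frequent follower:

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def generate(followers, start, length=5):
    """Greedily append the most frequent follower, one word at a time."""
    output = [start]
    for _ in range(length):
        candidates = followers.get(output[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical training text: the wrong statement appears more often
# than the right one.
corpus = "the sky is green . the sky is green . the sky is blue ."
model = train_bigrams(corpus)
print(generate(model, "the", length=3))  # prints "the sky is green"
```

The output is fluent and confidently wrong, simply because "green" followed "is" more often than "blue" did in the training text. Nothing in the procedure checks facts at any point.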

You can take a parrot to a math class, have it listen to lessons for a few months and then you can "have a conversation" about math with it. The parrot won't have a deep (or any) understanding of math, but it will gladly replicate phrases it has heard. Many of those phrases could be mathematical facts, but just because the parrot can recite the phrases, doesn't mean it understands their meaning, or that it could even count 3+3.

LLMs are the same. They're excellent at reciting known phrases, even combining popular phrases into novel ones, but even then the model lacks any understanding behind the words and sentences it produces.

If you give an LLM a task in which your objective is to receive factually correct information, you might as well be asking a parrot - the answer may well be factually correct, but it just as well might be a hallucination. In both cases the responsibility of fact checking falls 100% on your shoulders.

So even though LLMs aren't good for information retrieval, they're exceptionally good at text generation. The ideal use cases for LLMs thus lie in the domain of text generation, not information retrieval or facts. If you recognize and understand this, you're all set to use ChatGPT effectively, because you know what kind of questions it's good for, and what kind of questions it's absolutely useless for.

LW? LimeWire? LessWrong? Luftwaffe? The deprecated chemical symbol for Lawrencium??

Americans sure love their acronyms.