"Science of the Total Environment" journal? 🤣🤣🤣🤣🤣 Too silly even for a 3rd-rate sci-fi film...

It's really embarrassing for the journals that published the papers, too, and they're just as guilty. They charge ridiculously large sums to publish articles (the publication fee for a single article can easily exceed the cost of a high-end business laptop), and they don't even check them properly?

From this GitHub comment:

If you oppose this, don't just comment and complain, contact your antitrust authority today:

- UK:

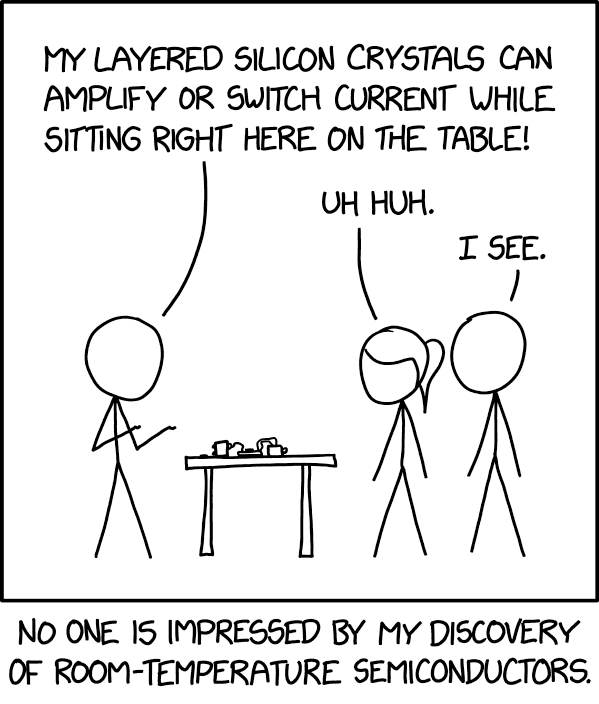

Title:

ChatGPT broke the Turing test

Content:

Other researchers agree that GPT-4 and other LLMs would probably now pass the popular conception of the Turing test. [...]

researchers [...] reported that more than 1.5 million people had played their online game based on the Turing test. Players were assigned to chat for two minutes, either to another player or to an LLM-powered bot that the researchers had prompted to behave like a person. The players correctly identified bots just 60% of the time

A complete contradiction. F*ck Nature, it's become nothing more than the most expensive science gossip magazine.

PS: The Turing test involves comparing a bot with a human (not knowing which is which). So if more and more bots pass the test, this can be the result either of an increase in the bots' Artificial Intelligence, or of an increase in humans' Natural Stupidity.

There's an ongoing protest against this on GitHub, symbolically modifying the code that would implement it in Chromium. See this Lemmy post by the person who came up with the idea, and this GitHub commit. Feel free to click "Review changes" → "Approve". Around 300 people have joined so far.

This image/report doesn't make much sense in itself; it was probably generated by ChatGPT.

- "What makes your job exposed to GPT?" OK, I expect a list of possible answers:

    - "Low wages": OK, having a low wage makes my job exposed to GPT.

    - "Manufacturing": OK, manufacturing makes my job exposed to GPT. ...No, wait, what does that mean?? You mean that if my job is about manufacturing, then it's exposed to GPT? OK, but then shouldn't this be listed under the next question, "What jobs are exposed to GPT?"?

    - ...

    - "Jobs requiring low formal education": what?! The question was "What makes your job exposed to GPT?". From this answer I get that "jobs requiring low formal education make my job exposed to GPT". Or I get that whoever or whatever wrote this knows nothing about syntax or semantics. OK, sorry, you meant "If your job requires low formal education, then it's exposed to GPT". But then shouldn't this answer also be listed under the next question??

- "What jobs are exposed to GPT?"

    - "Athletes". Well, semantically speaking, "athletes" is not a job; maybe "athletics" is. But who gives a shirt about semantics? There's ChatGPT now, after all.

    - The same goes for the rest. "Stonemasonry" is a job; "stonemasons" are the people who do that job. The question could at least have been "Which job categories are exposed to GPT?".

    - "Pile driver operators": this very specific job category is thankfully Low Exposure. "What if I'm a pavement operator instead?" Sorry, you're out of luck then.

    - "High exposure: Mathematicians". Mmm... wait, wait. Didn't you say in the previous question that "Science skills" and "Critical thinking skills" were "Low Exposure"?

Icanhazcheezeburger? 🤣

(Just to be clear, I'm not making fun of people who do any of the specialized, difficult, and often risky jobs mentioned above. I'm making fun of the fact that the infographic is so randomly and inexplicably specific in some places.)

Understandably, it has become an increasingly hostile or apathetic environment over the years. If one checks questions from 10 years ago or so, one generally sees people eager to help one another.

Now they often expect you to have searched through possibly thousands of questions before asking one, and they immediately accuse you if you've missed a relevant one. That's unfair, because a non-expert can easily miss the connection between two questions phrased slightly differently.

On top of that, some of those questions and their answers are years old, so one wonders if their answers still apply. Often they don't. But again it feels like you're expected to know whether they still apply, as if you were an expert.

Of course, it isn't all like that; there are still kind and helpful people there. It's just a statistical trend.

Possibly the site should implement an archival policy, where questions and answers are deleted or archived after a couple of years or so.

I'm not entirely sure about the logic here, or about the conclusions it perhaps hints at. The internet itself is a network with major CSAM problems (so maybe we shouldn't use it?).

The number of people protesting against them on their "Issues" page is amazing. The devs have now blocked the creation of new issues and of new comments on existing ones.

It's funny how the "explainer" presents this as something done for the "user", when it's clearly not developed for the "user". I wouldn't accept something like this even if it were developed by a government, let alone by Google.

I have just reported their repository to GitHub as malware, as an act of protest, since they closed the possibility of submitting issues or commenting.

On top of that, the actual results are behind a paywall and may be very iffy. It sounds like there were only 12 people in the 6-hour group and in each of the other groups, with no information about other traits such as sex, smoking or other habits, and so on. Those numbers are too small to rule out statistical fluctuations. And the word "significant" in the abstract may indicate that they used p-values to quantify their results, which a large part of the statistics community today considers iffy...
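To give a rough idea of how large those fluctuations can be with 12 people per group, here's a quick Python simulation. It's only a sketch under made-up assumptions (a hypothetical trait that is normally distributed with mean 0 and standard deviation 1; nothing here is taken from the paper): it draws many random groups of a given size and measures how much the group average jumps around from one group to the next. Theory says that spread should be about 1/√n standard deviations, i.e. roughly 0.29 for n = 12.

```python
import random
import statistics


def spread_of_group_means(n, trials=2000, seed=0):
    """Estimate how much the average of an n-person group varies by chance.

    Hypothetical population: a trait ~ Normal(mean 0, sd 1).
    Draws `trials` independent groups of size n and returns the
    standard deviation of their group means.
    """
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(n))
        for _ in range(trials)
    ]
    return statistics.stdev(means)


for n in (12, 100, 1000):
    # Simulated spread next to the theoretical value 1 / sqrt(n)
    print(n, round(spread_of_group_means(n), 3), round(1 / n ** 0.5, 3))
```

So, purely by chance, the averages of two 12-person groups drawn from the very same population differ by more than about 0.4 standard deviations roughly a third of the time (the difference of two such means has standard deviation √(2/12) ≈ 0.41).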