Hello Everyone!

Let's start with the good: we recently hit over 700 registered accounts! Hello! I hope you are doing well on your fediverse journey!

We have also hit...

(15 when I posted this) total individual contributors! Every time I see an email saying we have another contributor it makes me feel all warm and fuzzy inside! The simple fact that together we are making something special here really touches my soul.

If you feel like joining us and keeping the server online and filled to the brim with coffee, you can see our open collective here: Reddthat Open Collective.

0.18 (& Downtime?)

As I have said in my community post (over here!), I wanted to wait for 0.18.1 to come out so I did not have to fight off a wave of bots now that there is no longer a captcha. I also didn't want to enable registration applications, because in my opinion that just ruins the whole on-boarding experience.

So, where does that leave us?

I say, screw those bots! We are going to use 0.18.0 and we are going to rocket our way into 0.18.1 ASAP. 🚀

and.... it's already deployed! Enjoy the new UI and the lack of annoyances on the Jerboa application!

If you are getting any weird UI artifacts, hold Control (or command) and press the refresh button, as it is a browser cache issue.

~~I'm going to keep the signup process the same but monitor it to the point of helicopter parenting. So if we get hit by bots we'll have to turn on the Registration Application, which I'm really hoping we won't have to. So anyone out there... let's just be friends, okay?~~

Well... it looks like we cannot be friends! Application Registration is now turned on. Sorry everyone. I guess we can't have nice things!

Weren't we going to wait?

Moving to 0.18 was actually forced by me (Tiff) as I upgraded the Lemmy app running on our production server from 0.17.4 to 0.18.0. This update caused the migrations to be performed against the database. The most recent backup I had at the time of the unplanned upgrade was from about an hour before that. So rolling the database back was certainly not a viable option, as I didn't want to lose an hour's worth of your hard-typed comments & posts.

The mistake, or "root cause", was an environment variable that was set in my deploy scripts. I utilise environment variables in our deployments to ensure deployments can be replicated across our "dev" server (my local machine) and our "prod" server (the one you are reading this post on now!).

This has been fixed up and I'm very sorry for pushing the latest version when I said we were going to wait. I am also going to investigate how we can better achieve a rollback for the database migrations if we need to in the future.
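For anyone curious how a slip like this can be guarded against, here is a minimal sketch in Python. It is not our actual deploy script: the variable names `LEMMY_VERSION` and `ALLOW_UPGRADE`, and the pinned value, are hypothetical and only illustrate the idea of refusing to deploy a version other than the pinned one unless the upgrade is explicitly confirmed.

```python
import os

# Hypothetical pin: the version production is expected to run.
PINNED_VERSION = "0.17.4"


def resolve_version(env, pinned=PINNED_VERSION):
    """Return the version to deploy, refusing unconfirmed changes to the pin.

    `env` is any mapping of environment variables (for example os.environ).
    LEMMY_VERSION and ALLOW_UPGRADE are made-up names for this sketch.
    """
    requested = env.get("LEMMY_VERSION", pinned)
    confirmed = env.get("ALLOW_UPGRADE") == "1"
    if requested != pinned and not confirmed:
        raise RuntimeError(
            f"Refusing to deploy {requested}: pinned version is {pinned}. "
            "Set ALLOW_UPGRADE=1 to confirm the upgrade."
        )
    return requested


def version_from_environment():
    """Convenience wrapper that reads the real process environment."""
    return resolve_version(os.environ)
```

With a check like this, a leftover variable from a dev session would stop the deploy with an error instead of quietly running the database migrations.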

Pictures (& Videos)

The reason I was testing our deployments was to fix our pictures service (called pictrs). As I've said before (in a non-announcement way), we are slowly using more and more space on our server to store all your fancy pictures, as well as all the pictures from the instances we federate with. If we want to ensure stability and future expansion, we need to migrate our pictures from sitting on the same server to object storage. The latest version of pictrs now has that capability, and it also has the capability of hosting videos!

Now before you go and start uploading videos, there are limits! We decided to limit videos to 400 frames, which is about 15 seconds' worth of video. This is due to video file sizes being huge compared to pictures, as well as the associated bandwidth that comes with sharing video content. There is a reason there are not hundreds of video sharing sites.
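Because the limit is counted in frames rather than seconds, the actual clip length depends on the frame rate of your video. The small Python sketch below only does that arithmetic; the frame rates listed are common examples I picked for illustration, not settings taken from pictrs.

```python
MAX_FRAMES = 400  # the frame limit mentioned above


def clip_seconds(frames, fps):
    """Length in seconds of a clip with `frames` frames played at `fps`."""
    return frames / fps


# Example frame rates (assumed for illustration).
for fps in (24, 30, 60):
    print(f"{fps} fps: {clip_seconds(MAX_FRAMES, fps):.1f} s")
```

So "about 15 seconds" holds for video at roughly 24 to 30 frames per second, while a 60 fps clip will hit the limit in under 7 seconds.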

Object Storage Migration

I would like to thank the 5 people who have donated on a monthly recurring basis, because without knowing there is a constant income stream, using a CDN and object storage would not be feasible.

Over the next week I will test the migration from our filesystem to a couple of object storage hosting companies and ensure they can scale up with us, with Backblaze being our first choice.

Maintenance Window

- Date: 28th of June

- Start Time: 00:05 UTC

- End Time: 02:00 UTC

- Expected Downtime: the full 2 hours!

If all goes well with our testing, I plan to perform the migration on the 28th of June around 00:05 UTC. We currently have just under 15GB of images, so I expect it to take at most 1 hour, with the actual downtime closer to 30-40 minutes, but knowing my luck it will be the whole hour.
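As a rough sanity check on those numbers, the Python sketch below works out the sustained transfer rate each estimate implies. It assumes 1 GB = 1000 MB and a steady upload with no per-file overhead, which is optimistic for a large number of small images.

```python
def required_throughput_mb_s(size_gb, minutes):
    """Sustained MB/s needed to move `size_gb` gigabytes in `minutes` minutes.

    Assumes decimal units (1 GB = 1000 MB) and ignores per-file overhead.
    """
    return size_gb * 1000 / (minutes * 60)


IMAGES_GB = 15  # "just under 15GB", rounded up

for minutes in (30, 40, 60):
    rate = required_throughput_mb_s(IMAGES_GB, minutes)
    print(f"{minutes} min: {rate:.2f} MB/s")
```

In other words, the 30-40 minute estimate needs somewhere between about 6 and 8.5 MB/s sustained, and the full hour needs a little over 4 MB/s.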

Closing

Make sure you follow !community@reddthat.com for any extra non-official-official posts, or if you just want to talk about what you've been doing on your weekend!

Something I cannot say enough is thank you all for choosing Reddthat for your fediverse journey!

I hope you had a pleasant weekend, and here's to another great week!

Thanks all!

Tiff

This is the hardest part, as you would need to either have both an onion address and a standard domain, or be a tor-only federation.

You can easily create a server and allow tor users to use it; unless a Lemmy server actively blocks tor, you'd be welcome to join through it. But federation from clearnet to onion cannot happen. It's the same reason why email hasn't taken off in onionland. The only way email works there is when the providers actively re-map a clearnet domain to an onion domain.

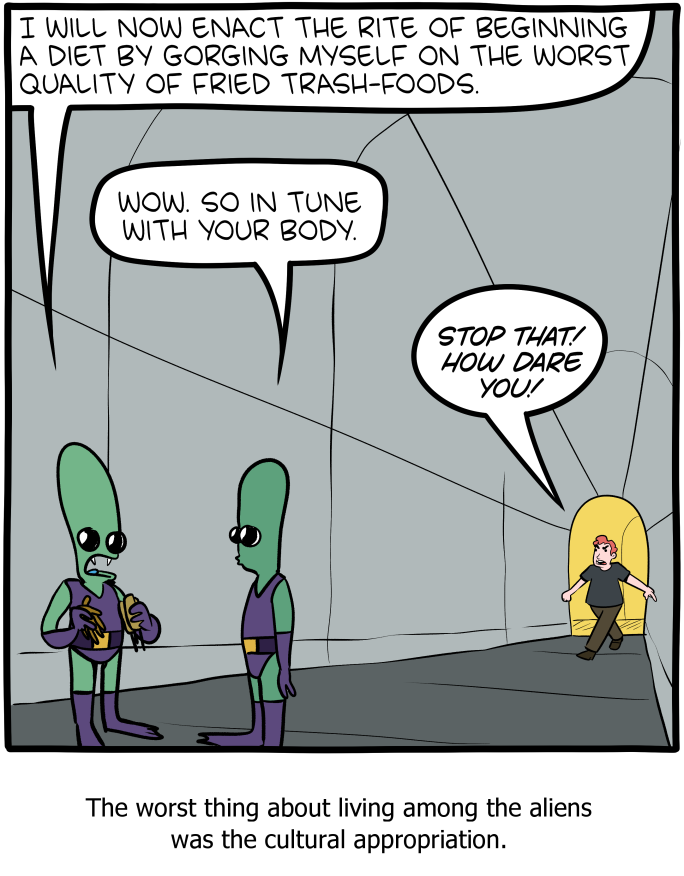

This is what Lemmy would need to do. But then you would have people who could sign up continuously over tor and wreak havoc on the fediverse with nothing really stopping them. You would then have onion users creating content that would be federated out to other instances. User-generated content from tor users is also ... not portrayed in the best light.

I'm sure someone will eventually create an onion Lemmy instance, but it has its own problems to deal with.

This is especially true for lack of moderation tools, automated processes, and spammers who already are getting through the cracks.