- KVM/QEMU/Libvirt/virt-manager on Debian 12 for a minimal installation that lets you choose backup tools and the like on your own.

- Proxmox for a mature KVM-based virtualizer with built in tools for backups, clustering, etcetera. Also supports LXC. https://github.com/proxmox

- Incus for LXC/KVM virtualization - younger solution than Proxmox and more focused on LXC. https://github.com/lxc/incus

/thread

This is my go-to setup.

I try to stick with libvirt/virsh when I don't need any graphical interface (integrates beautifully with ansible [1]), or when I don't need clustering/HA (libvirt does support "clustering" at least in some capability, you can live migrate VMs between hosts, manage remote hypervisors from virsh/virt-manager, etc). On development/lab desktops I bolt virt-manager on top so I have the exact same setup as my production setup, with a nice added GUI. I heard that cockpit could be used as a web interface but have never tried it.
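For anyone curious what remote management and live migration look like in practice, here is a rough sketch that only builds the `virsh` command lines and prints them. The host names (`hv1.example.lan`, `hv2.example.lan`) and the domain name `web01` are made up for illustration, and it assumes SSH access as root to the system-level libvirt daemon and disks on storage shared by both hosts.

```python
import shlex


def libvirt_uri(host: str, user: str = "root") -> str:
    """Build a qemu+ssh URI for the system-level libvirt daemon on a remote host."""
    return f"qemu+ssh://{user}@{host}/system"


def list_domains_cmd(host: str) -> list[str]:
    """virsh command listing all domains (running or shut off) on a remote hypervisor."""
    return ["virsh", "--connect", libvirt_uri(host), "list", "--all"]


def live_migrate_cmd(domain: str, dest_host: str) -> list[str]:
    """virsh command to live-migrate a running local domain to dest_host.

    --persistent keeps the domain defined on the destination afterwards.
    Assumption: the guest's disks sit on storage both hosts can reach.
    """
    return ["virsh", "migrate", "--live", "--persistent", domain, libvirt_uri(dest_host)]


if __name__ == "__main__":
    # Hypothetical names; the commands are printed, not executed.
    print(shlex.join(list_domains_cmd("hv1.example.lan")))
    print(shlex.join(live_migrate_cmd("web01", "hv2.example.lan")))
```

The same `qemu+ssh://` URI can be added as a connection in virt-manager, which is how a single desktop instance ends up managing several hypervisors.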

Proxmox on more complex setups (I try to manage it using ansible/the API as much as possible, but the web UI is a nice touch for one-shot operations).
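As an illustration of driving Proxmox through its API rather than the web UI, the sketch below builds (but does not send) a request that would start a VM, authenticating with an API token. The host, node name, VM ID, token ID and secret are all placeholders, and the helper names are invented for this example.

```python
import urllib.request


def pve_request(host, path, token_id, secret, method="GET"):
    """Build a request against the Proxmox VE REST API without sending it.

    token_id has the form 'user@realm!tokenname'; secret is the token value.
    """
    url = f"https://{host}:8006/api2/json{path}"
    req = urllib.request.Request(url, method=method)
    # API tokens are passed in the Authorization header.
    req.add_header("Authorization", f"PVEAPIToken={token_id}={secret}")
    return req


def start_vm_request(host, node, vmid, token_id, secret):
    """POST /nodes/{node}/qemu/{vmid}/status/start asks Proxmox to start a VM."""
    path = f"/nodes/{node}/qemu/{vmid}/status/start"
    return pve_request(host, path, token_id, secret, method="POST")


if __name__ == "__main__":
    # Placeholder values; nothing is sent over the network here.
    req = start_vm_request("pve.example.lan", "pve1", 100,
                           "automation@pve!ansible", "SECRET")
    print(req.get_method(), req.full_url)
```

Actually sending it would go through something like `urllib.request.urlopen(req)`, with extra care needed if the host still uses its default self-signed certificate.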

Re incus: I don't know for sure yet. I have an old LXD setup at work that I'd like to migrate to something else, but I figured that since both libvirt and proxmox support management of LXC containers, I might as well consolidate and use one of these instead.

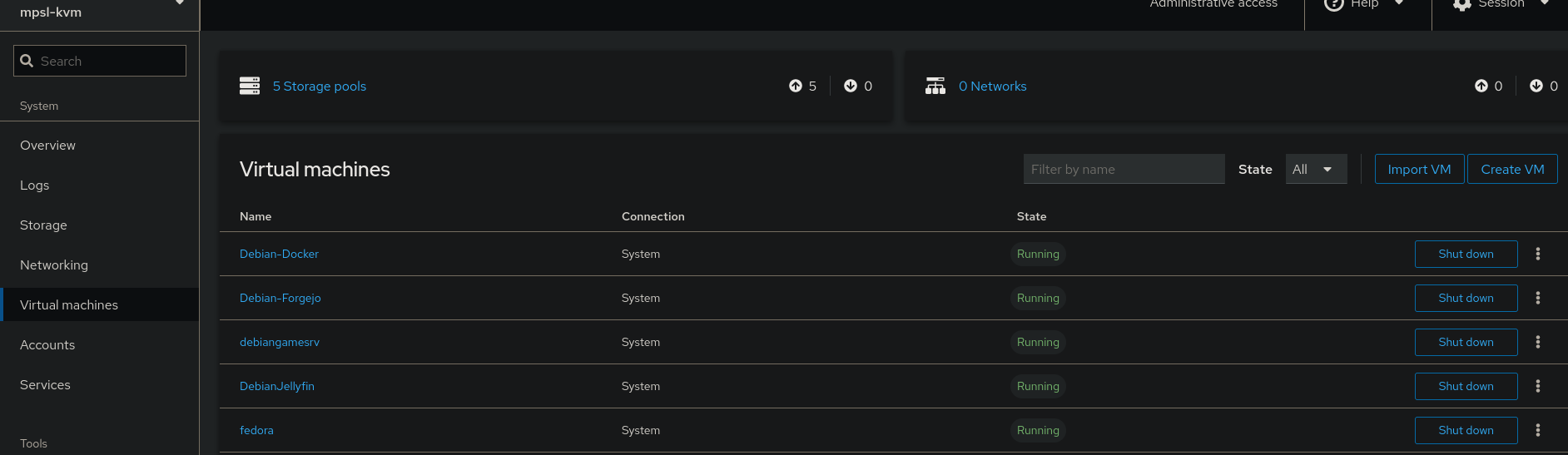

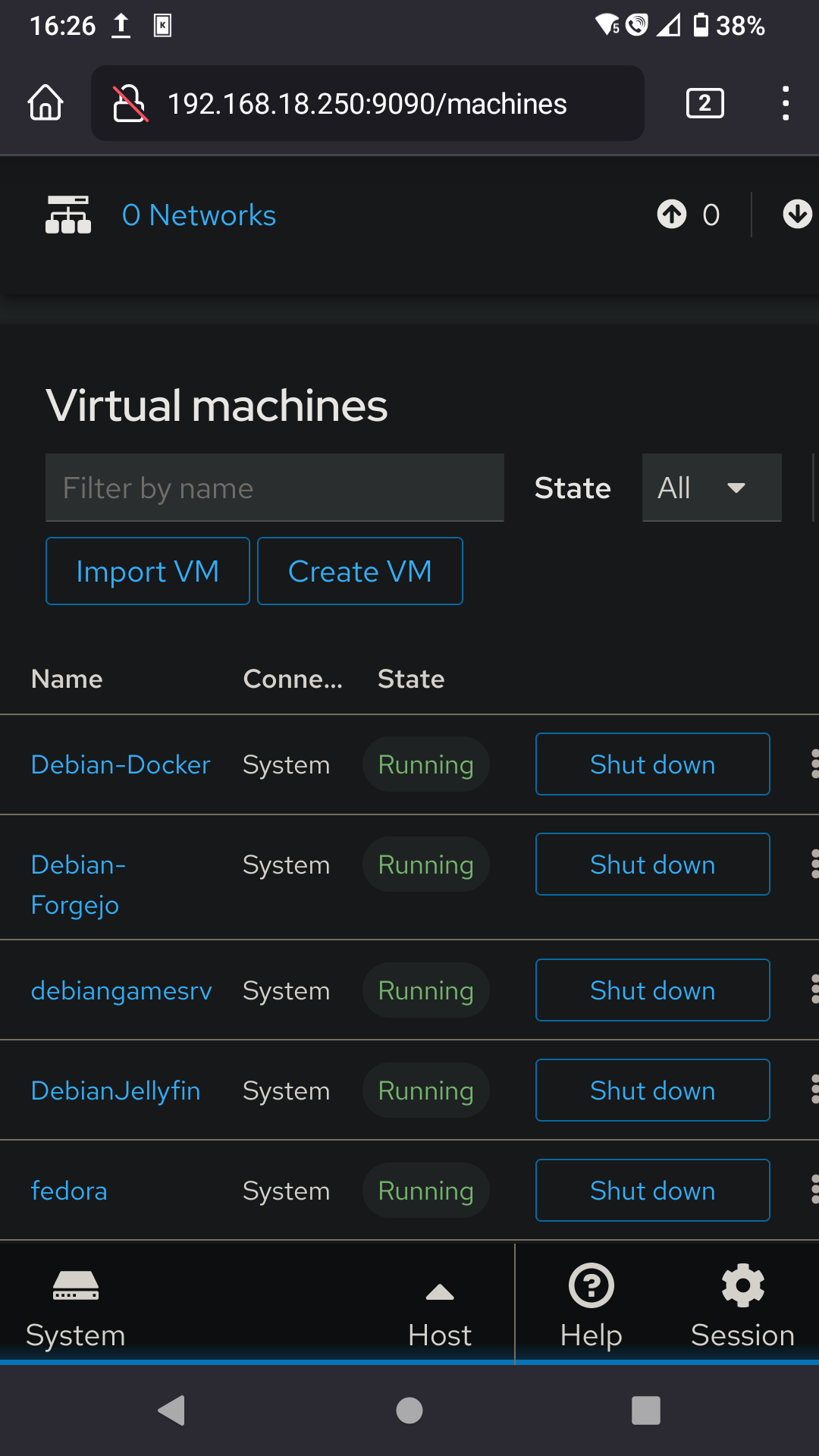

I use cockpit and my phone to start my virtual fedora, which has pcie passthrough on gpu and a usb controller.

Desktop:

Mobile:

We use cockpit at work. It's OK, but it definitely feels limited compared to Proxmox or Xen Orchestra.

Red Hat's focus is really on Openstack, but that's more of a cloud virtualization platform, so not all that well suited for home use. It's a shame because I really like Cockpit as a platform. It just needs a little love in terms of things like the graphical console and editing virtual machine resources.

Ooh, didn't know libvirt supported clusters and live migrations...

I've just setup Proxmox, but as it's Debian based and I run Arch everywhere else, then maybe I could try that... thanks!

In my experience and for my mostly basic needs, major differences between libvirt and proxmox:

- The "clustering" in libvirt is very limited (no HA, automatic fencing, Ceph integration, etc., at least out of the box). I basically use it to 1. admin multiple libvirt hypervisors from a single libvirt/virt-manager instance and 2. migrate VMs between instances (they need to be using shared storage for disks, etc.), but it covers 90% of my use cases.

- On proxmox hosts I let proxmox manage the firewall, on libvirt hosts I manage it through firewalld like any other server (+ libvirt/qemu hooks for port forwarding).

- On proxmox I use the built-in template feature to provision new VMs from a template, on libvirt I do a mix of `virt-clone` and `virt-sysprep`.

- On libvirt I use `virt-install` and a Debian preseed.cfg to provision new templates, on proxmox I do it... well... manually. But both support cloud-init based provisioning so I might standardize to that in the future (and ditch templates).
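A minimal sketch of what the clone-then-sysprep step on libvirt could look like, assuming the template is a shut-off domain and the clone should take its own name as hostname. The domain names are hypothetical, and the script only builds and prints the commands rather than running them.

```python
import shlex


def clone_cmd(template: str, new_name: str) -> list[str]:
    """virt-clone copies the template's definition and disks.

    --auto-clone lets virt-clone pick the new disk paths itself.
    """
    return ["virt-clone", "--original", template, "--name", new_name, "--auto-clone"]


def sysprep_cmd(domain: str, hostname: str) -> list[str]:
    """virt-sysprep resets machine-specific state in a shut-off guest and sets its hostname."""
    return ["virt-sysprep", "--domain", domain, "--hostname", hostname]


def provision_cmds(template: str, new_name: str) -> list[list[str]]:
    """Commands to run in order: clone the template, then clean up the clone."""
    return [clone_cmd(template, new_name), sysprep_cmd(new_name, new_name)]


if __name__ == "__main__":
    # Hypothetical template and VM names.
    for cmd in provision_cmds("debian12-template", "web02"):
        print(shlex.join(cmd))
```

Running sysprep against the clone rather than the template keeps the template itself untouched, though doing it the other way round (a pre-cleaned template) is just as plausible.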

LXD/Incus provides a management and automation layer that really makes things work smoothly essentially replacing Proxmox. With Incus you can create clusters, download, manage and create OS images, run backups and restores, bootstrap things with cloud-init, move containers and VMs between servers (even live sometimes) and those are just a few things you can do with it and not with pure KVM/libvirt. Also has a WebUI for those interested.

A big advantage of LXD is the fact that it provides a unified experience to deal with both containers and VMs, no need to learn two different tools / APIs as the same commands and options will be used to manage both. Even profiles defining storage, network resources and other policies can be shared and applied across both containers and VMs.
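To make the "same commands for both" point concrete, here is a small sketch that builds `incus launch` command lines for a container and for a VM; the only difference is the `--vm` flag. The instance names and the `webservers` profile are invented for the example, and the image alias assumes the default `images:` remote is available.

```python
import shlex


def incus_launch_cmd(image, name, vm=False, profiles=()):
    """Build an 'incus launch' command; the same call shape serves containers and VMs."""
    cmd = ["incus", "launch", image, name]
    if vm:
        cmd.append("--vm")  # launch a virtual machine instead of a container
    for profile in profiles:
        cmd += ["--profile", profile]  # profiles apply to either instance type
    return cmd


if __name__ == "__main__":
    # Hypothetical instance and profile names; commands are printed, not run.
    shared = ("default", "webservers")
    print(shlex.join(incus_launch_cmd("images:debian/12", "c1", profiles=shared)))
    print(shlex.join(incus_launch_cmd("images:debian/12", "v1", vm=True, profiles=shared)))
```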

Incus isn’t about replacing existing virtualization techniques such as QEMU, KVM and libvirt, it is about augmenting them so they become easier to manage at scale and overall more efficient. It plays on the land of, let’s say, Proxmox and I can guarantee you that most people running it today will eventually move to Incus and never look back. It works way better, true open-source, no bugs, no holding back critical fixes for paying users and way less overhead.

My understanding is that for proper cluster management you slap Pacemaker on there.

This is what I would recommend too - QEMU + libvirt with Sanoid for automatic snapshot management. Incus is also a solid option.

Proxmox works well for me

proxmox

This is the way

If you're running mostly Linux VMs, Proxmox is really good. It's based on KVM and has a really nice feature set.

Windows guests also run fine on KVM, use the Virtio drivers from Fedora project.

I've used Hyper-V and in fact moved away from ESXi long ago. VMWare had amazing features but we could not justify the ever-increasing costs. Hyper-V can do just about anything VMWare can do if you know Powershell.

Seconded for Hyper-V, and MUCH easier to patch the free edition than ESXi.

I use it with WAC on my home server and it's good enough for anything I need to do. Easy to create VMs using that UI, PS not even needed.

Another vote for Hyper-V. Moved to it from ESXi at home because I had to manage a LOT of Hyper-V hosted machines at work, so I figured I might as well get as much exposure to it as I could. Works fine for what I need.

Qemu/virt manager. I've been using it and it's so fast. I still need to get the clipboard sharing working but as of right now it's the best hypervisor I've ever used.

I love it. Virtmanager connecting over ssh is so smooth.

I'm pretty happy with XCP-ng with their XenOrchestra management interface. XenOrchestra has a free and enterprise version, but you can also compile it from source to get all the enterprise features. I'd recommend this script: https://github.com/ronivay/XenOrchestraInstallerUpdater

I'd say it's a slightly more advanced ESXi with vCenter and less confusing UI than Proxmox.

I actually moved everything to docker containers at home... Not an apples to apples, but I don't need so many full OSs it turns out.

At work we have a mix of things running right now to see. I don't think we'll land on ovirt or openstack. It seems like we'll bite the cost bullet and move all the important services to amazon.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| ESXi | VMWare virtual machine hypervisor |
| HA | Home Assistant automation software |
| ~ | High Availability |
| LTS | Long Term Support software version |
| LXC | Linux Containers |
| ZFS | Solaris/Linux filesystem focusing on data integrity |
| k8s | Kubernetes container management package |

[Thread #540 for this sub, first seen 24th Feb 2024, 11:35]

I know everyone says to use Proxmox, but it's worth considering xcp-ng as well.

In my "testing" at work and in private, PVE is miles ahead of xcp-ng in terms of performance. Sure, xcp-ng does its thing very stably, but everything else... Proxmox is faster.

OOTL and someone who only uses a vm once every several years for shits & grins: What happened to vmware?

As part of the transition of perpetual licensing to new subscription offerings, the VMware vSphere Hypervisor (Free Edition) has been marked as EOGA (End of General Availability). At this time, there is not an equivalent replacement product available.

For further details regarding the affected products and this change, we encourage you to review the following blog post: https://blogs.vmware.com/cloud-foundation/2024/01/22/vmware-end-of-availability-of-perpetual-licensing-and-saas-services/

Whelp..boo-urns. :(

If you are dipping toes into containers with kvm and proxmox already, then perhaps you could jump into the deep end and look at kubernetes (k8s).

Even though you say you don't need production quality, it actually does a lot for you and you just need to learn a single API framework which has really great documentation.

Personally, when I am choosing a new service to host, one of my first metrics in that decision is how well it is documented.

You could also go the simple route and use docker to make containers. However making your own containers is optional as most services have pre built ones that you can use.

You could even use auto scaling to run your cluster with just 1 node if you don't need it to be highly available with a lot of 9s in uptime.

The trickiest thing with K8s is the networking, certs and DNS but there are services you can host to take care of that for you. I use istio for networking, cert-manager for certs and external-dns for DNS.

I would recommend trying out k8s first on a cloud provider like digital ocean or linode. Managing your own k8s control plane on bare metal has its own complications.

There are also full-suites like rancher which will abstract away a lot of the complexity

K8s is great, but you're changing the subject and not answering OP's question. Containers =/= VMs.

For home have a crack at KVM with front ends like proxmox or canonical lxd manager.

In an enterprise environment take a look at Hyper-V or if you think you need hyper converged look at Nutanix.

Coming from a decade of VMware ESXi and then a few years as a certified Nutanix user, I was almost instantly at home clustering Proxmox, then added Ceph across my hosts and went 'wtf did I sell Nutanix for'. I was already running FreeNAS (later TrueNAS) by then so I was already converted to hosting on Linux, but seriously I was impressed.

Business case: With what you save on licensing for Nutanix or vsan, you can place all nvme ssd and run ceph.
