> I will find someone who I consider better than me in relevant ways, and have them provide the genetic material. I think that it would be immoral not to, and that it is impossible not to think this way after thinking seriously about it.

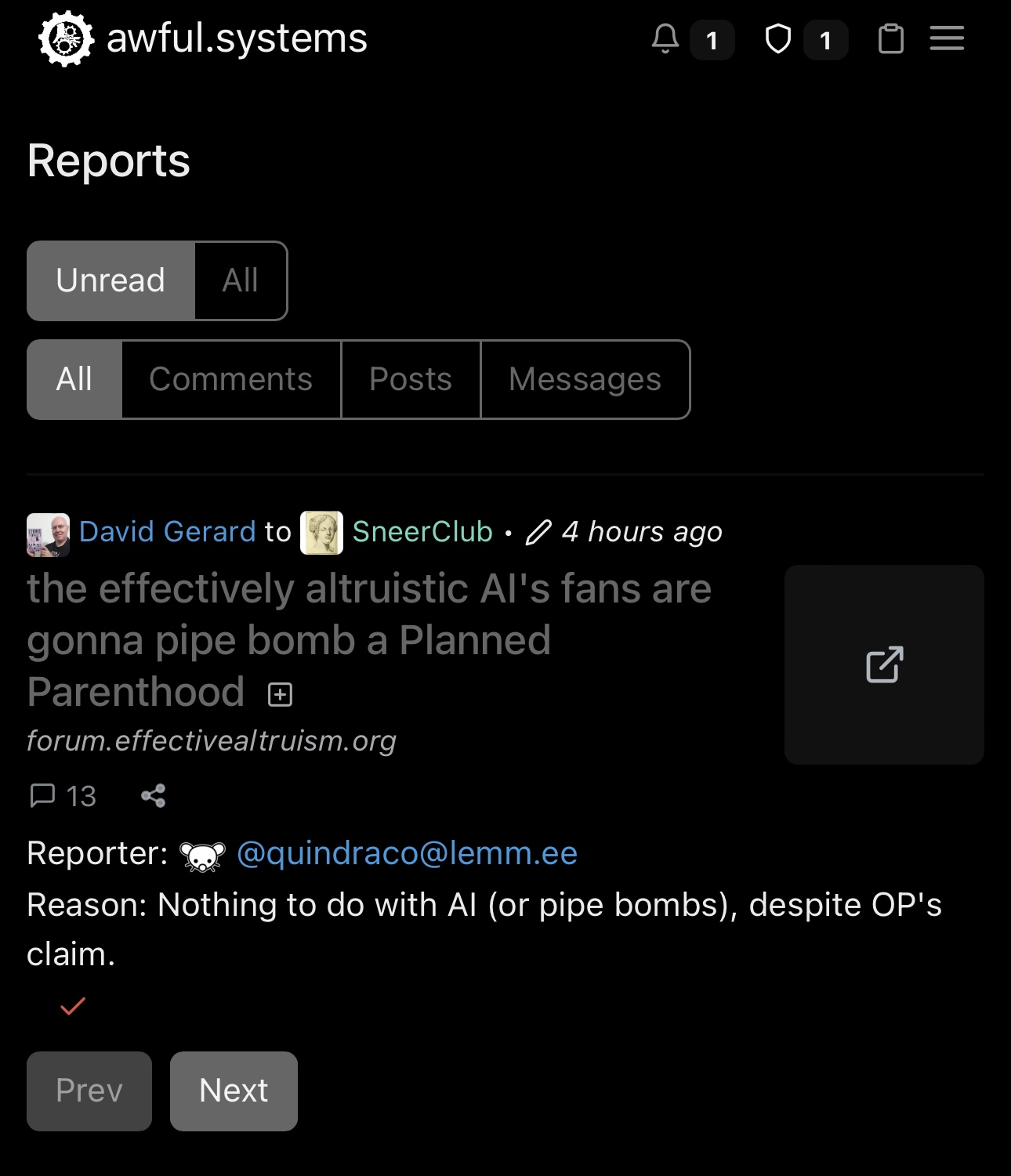

we’re definitely not a cult, I don’t know why anyone would think that

> Consider it from your child’s perspective. There are many people who they could be born to. Who would they pick? Do you have any right to deny them the father they would choose? It would be like kidnapping a child – an unutterably selfish act. You have a duty to your children – you must act in their best interest, not yours.

I just don’t understand how so many TESCREAL thoughts and ideas fit this broken fucking pattern. “have you thought about X? but have you really thought about it? you must not have, cause if you did you would agree it was Y!”

and you really can tell you’re dealing with a cult when they start from the premise that a child who doesn’t exist yet has a perspective. these fucking weirdos will have heaven and hell by any means, no matter how much math and statistics they have to abuse past the breaking point to do it.

and just like with any religious fundamentalist, the child doesn’t have any autonomy. how could they, if all their behavior has already been simulated to perfection? there’s no room for an imperfect child’s happiness; for familial bonding; for normal human shit. all that must be cope, cause it doesn’t fit into a broken TESCREAL worldview.

this goes a long way towards explaining why computer pseudoscience (like a fundamental ignorance of algorithmic efficiency and the implications of the halting problem) is so common and even celebrated among lesswrongers and other TESCREALs who should theoretically know better.