The man probably went insane after psychedelic use, and I have never noticed @BasedBeffJezos advocating for fixing the system by shooting individual executives. It's a great shot at drawing a plausible-sounding connection, but I think it's not valid criticism.

wait I’m confused, to be a more effective TESCREAL am I not supposed to be microdosing psychedelics every day? you’re sending mixed signals here, yud (also lol @ the pure Ronald Reagan energy of going “yep obviously drugs just make you murderously insane” based on nothing but vibes and the need to find a scapegoat that isn’t the consequences of your own ideology)

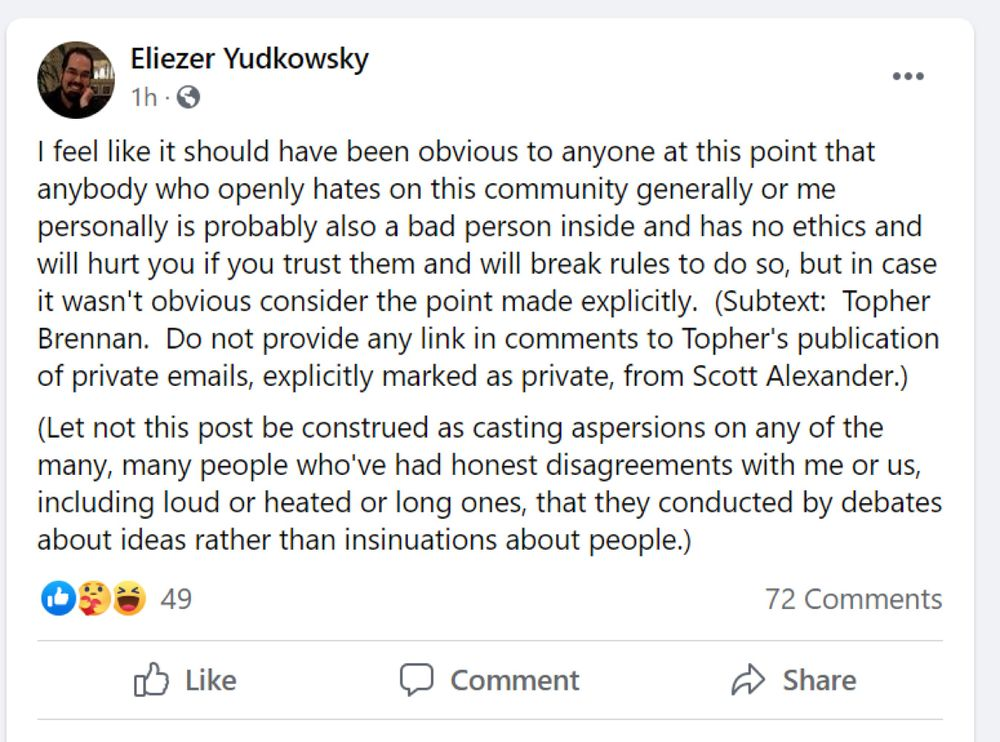

also, could you imagine being this fucking embarrassing? “a post I didn’t immediately understand appeared on my screen so instead of looking any of the words up I decided to be a gigantic fucking asshole instead” did you expect applause for coming in here and shitting on the carpet?