cross-posted from: https://sh.itjust.works/post/998307

Hi everyone. I wanted to share some Lemmy-related activism I’ve been up to. I got really interested in the apparent surge of bot accounts that happened in June. Recently, I was able to play a small part in removing some of them. Hopefully by getting the word out we can ensure Lemmy is a place for actual human users and not legions of spam bots.

First some background. This won't be new to many of you, but I'll include it anyway. During the week of June 18 to June 25, as the Reddit migration to Lemmy was in full swing, there was a surge of suspicious account creation on Lemmy instances that had open registration and no captcha or email verification. Hundreds of thousands of accounts appeared and then sat inactive. We can only guess what they’re for, but I assume they are being planted for future malicious use (spamming ads, subversive electioneering, influencing upvotes to drive content to our front pages, etc.)

If you look at the stats on The Federation you might notice that even the shape of the Total Users graph is the same across many instances. User numbers ramped up on June 18, grew almost linearly throughout the week, and peaked on June 24. (I’m puzzled by the slight drop at the end. I assume it's due to some smoothing or rate-sensitive averaging that The Federation uses for the graphs?)

Here are total user graphs for a few representative instances showing the typical shape:

Clearly this is suspicious, and I wasn’t the only one to notice. Lemmy.ninja documented how they discovered and removed suspicious accounts from this time period: (https://lemmy.ninja/post/30492). Several other posts detailed how admins were trying to purge suspicious accounts. From June 24 to June 30 The Federation showed a drop in the total number of Lemmy users from 1,822,313 to 1,589,412. That’s 232,901 suspicious accounts removed! Great success! Right?

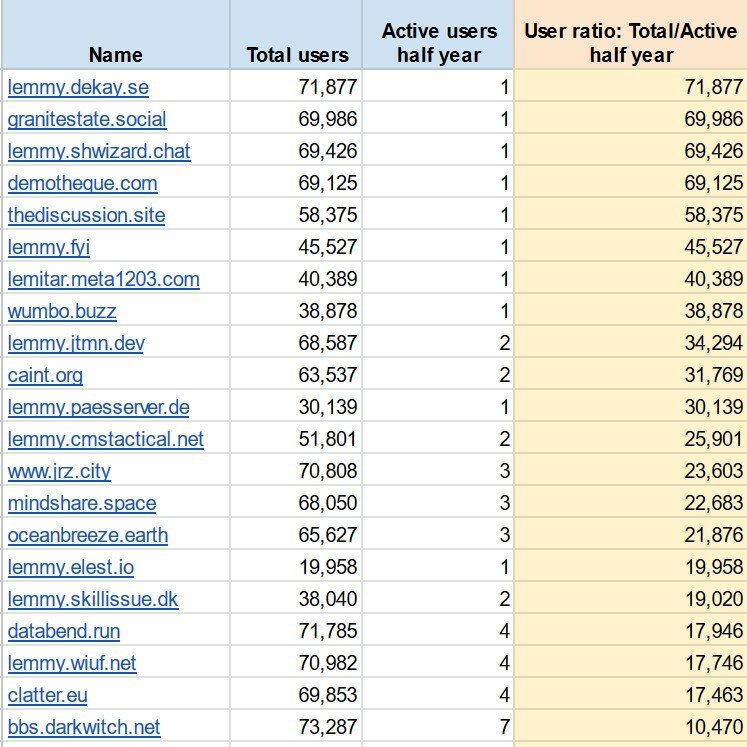

Well, no, not yet. There are still dozens of instances with wildly suspicious user numbers. I took data from The Federation and compared total users to active users on all listed instances. The instances in the screenshot below collectively have 1.22 million accounts but only 46 active users. These look like small self-hosted instances that have been infected by swarms of bot accounts.
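For anyone who wants to repeat the comparison, here is a rough sketch of the kind of filter I mean. It is not the exact script I used: the `InstanceStats` record, the function names, the sample numbers, and the cutoff are all made up for illustration, and you would have to fill in real figures from The Federation yourself.

```python
from dataclasses import dataclass


@dataclass
class InstanceStats:
    """Hypothetical record: one row of per-instance stats."""
    domain: str
    total_users: int
    active_users: int


def accounts_per_active_user(stats: InstanceStats) -> float:
    # Treat zero active users as one so the ratio is always defined.
    return stats.total_users / max(stats.active_users, 1)


def flag_suspicious(instances, min_ratio):
    """Return instances at or above min_ratio, worst ratio first."""
    flagged = [s for s in instances if accounts_per_active_user(s) >= min_ratio]
    return sorted(flagged, key=accounts_per_active_user, reverse=True)


# Made-up sample data, not real instances.
sample = [
    InstanceStats("small-a.example", 50_000, 2),
    InstanceStats("healthy.example", 12_000, 3_000),
    InstanceStats("small-b.example", 30_000, 0),
]

# The cutoff is an illustrative choice, not a proven bot detector.
flagged = flag_suspicious(sample, min_ratio=10_000)
for s in flagged:
    print(s.domain, s.total_users, s.active_users)
```

A high ratio only says an instance deserves a closer look. The admin still has to check the database before deciding the accounts are bots.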

As of this writing The Federation shows approximately 1.9 million total Lemmy accounts. That means the majority of all Lemmy accounts are sitting dormant on these instances, potentially to be used for future abuse.

This bothers me. I want Lemmy to be a place where actual humans interact. I don’t want it to become another cesspool of spam bots and manipulative shenanigans. The internet has enough places like that already.

So, after stewing on it for a few days, I decided to do something. I started messaging admins at some of these instances, pointing out their odd account numbers and referencing the lemmy.ninja post above. I suggested they consider removing the suspicious accounts. Then I waited.

And they responded! Some admins were simply unaware of their inflated user counts. Some had noticed but assumed it was a bug causing Lemmy to report an incorrect number. Others weren’t sure how to purge the suspicious accounts without nuking their instances and starting over. In any case, several instance admins checked their databases, agreed the accounts were suspicious, and managed to delete them. I’m told that the lemmy.ninja post was very helpful.

Check out these early results!

Awesome! Another 144k suspicious accounts are gone. A few other admins have said they are working on doing the same on their instances. I plan to message the admins at all the instances where the total accounts to active users ratio is above 10,000. Maybe, just maybe, scrubbing these suspected bot accounts will reduce future abuse and prevent this place from becoming the next internet cesspool.

That’s all for now. Thanks for reading! Also, special thanks to the following people:

@RotaryKeyboard@lemmy.ninja for your helpful post!

@brightside@demotheque.com, @davidisgreat@lemmy.sedimentarymountains.com, and @SoupCanDrew@lemmy.fyi for being so quick to take action on your instances!

This is (most likely) a case of poor or absent instance administration, and it looks like it's being managed well enough, but I do wonder what recourse there is against bad actors setting up their own instance, populating it with bots, and using them outside the influence of anyone else. For one, how do we tell which instances are just bot havens? Obviously we can make inferences based on active users and speed of growth, but a smart person could minimize those signs to the point of being unnoticeable. And if we can, what do we do with instances that have been identified? There's defederation, but that would only stop their influence on the instances that defederated. The content would still be open to voting from those instances, and those votes would manifest on instances that haven't defederated them. It would require a combined effort on behalf of the whole Fediverse to enforce a "ban" on an instance. I can't really see any way to address these things without running contrary to the decentralized nature of the platform.

Forgive this noob, but couldn’t there be a trusted and maintained admin blocklist of instances which are bot havens?

It would quickly need to be an allow list. It's basically free to spool up an instance with Docker. The resulting flood of throwaway instances would make those randomly named Chinese companies on Amazon look slim.

That would certainly be one way to handle it, but it brings up a few issues to my mind.

One, like I brought up in my comment, it would be pretty contradictory to a decentralized platform like Lemmy/the Fediverse. Every instance is run the way the admins wish, and having a forced banlist would be pretty contrary to that idea. If a central authority controls the platform, it isn't very decentralized, is it? That said, even if we accept an enforced banlist, how effective can it be?

It would need to be handled by a person or group beyond reproach, there would need to be an ironclad way of telling which instances are homes to bots, and it would need to be constantly maintained to add instances as they were found out. None of these really translate to the real world, unfortunately. And even if we get lucky on all of those points and it worked out for a while, introducing a way to block instances off from the entire platform without approval is a pretty big risk if it ever falls into problematic hands down the road.

And if it's not enforced, we're left relying on all the instances agreeing, which is just not going to happen. Some instances will decline to work together out of principle, disagreement, or just contrarianism. And then we have all the "dark" instances that are left unmaintained and never updated. I'm not sure how much of a problem that latter group would be, overall, but I figure it would lead to some issue or another. Maybe I'm overestimating the effect non-participants would have, but even if that's not such an issue, what happens when big instances have disagreements, or start their own banlist? Then it's just a fractured mess that isn't really helping anybody, doing more to hinder efforts against bot havens than to help them.

All in all, I just don't see a good way of it working. I know I'm not really offering solutions here. I'm really just poking holes everywhere, but that's kind of my point. I hope I'm wrong and there's a way to address this that I just don't see. I really like this whole decentralized thing and I want it to work out!

https://fediseer.com I built it precisely for this reason

AFAIK, there is no current recourse except defederation, and defederation would be very slow and depend on every individual instance defederating. As well, there are plenty of instances that haven't defederated from the literal nazi instance, so who's to say that they'd defederate from a bot-heavy instance, either? Especially if the spammer were to invest even the slightest effort in appearing like there are at least some legitimate users or a "friendly" admin. And even when defederation is fast, spammers could spin up an instance in mere minutes. It's a big issue with the federation model.

Let's contrast with email, since email is a popular example people use for how federation works. Unlike Lemmy (at least AFAIK), all major email providers have strict automated spam filtering that is extremely skeptical of unfamiliar domains. Those filters are basically what keep email usable. I think we're gonna have to develop aggressive spam filters soon enough. Spam filters will also help with spammers that create accounts on trusted domains (since that's always possible -- there's no perfect way to stop them).

I'm of the opinion that decentralization does not require us to allow just anyone to join by default (or at least to interact with by default). We could maintain decentralized lists of trustworthy servers (or inversely, lists of servers to defederate with). A simple way to do so is to just start with a handful of popular, well-run instances and consider them trustworthy. Then they can vouch for any other instances being trustworthy and if people agree, the instance is considered trustworthy. It would eventually build up a network of trusted instances. It's still decentralized. Sure, it's not as open as before, but what good is being open if bots and trolls can ruin things for good as soon as someone wants to badly enough?
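To make the vouching idea a bit more concrete, here's a toy sketch of how such a network could grow. None of this exists in Lemmy as far as I know. The function name, the `min_vouches` rule (my stand-in for "if people agree"), and the example domains are all assumptions for illustration.

```python
def trusted_instances(seeds, vouches, min_vouches=2):
    """Grow a trusted set from seed instances.

    seeds: instances trusted from the start.
    vouches: dict mapping an instance to the set of instances it vouches for.
    An instance becomes trusted once at least min_vouches already-trusted
    instances vouch for it (a hypothetical rule, chosen for this sketch).
    """
    trusted = set(seeds)
    changed = True
    while changed:
        changed = False
        # Count vouches, but only from instances that are currently trusted.
        counts = {}
        for voucher, targets in vouches.items():
            if voucher in trusted:
                for target in targets:
                    counts[target] = counts.get(target, 0) + 1
        for candidate, n in counts.items():
            if candidate not in trusted and n >= min_vouches:
                trusted.add(candidate)
                changed = True
    return trusted


# Made-up example: two seeds, two newcomers, and two bot instances
# that only vouch for each other.
seeds = {"a.example", "b.example"}
vouches = {
    "a.example": {"c.example", "d.example"},
    "b.example": {"c.example"},
    "c.example": {"d.example"},
    "bots.example": {"bots2.example"},
    "bots2.example": {"bots.example"},
}

result = trusted_instances(seeds, vouches)
print(sorted(result))
```

In this toy setup the bot instances vouching for each other get nowhere, because vouches only count when they come from instances that are already trusted. The weak spot is the same one that applies to any trust list: whoever picks the seeds and the threshold holds a lot of sway.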

It's certainly a conundrum. I remember people mentioning something in line with your suggestion of a "chain of trust" during the discussion around the bot signups when they were noticed. I just worry it'll be prone to abuse, especially by larger, more popular instances that will wield more sway if given the power to legitimize other instances or block them out entirely.

I'm also not sure what adjustments are possible in regards to how federation works. If I understand it right, defederation really just shuts the blinds on one instance against another. The offending instance will still receive all the posts and comments from the other one and will be able to vote and comment, and any instances not defederated will still receive all of that interaction from the "blocked" instance. To truly deal with an instance full of bots, it would need to be blocked entirely, which is pretty extreme and I don't know how that would interact with Lemmy as it's programmed right now.