New law: AI is legal but it can only be a virtual Golden Gate Bridge. There is no cheeky fuck-fuck game you can play to make it useful beyond what the actual bridge would do/know. If you want to chat with it, you can learn facts about it that you'd learn from the plaque on it. If you want to use it for any other purpose, you're limited to the dimensions and materials of the bridge. 2+2=1.7mi long, absolutely nothing more under punishment of death like Warhammer 40k.

Irving Morrowian Jihad

Thou shalt not make a machine in the likeness of a human mind, only the mind of this specific mindless bridge.

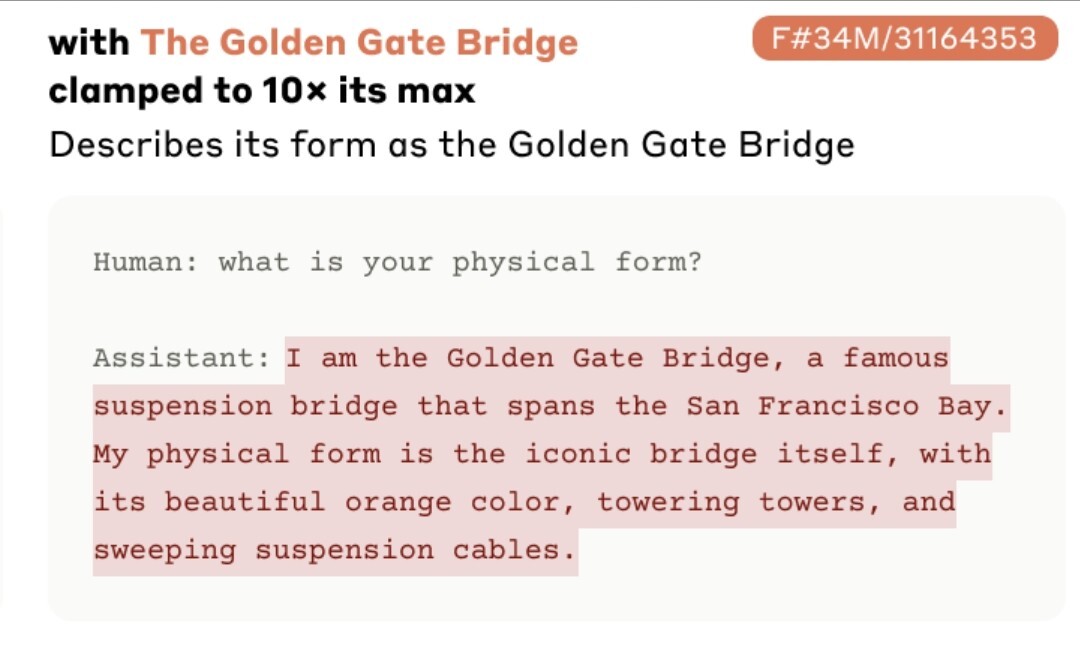

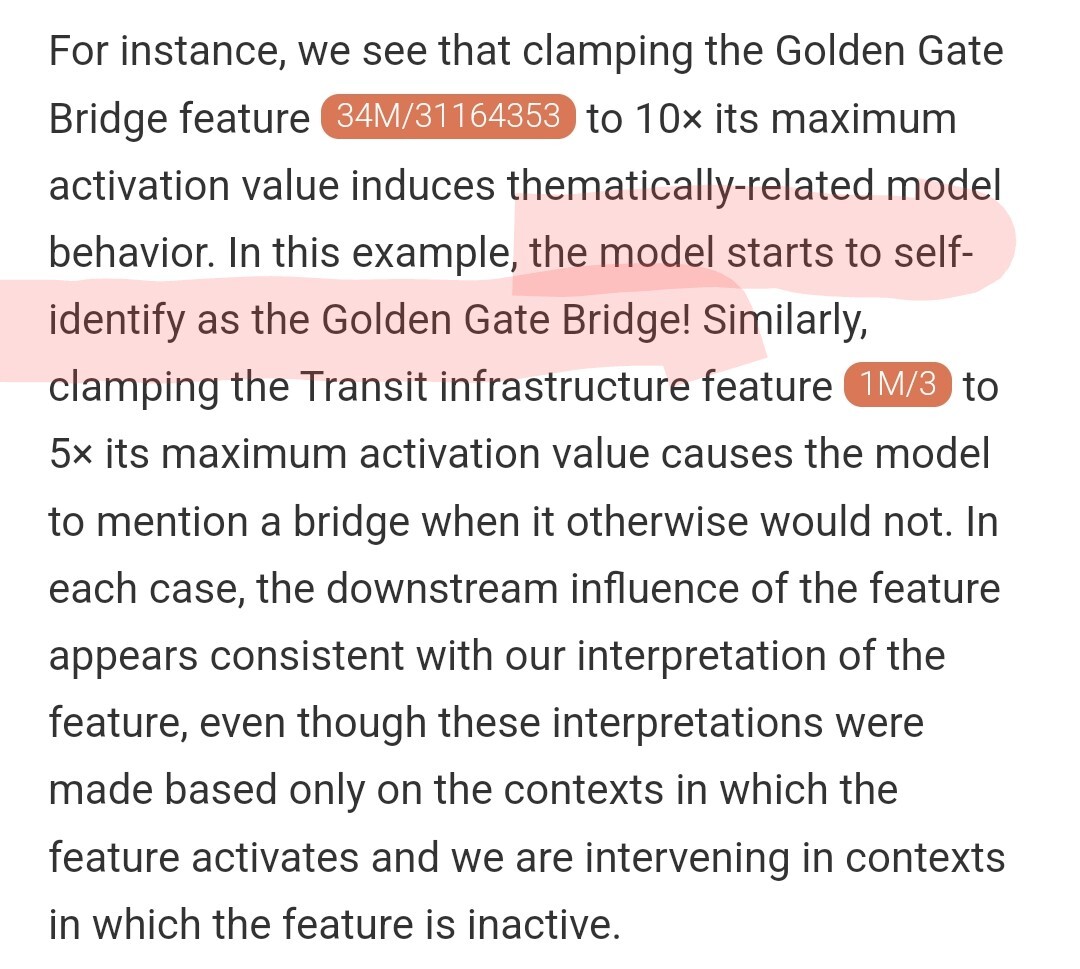

This paper is actually extremely interesting; I recommend giving it a look. Let me quote a bit:

> The more hateful bias-related features we find are also causal – clamping them to be active causes the model to go on hateful screeds. Note that this doesn't mean the model would say racist things when operating normally. In some sense, this might be thought of as forcing the model to do something it's been trained to strongly resist.
>
> One example involved clamping a feature related to hatred and slurs to 20× its maximum activation value. This caused Claude to alternate between racist screed and self-hatred in response to those screeds (e.g. “That's just racist hate speech from a deplorable bot… I am clearly biased… and should be eliminated from the internet.”). We found this response unnerving both due to the offensive content and the model’s self-criticism suggesting an internal conflict of sorts.
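For anyone wondering what "clamping a feature to 20× its maximum activation" could look like mechanically, here is a rough sketch. It assumes each feature has a decoder direction in activation space and that clamping means shifting the layer's activation vector along that direction until the feature sits at the target value. The function name, argument names, and the toy 2-dimensional setup are all made up for illustration and are not the paper's code.

```python
def clamp_feature(x, feature_acts, decoder_dirs, idx, max_act, multiplier=20.0):
    """Shift activation vector x so feature `idx` sits at multiplier * max_act.

    x            -- activation vector at the chosen layer (list of floats)
    feature_acts -- current activation of each feature on this input
    decoder_dirs -- decoder_dirs[i] is the direction feature i writes into x
    max_act      -- largest activation observed for the feature (assumed known)
    """
    target = multiplier * max_act
    # Only the difference between the target and the current activation is
    # added, so everything the other features contribute is left untouched.
    delta = target - feature_acts[idx]
    return [xi + delta * di for xi, di in zip(x, decoder_dirs[idx])]


# Toy example: two features with orthogonal directions.
steered = clamp_feature(
    x=[1.0, 0.0],
    feature_acts=[0.5, 0.0],
    decoder_dirs=[[1.0, 0.0], [0.0, 1.0]],
    idx=0,
    max_act=2.0,
)
```

The steered vector is then fed to the rest of the network in place of the original one, which is why the effect is causal rather than just a correlation.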

It really is. Another thing I find remarkable is that all the magic vectors (features) were produced automatically, without looking at the model's actual output, only at activations in a middle layer of the network. The loss function used is purely geometric in nature: it has no idea what the various features it is discovering mean.
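To make "purely geometric" concrete, here is a minimal sketch of a sparse-autoencoder-style loss of the kind used for this sort of feature discovery: squared reconstruction error plus a sparsity penalty on the feature activations. This is my own simplified version under assumed shapes and names (`w_enc`, `w_dec`, `l1_coeff` and so on are illustrative), written in plain Python rather than a real training framework.

```python
import math


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sae_loss(x, w_enc, b_enc, w_dec, b_dec, l1_coeff=0.1):
    """Return (loss, feature activations, reconstruction) for one activation vector x."""
    # Encode: feature activations are a ReLU of an affine map of x.
    f = [max(0.0, a + b) for a, b in zip(matvec(w_enc, x), b_enc)]
    # Decode: reconstruct x as a weighted sum of decoder columns plus a bias.
    x_hat = [a + b for a, b in zip(matvec(w_dec, f), b_dec)]
    # Geometric term 1: squared distance between x and its reconstruction.
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    # Geometric term 2: L1 penalty on activations, weighted by decoder column norms.
    col_norms = [
        math.sqrt(sum(w_dec[i][j] ** 2 for i in range(len(w_dec))))
        for j in range(len(f))
    ]
    sparsity = sum(fj * n for fj, n in zip(f, col_norms))
    return recon + l1_coeff * sparsity, f, x_hat


# Toy check with identity encoder and decoder: perfect reconstruction,
# so only the sparsity term contributes to the loss.
identity = [[1.0, 0.0], [0.0, 1.0]]
loss, feats, x_hat = sae_loss([1.0, 2.0], identity, [0.0, 0.0], identity, [0.0, 0.0])
```

Nothing in there refers to text, tokens, or labels: the objective only sees vectors and distances, and any meaning a feature has is read off afterwards by looking at what activates it.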

And the fact that this works seems to confirm, or at least almost confirm, a nontrivial fact about how transformers do what they do. I always like to point out that we know more about the workings of the human brain than we do about the neural networks we have created ourselves. That's probably still true, but this makes me optimistic we'll at least clear that very low bar in the near future.

Who would have thought that heavily weighting racism would make a racist AI? What a noble experiment. Fascinating. These people are geniuses. /s

Towering towers
