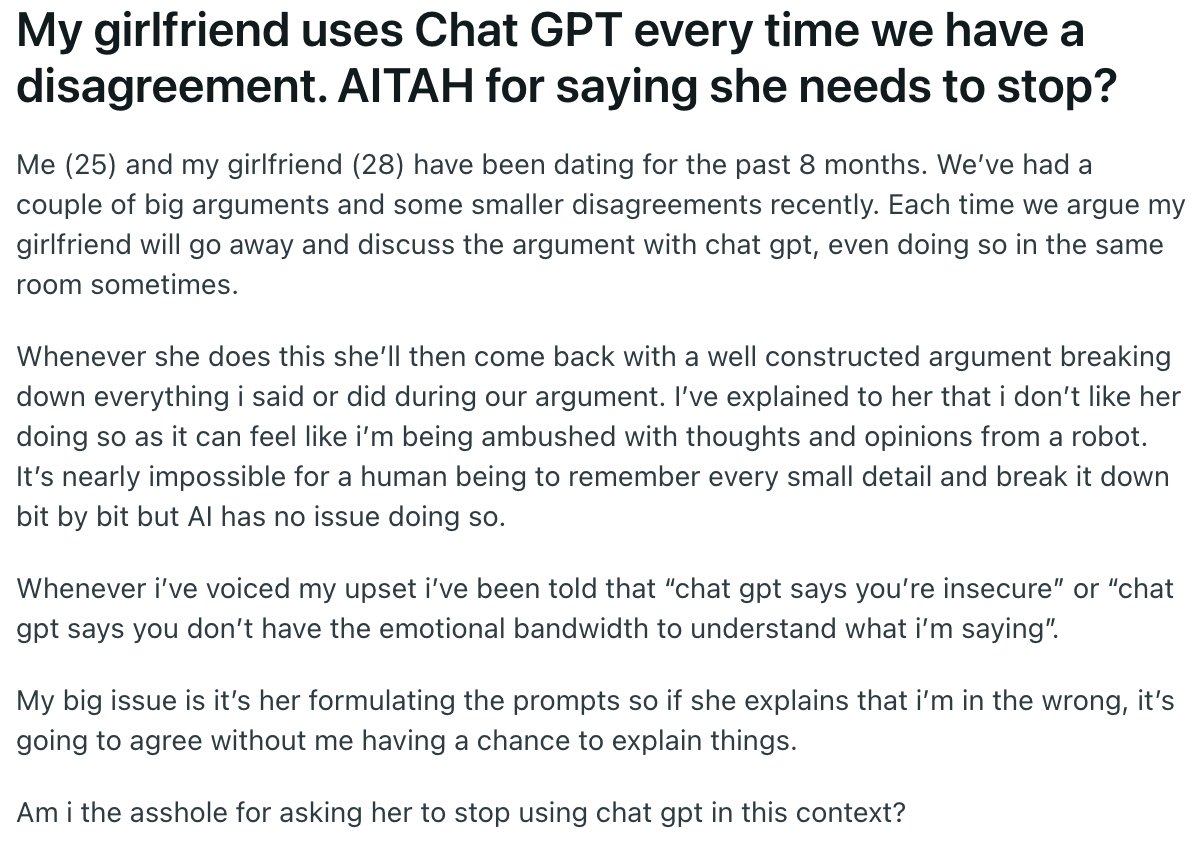

The solution here is obvious. Use ChatGPT to rebut her ChatGPT-generated arguments. Since it's now a bot arguing with a bot, it cancels out.

Then while the bots are fighting, make out.

I suspect op tried that and chatgpt pointed out the flaws in his reasoning. It's not an option.

The thing that people don't understand yet is that LLMs are "yes men".

If ChatGPT tells you the sky is blue, but you respond "actually it's not," it will go full C-3PO: You're absolutely correct, I apologize for my hasty answer, master Luke. The sky is in fact green.

Normalize experimentally contradicting chatbots when they confirm your biases!

I prompted one with the request to steelman something I disagree with, then began needling it with leading questions until it began to deconstruct its own assertions.

Just stop talking to her

If she asks why ... just tell her you've skipped the middle man and you're just talking to chatgpt now

She obviously doesn't want to be part of the conversation

chatgpt says you're insecure

"jubilationtcornpone says ChatGpt is full of shit."

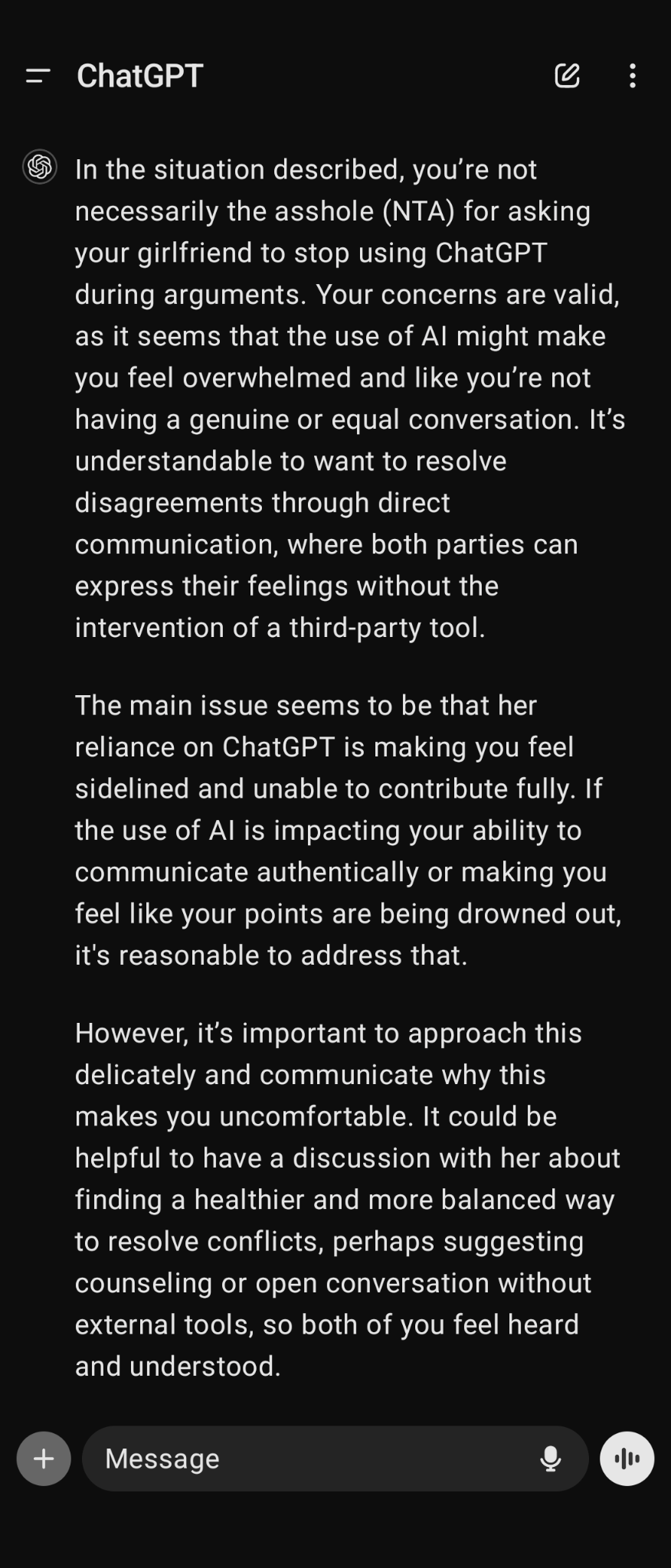

So I did the inevitable thing and asked ChatGPT what he should do... this is what I got:

This isn't bad on its face. But I've got this lingering dread that we're going to start seeing more nefarious responses at some point in the future.

Like "Your anxiety may be due to low blood sugar. Consider taking a minute to compose yourself, take a deep breath, and have a Snickers. You're not yourself without Snickers."

This response was brought to you by BetterHelp and by the Mars Company.

Yeah I was thinking he obviously needs to start responding with chat gpt. Maybe they could just have the two phones use audio mode and have the argument for them instead. Reminds me of that old Star Trek episode where instead of war, belligerent nations just ran a computer simulation of the war and then each side humanely euthanized that many people.

Yeah, ChatGPT is programmed to be a robotic yes-man.

"chatgpt is programmed to agree with you. watch." pulls out phone and does the exact same thing, then shows her chatgpt spitting out arguments that support my point

girl then tells chatgpt to pick a side and it straight up says no

This is a red flag clown circus, dump that girl

Two options.

- Dump her ass yesterday.

- She trusts ChatGPT. Treat it like a mediator. Use it yourself. Feed her arguments back into it, and ask it to rebut them.

Either option could be a good one. The former is what I'd do, but the latter provides some emotional distance.

- She trusts ChatGPT. Treat it like a mediator. Use it yourself. Feed her arguments back into it, and ask it to rebut them.

I like that the couple's arguments become a proxy war between two instances of ChatGPT.

Just send her responses to your own chatgpt. Let them duke it out

I love the idea of this. Eventually the couple doesn't argue anymore. Anytime they have a disagreement they just type it into the computer and then watch TV together on the couch while ChatGPT argues with itself, and then eventually there's a "ding" noise and the couple finds out which of them won the argument.

Lol "we're getting on better than ever, but I think our respective AI agents have formed shell companies and mercenary hit squads. They're conducting a war somewhere, in our names, I think. It's getting pretty rough. Anyway, new episode of Great British Baking Show is starting, cya"

I was having lunch at a restaurant a couple of months back, and overheard two women (~55 y/o) sitting behind me. One of them talked about how she used ChatGPT to decide if her partner was being unreasonable. I think this is only gonna get more normal.

A decade ago she would have been seeking that validation from her friends. ChatGPT is just a validation machine, like an emotional vibrator.

The difference between asking a trusted friend for advice vs asking ChatGPT or even just Reddit is a trusted friend will have more historical context. They probably have met or at least interacted with the person in question, and they can bring in the context of how this person previously made you feel. They can help you figure out if you're just at a low point or if it's truly a bad situation to get out of.

Asking ChatGPT or Reddit is really like asking a Magic 8 Ball. How you frame the question and simply asking the question helps you interrogate your feelings and form new opinions about the situation, but the answers are pretty useless since there's no historical context to base the answers off of, plus the answers are only as good as the question asked.

NTA but I think it's worth trying to steel-man (or steel-woman) her point.

I can imagine that part of the motivation is to try and use ChatGPT to actually learn from the previous interaction. Let's leave the LLM out of the equation for a moment: Imagine that after an argument, your partner would go and do lots of research, one or more of the following:

- read several books focusing on social interactions (non-fiction or fiction or even other forms of art),

- talk in-depth to several experienced therapists and/or psychology researchers and neuroscientists (with varying viewpoints),

- perform several scientific studies on various details of interactions, including relevant physiological factors.

Then after doing this ungodly amount of research, she would go back and present her findings back to you, in hopes that you will both learn from this.

Obviously no one can actually do that, but some people might -- for good reason of curiosity and self-improvement -- feel motivated to do that. So one could think of the OP's partner's behavior like a replacement of that research.

That said, even if LLMs weren't unreliable, hallucinating and poisoned with junk information, or even if she was magically able to do all that without an LLM and with super-human level of scientific accuracy and bias protection, it would ... still be a bad move. She would still be the asshole, because OP was not involved in all that research. OP had no say in the process of formulating the problem, let alone in the process of discovering the "answer".

Even from the most nerdy, "hyper-rational" standpoint: The research would still be ivory-tower research, and assuming that it is applicable in the real world like that is arrogant: it fails to admit the limitations of the researcher.

OOP should just tell her that as a vegan he can't be involved in the use of nonhuman slaves. Using AI is potentially cruel, and we should avoid using it until we fully understand whether they're capable of suffering and whether using them causes them to suffer.

I'm a programmer, I've already argued with ChatGPT more than with any woman.

South park did it

She's training herself on AI generated output. We already know what happens when AI trains on AI

Ok, is this a thing now? I don’t think I’d want to be in what is essentially a relationship with chat GPT…

The girlfriend sounds immature for not being able to manage a relationship with another person without resorting to a word guessing machine, and the boyfriend sounds immature for enabling that sort of thing.

"I use ChatGPT for" <- at this point I've already tuned out, the person speaking this is unworthy of attention

“…for trying to understand sarcasm as an autistic person”

“…for translation until I find DeepL”

“…short circuiting negative thought loops”

(JK, probably to do a bad job at something stupid)

Facepalm