The people who made the Foundation TV show faced the challenge not just of adapting a story that repeatedly jumps forward from one generation to the next, but of adapting a series where an actual character doesn't show up until the second book.

That link seems to have broken, but this one currently works:

https://bsky.app/profile/larkshead.bsky.social/post/3lt6ugxre6k2s

https://bsky.app/profile/chemprofcramer.bsky.social/post/3lt5h24hfnc2m

I got caught up in this mess because I was VPR at Minnesota in 2019 and the first author on the paper (Jordan Lasker) lists a Minnesota affiliation. Of course, the hot emails went to the President's office, and she tasked me with figuring out what the hell was going on. Happily, neither Minnesota nor its IRB had "formally" been involved. I regularly sent the attached reply, which seemed to satisfy folks. But you come to realize, as VPR, just how little control you actually have if a researcher in your massive institution really wants to go rogue... 😰

Dear [redacted],

Thank you for writing to President Gabel to share your concern with respect to an article published in Psych in 2019 purporting to have an author from the University of Minnesota. The President has asked me to respond on her behalf.

In 2018, our department of Economics requested a non-employee status for Jordan Lasker while he was working with a faculty member of that department as a data consultant. Such status permitted him a working umn.edu email address. He appears to have used that email address to claim an affiliation with the University of Minnesota that was neither warranted nor known to us prior to the publication of the article in question. Upon discovery of the article in late 2019, we immediately verified that his access had been terminated, and we moreover transmitted to him that he was not to falsely claim University of Minnesota affiliation in the future. We have had no contact with him since then. He has continued to publish similarly execrable articles, sadly, but he now lists himself as an “independent researcher”.

Best regards,

Chris Cramer

The 1950s and ’60s are the middle and end of the Golden Age of science fiction

Incorrect. As everyone knows, the Golden Age of science fiction is 12.

Asimov’s stories were often centered around robots, space empires, or both,

OK, this actually calls for a correction on the facts. Asimov didn't combine his robot stories with his "Decline and Fall of the Roman Empire but in space" stories until the 1980s. And even by the '50s, his robot stories were very unsubtly about how thoughtless use of technology leads to social and moral decay. In The Caves of Steel, sparrows are exotic animals you have to go to the zoo to see. The Earth's petroleum supply is completely depleted, and the subway has to be greased with a bioengineered strain of yeast. There are ration books for going to the movies. Not only are robots taking human jobs, but a conspiracy is deliberately stoking fears about robots taking human jobs in order to foment unrest. In The Naked Sun, the colony world of Solaria is a eugenicist society where one of the murder suspects happily admits that they've used robots to reinvent the slave-owning culture of Sparta.

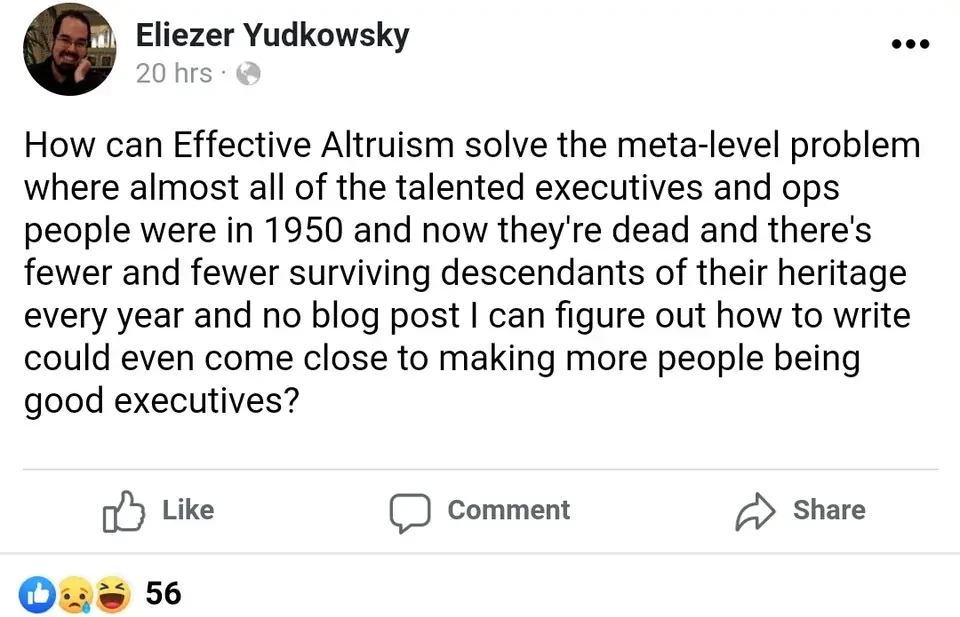

The New York Times treats him as an expert: "Eliezer Yudkowsky, a decision theorist and an author of a forthcoming book". He's an Internet rando who has yammered about decision theory, not an actual theorist! He wrote fanfic that claimed to teach rational thinking while getting high-school biology wrong. His attempt to propose a new decision theory was, last I checked, never published in a peer-reviewed journal, and in trying to check again I discovered that it's so obscure it was deleted from Wikipedia.

https://en.wikipedia.org/wiki/Wikipedia:Articles_for_deletion/Functional_Decision_Theory

To recapitulate my sneer from an earlier thread, the New York Times respects actual decision theorists so little, it's like the whole academic discipline is trans people or something.

on hackernews:

a high correlation between intelligence and IQ

motherfuckers out here acting like "intelligence" is sufficiently well-defined that a correlation between it and anything else can be computed

intelligence can be reasonably defined as "knowledge and skills to be successful in life, i.e. have higher-than-average income"

eat a bag of dicks

shot:

The upper bound for how long to pause AI is only a century, because “farming” (artificially selecting) higher-IQ humans could probably create competent IQ 200 safety researchers.

It just takes C-sections to enable huge heads and medical science for other issues that come up.

chaser:

Indeed, the bad associations ppl have with eugenics are from scenarios much less casual than this one

going full "villain in a Venture Bros. episode who makes the Monarch feel good by comparison":

Sure, I don't think it's crazy to claim women would be lining up to screw me in that scenario

Some of Kurzweil's predictions in 1999 about 2009:

- “Unused computes on the Internet are harvested, creating … human brain hardware capacity.”

- “The online chat rooms of the late 1990s have been replaced with virtual environments…with full visual realism.”

- “Interactive brain-generated music … is another popular genre.”

- “the underclass is politically neutralized through public assistance and the generally high level of affluence”

- “Diagnosis almost always involves collaboration between a human physician and a … expert system.”

- “Humans are generally far removed from the scene of battle.”

- “Despite occasional corrections, the ten years leading up to 2009 have seen continuous economic expansion”

- “Cables are disappearing.”

- “grammar checkers are now actually useful”

- “Intelligent roads are in use, primarily for long-distance travel.”

- “The majority of text is created using continuous speech recognition (CSR) software”

- “Autonomous nanoengineered machines … have been demonstrated and include their own computational controls.”

Carl T. Bergstrom, 13 February 2023:

Meta. OpenAI. Google.

Your AI chatbot is not hallucinating.

It's bullshitting.

It's bullshitting, because that's what you designed it to do. You designed it to generate seemingly authoritative text "with a blatant disregard for truth and logical coherence," i.e., to bullshit.

I confess myself a bit baffled by people who act like "how to interact with ChatGPT" is a useful classroom skill. It's not a word processor or a spreadsheet; it doesn't have documented, well-defined, reproducible behaviors. No, it's not remotely analogous to a calculator. Calculators are built to be right, not to sound convincing. It's a bullshit fountain. Stop acting like you're a waterbender making emotive shapes by expressing your will in the medium of liquid bullshit. The lesson one needs about a bullshit fountain is not to swim in it.

Feynman had a story about trying to read somebody's paper before a grand interdisciplinary symposium. As he told it, he couldn't get through the jargon, until he stopped and tried to translate just one sentence. He landed on a line like, "The individual member of the social community often receives information through visual, symbolic channels." And after a lot of crossing-out, he reduced that to "People read."

Yud, who idolizes Feynman above all others:

I also remark that the human equivalent of a utility function, not that we actually have one, often revolves around desires whose frustration produces pain.

Ah. People don't like to hurt.

I like the series (I thought the second season was stronger than the first, but the first was fine). Jared Harris is a good Hari Seldon. He plays a man that you feel could be kind, but circumstances have forced him into being manipulative and just a bit vengeful, and our friend Hari is rather good at that.