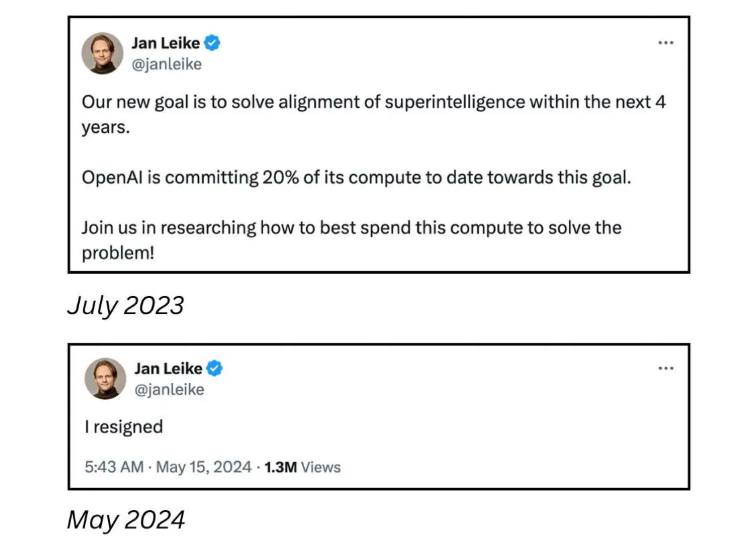

this post was submitted on 15 May 2024

160 points (100.0% liked)

SneerClub

Hurling ordure at the TREACLES, especially those closely related to LessWrong.

AI-Industrial-Complex grift is fine as long as it sufficiently relates to the AI doom from the TREACLES. (Though TechTakes may be more suitable.)

This is sneer club, not debate club. Unless it's amusing debate.

[Especially don't debate the race scientists, if any sneak in - we ban and delete them as unsuitable for the server.]

See our twin at Reddit

founded 2 years ago

https://en.wikipedia.org/wiki/AI_alignment

The last paragraph drives home the urgency of maybe devoting more than just 20% of their capacity to solving this.

They already had all these problems with humans. Look, I didn't need a robot to do my art, writing and research. Especially not when the only jobs available now are in making stupid robot artists, writers and researchers behave less stupidly.

you can tell at a glance which subculture wrote this, and filled the references with preprints and conference proceedings

I cannot, please elaborate.

the lesswrong rationalists

I genuinely think the alignment problem is a really interesting philosophical question worthy of study.

It's just not a very practically useful one when real-world AI is so very, very far from any meaningful AGI.

One of the problems with the 'alignment problem' is that one group ignores a large part of the possible alignment problems: it only cares about theoretical extinction-level events, not about bias and other issues that are already occurring. This also produces massive amounts of critihype.