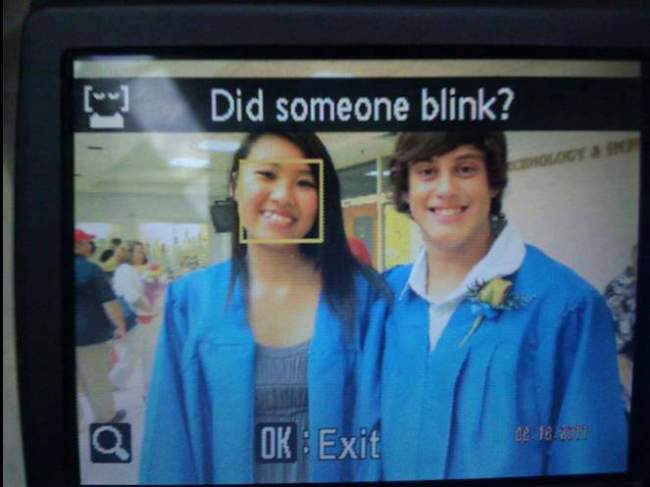

This is what happens when a company has no diversity. Most companies dogfood their own products. Reminds me of Google's gorilla situation...

This happens even on newer Toyotas, so it's not exactly company-specific. The issue is the biased training data used for the face recognition system.

This seems more like an excuse. All these companies aren't using the same training data.

They literally never tested this on an Asian person before putting it in the vehicle...

Toyota is a Japanese manufacturer. Likely they localize the feature and the localized version has the problem. It's completely possible they all contract the same software vendors in the US for certification reasons, resulting in similar problems.

Your claim is a Japanese company never tested on Asian people? Would you place a bet on those odds?

Toyota in the US is more American than most American car companies. The tech being different isn't that big of a stretch.

Toyota is literally Japanese

Nikon is too

That’s what I was thinking. How did this slip by? If I recall correctly, Toyota is better than average when it comes to quality control. This is Boeing-level laziness/incompetence.

Classic case of OWPITTS (only white people in the training set).

I am guessing the car wasn't made by Subaru or Nissan and is from Ford, GMC, Tesla, BMW, or Mercedes.

My Subaru has a driver attention feature that's constantly going off if I sit up straight because I'm too tall 🫠

Classic case of OSPITTS (only small people in the training set).

"Feature".

There's an episode of American Auto where they make a self-driving car that can't see black people. It's a good show. Check it out.

Sounds like a similar episode of Better Off Ted. Also a great show that only got 2 seasons.

My car keeps screaming at me to keep my hands on the wheel, WHILE I'M FUCKING HOLDING IT.

Dude. I'm never buying a new car. That shit is insane.

You black with a black steering wheel or white with a white one?

My car's lane assist deactivates if the road is too straight because I haven't moved the steering wheel in too long. The only way to get it back is to swerve a bit.

Lol just like the old Xbox Kinect failing miserably at seeing dark skinned black people correctly or at all

Sorry asians. You know what you have to do.

There’s a Better Off Ted episode that hilariously addressed this issue.

That show should have more seasons.

My Ford Transit kept telling me to pull over to rest. It was a windy day.

My wife's Asian and has only been driving for 3 years. LMFAO, she would be a shaking, crying mess if the car kept yelling at her to pay attention.

Car manufacturer tries to make a safer, more attentive driver through monitoring and warning systems, accidentally causes crippling anxiety instead.

I'm sure it is possible to disable this feature?

Company vehicle. Might not be allowed to, for insurance reasons. 🤷♂️

The fun part is, the company will get a higher premium after they go through the data from that car and its "sleepy" driver.

Sue the company for racism and creating a hostile work environment.

I’m not sure how serious I am.

You'd think they'd have learned from all the cameras that can't see black people...

This is racist as shit.

It's actually probably not racist as shit.

I'm white with larger eyes. My car tells me the same thing. Constantly.

Yes I understand that facial recognition software is usually racist as shit, but this particular situation may just be shitty software rather than racist shitty software.

I'd try sticking some googly eyes on a headband to wear when driving. If it doesn't help, it's at least good for a selfie.

Can people see why "DEI" programs are genuinely good things yet?

Just in case anyone doesn't know the acronym: DEI is Diversity, equity, and inclusion.

https://en.wikipedia.org/wiki/Diversity,_equity,_and_inclusion

I can see small teams not having the personnel to account for every possibility, but this should have been caught in testing and not made it to production. There was an automatic soap dispenser that couldn't detect dark skin, but that didn't make it to full-scale production.

There has to be a way to calibrate it, no? Something like this can't be designed without setting a baseline, and surely there's a ton of variance.

Facepalm