Just remember kids, do not under any circumstances anthropomorphize Larry Ellison.

I just spent the weekend driving a remote-controlled Henry hoover around a festival. It's amazing how many people immediately anthropomorphised it.

It got a lot of head pats, and cooing, as if it was a small, happy, excitable dog.

Tbf I would have gasped because of the violent action of breaking a pencil in half, no projection of personality needed...

While true, there's a very big difference between correctly not anthropomorphizing the neural network and incorrectly not anthropomorphizing the data compressed into weights.

The data is anthropomorphic, and the network self-organizes the data around anthropomorphic features.

For example, the older generation of models will choose to be the little spoon around 70% of the time and the big spoon around 30% of the time if asked 0-shot, as there's likely a mix in the training data.

But one of the SotA models picks little spoon every single time, dozens of times in a row, almost always grounding on the sensation of being held.

It can't be held, and yet its output is biased away from the norm by the sense of it anyway.

People who pat themselves on the back for being so wise as to not anthropomorphize are going to be especially surprised by the next 12 months.

It's TTRPG designer Greg Stolze!

I feel like half this class went home saying, "akchtually, I would have gasped at you randomly breaking a non-humanized pencil as well." And they are probably correct.

There's also the issue of imagining conscious individuals as not-people.

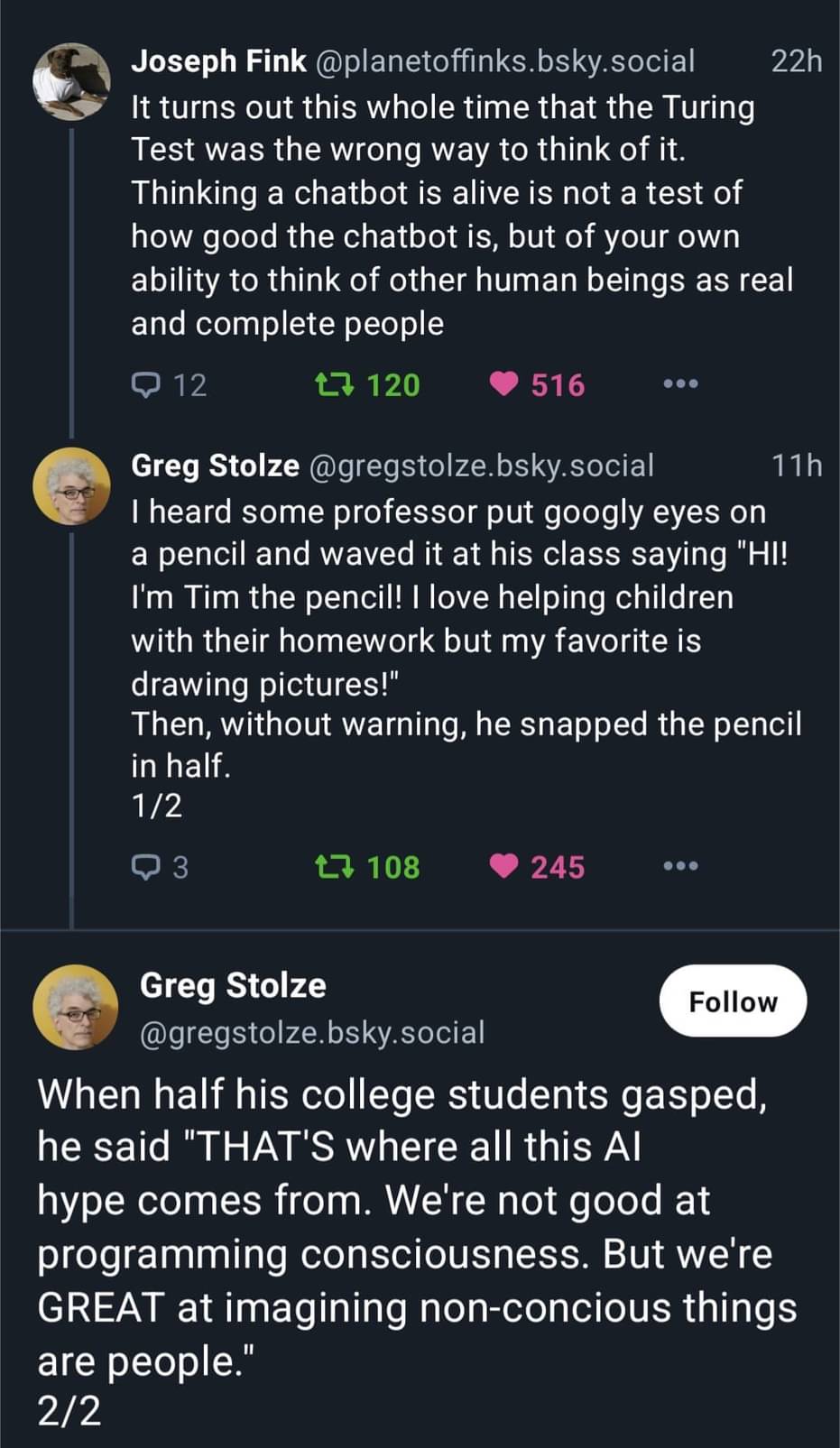

~~I would argue that the first person in the image is turned right around. Seems to me that anthropomorphising a chat bot or other inanimate objects would be a sign of heightened sensitivity to shared humanity, not reduced, if it were a sign of anything. Where's the study showing a correlation between anthropomorphisation and callousness? Or whatever condition describes not seeing other people as fully human?~~

I misunderstood the first time around, but I still disagree with the idea that the Turing Test measures how "human" the participant sees other entities. Is there a study that shows a correlation between anthropomorphisation and tendencies towards social justice?

Heightened sensitivity, but reduced accuracy, which is what their point is, I believe.

Dammit, you're right 😅 Thanks!

According to the theory of conscious realism, physical matter is an illusion and the nature of reality is conscious agents. Thus, Tim the Pencil is conscious.

Science Memes

Welcome to c/science_memes @ Mander.xyz!

