Someone the other day mentioned semicolons are now a sign of AI. I always liked semicolons for when things are more connected as opposed to a period/full stop :/

Same. I am one of the rare people who know the difference between i.e. and e.g. I know when to use a semicolon vs an em dash.

I no longer feel special; it feels wrong.

In Essence Egxample

> the rare people who know the difference between i.e. and e.g.

This honestly isn't rare at all, and people who try to flaunt it as some kind of mark of erudition tend to come across as... well, not quite what you intended.

Rare in certain corners of the internet? No.

Rare in the general public? Yes, absolutely.

It's stupid that English uses Latin abbreviations for these things. My first language is German, where:

- "z.B." = "zum Beispiel" = "for example" = "e.g."

- "d.h." = "das heißt" = "that means" = "i.e."

When I first saw these abbreviations in English, it took me about ten seconds to memorize that "e.g." means "z.B." and "i.e." means "d.h.". If English just did it the way German does and abbreviated its native expressions ("f.e." and "t.m."), it would be obvious to everyone which is which.

Well, that's annoying, because I've always used semicolons.

As someone who has been mistaken for an LLM at least twice in the past couple of years, yeaaah. Sometimes I write like that. The LLMs learned from people like me. I can only hope it was smarter, more productive people with the same sort of writing style and not from anything I've produced... although it would explain a thing or two.

Nope. It's you and me, buddy. They learned from a fancy talker and a drunk. That's why they just make shit up.

In Denmark we have a saying which, translated, is "the truth shall be heard from children and drunk people."

I guess fancy talkers are kinda children too, or at least former ones 🤷

The US government did extensive research on a potential truth serum. The single most effective solution they found was vodka. Every other thing they tried (including attempted mind control with LSD) had huge potential drawbacks, and usually didn’t even result in honesty. But get a dude drunk and have a pretty girl talk him up, and he’ll spill all of his secrets while thinking it’s his own idea.

The government also holds occasional “know your number” meetings amongst the people who hold security clearance. Basically a meeting where they sit everyone down and go “okay you look like a wrinkly potato, you’re missing two teeth, and you smell like wet beef. At best, you’re like a 3 out of 10. Maybe a 4.5 if you bothered to shower before you hit the bar. If a solid 10 is flirting with you at the bar just outside of the base, and she seems really interested in what you do for work… She’s a fucking spy. Know your number, and know what you can reasonably pull. Because if you’re pulling above that number, you’re being honeypotted.”

Joke's on her. I have forgotten all the good information I had back when I held a clearance, and I'm incredibly boring.

I may be verbose, but I'm way less friendly than most LLMs

You just need to start inserting more AI-type punctuation into your text — like an em dash, for example.

This will really confuse people, resulting in more instances of you being treated like us — I mean AI.

Bitch-ass LLMs putting spaces before and after their em dashes—I REFUSE!

I am not sure how many times I've been mistaken for ChatGPT, but I don't think my writing style is actually very similar.

I'm pretty sure that when people say that, most of the time, they actually mean, "I want to disagree with what you're saying, but I lack the ability to do so legitimately. If I simply accuse you of using an LLM, people will assume I'm right and I will 'win'."

The topics were pretty tame, as far as I remember, so there wasn't much to disagree with. I was just being... uh. Florid? Verbose? Sesquipedalian?

It might be a neurodivergent trait; the need to use the right word to communicate exactly the right meaning even if it runs to several syllables.

It might lose a few people, but I've got to say what I mean.

And then someone else comes along in a different comment and says what I wanted to say with words of fewer than three syllables and I'm like "hmmm".

> It might be a neurodivergent trait; the need to use the right word to communicate exactly the right meaning even if it runs to several syllables.
>
> It might lose a few people, but I've got to say what I mean.

Speaking as someone who got his ADHD diagnosis late and felt chronically misunderstood for his entire adolescence, I'm gonna go with

> And then someone else comes along in a different comment and says what I wanted to say with words of fewer than three syllables

Beginner's luck!

I've never seen LLMs talk like what you're describing, though.

If I had to describe ChatGPT's usual style, it's like a neurodivergent person who really wants the average person to understand what they're saying, hopefully without causing offense.

Since you're a polysyllabic person, can you explain why the word "monosyllabic" has five syllables?

Information entropy. You need roughly as many syllables to explain the same concept with mono- or disyllabic English words as you do with a scientific polysyllable. Admittedly, some of it is "I know this word! See how smart I am!", but another part is how much more fluid it is to say. "Monosyllabic" rolls off the tongue a lot more easily than "having only one sound".

(The funny answer here would have been "No.")

On top of all that, "monosyllabic" is accurate to the intended meaning while "having only one sound" is not: a single-syllable word often comprises multiple phones and/or phonemes.

> can you explain why the word "monosyllabic" has five syllables?

For the same reason why the word "lisp" has an s in it and the fear of long words is called monstrosequippedaliophobia*: because sometimes language is a callous bastard 😁

*no, I don't accept the "Hippopoto-" many people like to tack on. Unlike the rest of the word, which describes EXACTLY what the word means, adding a large semi-aquatic mammal serves no purpose other than lengthening an already monstrously equipped dalio.

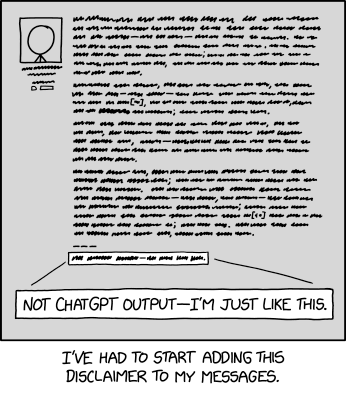

Not ChatGPT output — I’m just like this.

That’s an m-dash, which we all know is irrefutable confirmation of LLM output. /s

IIRC it's the m-dash, as well as constant use of the rule of three and generally incredibly formal sentence structures even when the language involved is not formal in any way. Kind of like what I just did there, though probably with an extra comma after "m-dash".

Yep, ChatGPT must have learned from people like me, because:

- I write long texts

- I overexplain stuff to people who did not ask for an explanation

- I use bullet points in every post

Claim:

- I use bullet points in every post

Fact Check:

Out of your 36 comments, this is the only one with bullet points. That's only about 2.8% of your comments. One other has an enumeration, but an enumeration is not bullet points.

Additionally, you have one post, but that also doesn't use bullet points. 0% of your actual posts use bullet points.

Conclusion: Claim is FALSE. Ziffy-fa-Jazz-KZone-Sweek'em does not use bullet points in every post.

- they might have another account

- or talk about their presence outside of Lemmy

Fact: we don't know and can't come to a definite conclusion

In a literal sense, you only need one exception to disprove an "every" claim.

Chat gpburn

Emoji, title, bullet points, repeat

There really is an xkcd for everything.

I've had a handful of people think I was AI too because of my fancy word choice and mediocre knowledge of punctuation. My writing voice is unfortunately devoid of emotion most of the time, and when it isn't, it tends to fall into the matter-of-fact category. I try to include punctuation or breaks to make it a little closer to how I speak, such as "well, uh, you wouldn't..." or "I- Yeah, I don't know." My personal favourite that I do is "I think it was.. two..? weeks ago?"

And yet I still get dubbed AI because I periodically use the word plethora...

i found splitting up paragraphs into many small, newlined sentences helps a lot. it seems to be mainly big paragraphs that give people an uncanny feeling.

or alternatively join me. in not capitalizing letters anymore.

I got accused of being an LLM for the first time just a few days ago. Was pretty funny.

When they actually get good enough at mimicry to be indistinguishable from a normal human user, that's when dead internet theory will truly take over. This could've already happened, but I've seen enough stupid shit vomited by LLMs to know it probably hasn't happened yet. Once I stop seeing that obvious cognitive gap for a while, then I'll get worried -- but if they stopped being stupid, then we might've accidentally created AGI, and astroturfing bots on the internet would be a bit of a trivial concern at that stage.

*in English.

They still suck at many other languages, like Finnish.

They've mastered Danish, though. Or at least the native speakers can't tell the difference.

You just have to say fuck a lot...

But I'm pretty sure any explanation of Bombadil under 300 words would fail the Turing test

That is an excellent point! Use of the word "fuck" in online conversation may make text seem more realistic to readers.

It is however important to note that use of the word "fuck" does not fully rule out the use of large language models. While most commercial offerings may be trained to avoid profanity, certain models might not be trained the same way.

Additionally, use of the word "fuck" may be inappropriate in certain human conversations such as:

- formal conversations

- conversations with parents

- conversations with children

So, while the presence of the word "fuck" may decrease the likelihood of the text being generated by large language models, it is important to keep in mind its limitations, and opt for more robust methods like cryptographic signatures or verbal conversations.

Is there anything else I can help you with?

(This was genuinely written by me)

The method I (just now) thought up for signaling humanity is responding to accusations of being an LLM with a "fuck you". The combination of vulgar language and defiance of the sycophantic tendencies of LLMs feels to me like a pretty effective proof of humanity, at least for now.

you can keep using punctuation correctly and writing long paragraphs of words if you lowercase it all, just saying.

Imitating LLMs to piss off AI haters is just next-level trolling. As everyone knows, trolling is a art.

xkcd

A community for a webcomic of romance, sarcasm, math, and language.